Artificial Analysis, an AI research organization, recently launched the "Artificial Analysis Text to Image Leaderboard & Arena," an initiative aimed at comprehensively evaluating the performance of text-to-image models.

Overview of the Evaluation Platform

Since the introduction of diffusion-based image generators two years ago, AI image models have reached photorealistic quality. The Artificial Analysis Text to Image Leaderboard & Arena compares open-source and proprietary image generation models, judging their effectiveness and accuracy by human preference.

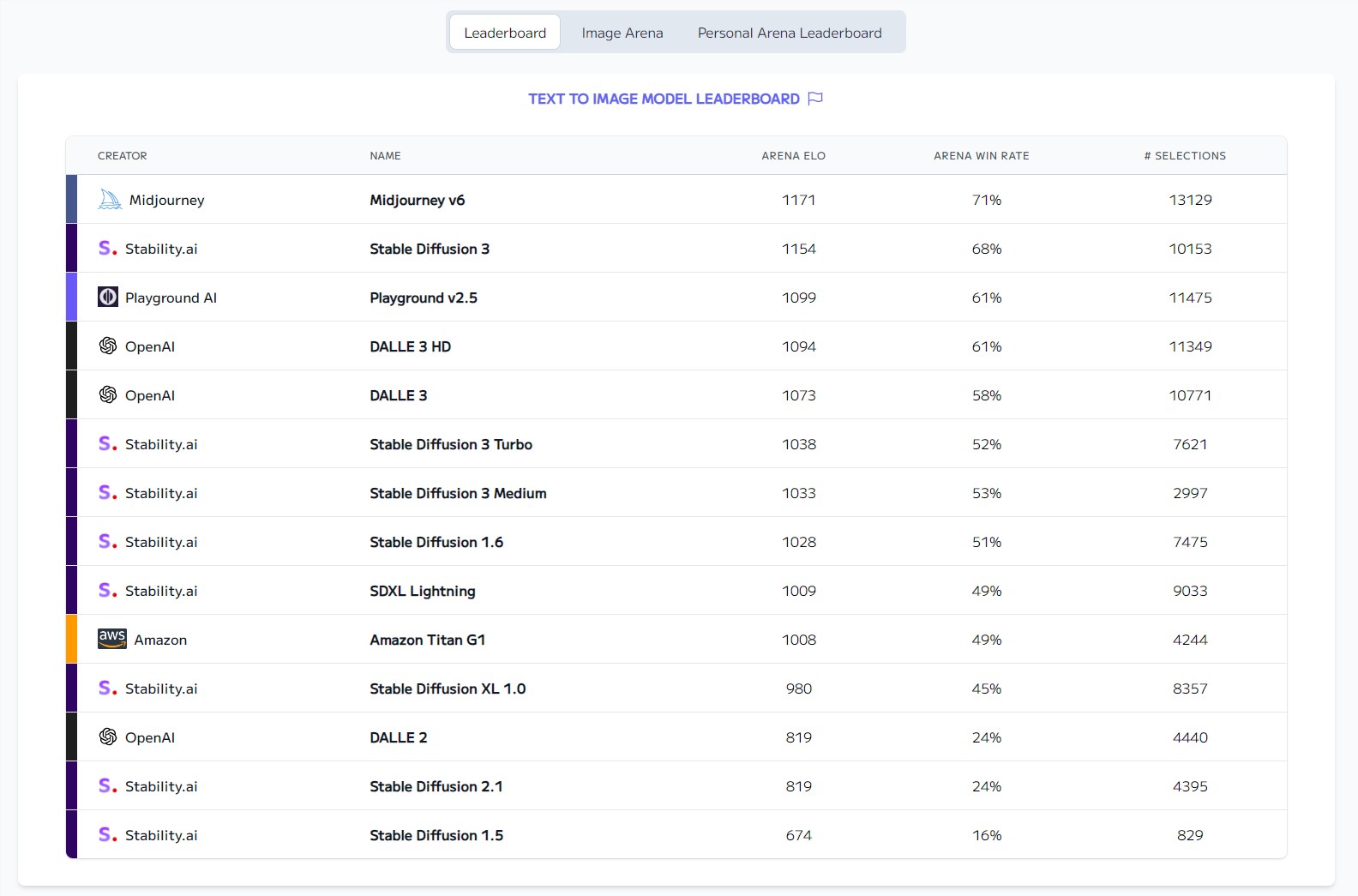

The platform's leaderboard is computed with the Elo rating system from more than 45,000 human image preferences collected through the Artificial Analysis Image Arena. The evaluation covers several leading image models, including Midjourney, OpenAI's DALL·E, Stable Diffusion, and Playground AI.

Evaluation Methodology

The platform collects large-scale human preference data through crowdsourcing. Participants are shown a prompt and two generated images, then select the image that better matches the prompt. Each model generates over 700 images covering a range of styles and categories, such as portraits, groups, animals, nature, and art. The collected preference data is used to calculate each model's Elo score, and those scores form the comparative ranking.
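To make the scoring step concrete, here is a minimal sketch of how pairwise preferences can be turned into Elo ratings. It uses the textbook sequential Elo update and is not confirmed to be the procedure Artificial Analysis runs. The starting rating of 1000, the K-factor of 32, the function names, and the model names in the sample votes are all assumptions chosen for illustration.

```python
from collections import defaultdict

# Assumed constants: the article does not state the platform's actual values.
BASE_RATING = 1000.0
K_FACTOR = 32.0


def expected_score(rating_a, rating_b):
    """Probability that A is preferred over B under the standard Elo model."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


def update_ratings(votes, k=K_FACTOR, base=BASE_RATING):
    """Apply one Elo update per (winner, loser) vote, in order."""
    ratings = defaultdict(lambda: base)
    for winner, loser in votes:
        expected_win = expected_score(ratings[winner], ratings[loser])
        delta = k * (1.0 - expected_win)  # larger gain for an unexpected win
        ratings[winner] += delta
        ratings[loser] -= delta
    return dict(ratings)


# Hypothetical votes: each tuple is (preferred model, other model).
votes = [
    ("model_a", "model_b"),
    ("model_a", "model_c"),
    ("model_b", "model_c"),
]
ratings = update_ratings(votes)
leaderboard = sorted(ratings.items(), key=lambda item: item[1], reverse=True)

for name, rating in leaderboard:
    print(f"{name}: {rating:.1f}")
```

Because every update adds to the winner exactly what it subtracts from the loser, the total of all ratings stays constant. Sequential Elo also depends on the order in which votes arrive, so a production leaderboard may fit ratings over all votes at once instead.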

Preliminary Insights

The leaderboard shows that while proprietary models lead in performance, open-source alternatives are becoming increasingly competitive. Models like Midjourney, Stable Diffusion 3, and DALL·E 3 HD top the list, while the open-source model Playground AI v2.5 has made significant progress, surpassing OpenAI's DALL·E 3.

Notably, the landscape of image generation models is changing rapidly. For example, last year's leader, DALL·E 2, is now chosen in fewer than 25% of its arena comparisons, placing it among the lowest-ranked models.
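For a rough sense of what a selection rate below 25% means on an Elo scale, the standard Elo expected-score formula can be inverted to give a rating gap. This is a sketch that assumes the win rate is measured against a single opponent of fixed rating. Real arena matchups mix many opponents, so the result is only an order-of-magnitude guide, and the function name is hypothetical.

```python
import math


def elo_gap_from_win_rate(win_rate):
    """Rating difference implied by a win rate under the standard Elo model.

    Inverts expected = 1 / (1 + 10 ** (-gap / 400)).
    A negative result means the model is rated below its opponent.
    """
    if not 0.0 < win_rate < 1.0:
        raise ValueError("win_rate must be strictly between 0 and 1")
    return 400.0 * math.log10(win_rate / (1.0 - win_rate))


gap = elo_gap_from_win_rate(0.25)
print(f"A 25% win rate implies a gap of about {gap:.0f} Elo points")
```

Under these assumptions, a model preferred in a quarter of its comparisons sits roughly 190 points below its typical opponent.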

Public Participation

Artificial Analysis encourages public participation in this evaluation. Users can access the leaderboard on Hugging Face and participate in the ranking process through Image Arena. After completing 30 image selections, participants can view personalized model rankings, gaining insights tailored to their preferences.

This initiative marks a significant step towards understanding and improving AI image generation models. By leveraging human preferences and rigorous crowdsourcing methods, the platform provides valuable insights into the comparative performance of leading image models. As the field continues to evolve, such platforms will play a crucial role in guiding the future development and innovation of AI-driven image generation.

Leaderboard link: https://huggingface.co/spaces/ArtificialAnalysis/Text-to-Image-Leaderboard