Alibaba, the Chinese tech giant, has topped the global open-source large model rankings with the instruction-tuned version of its latest open-source release, Qwen-2-72B.

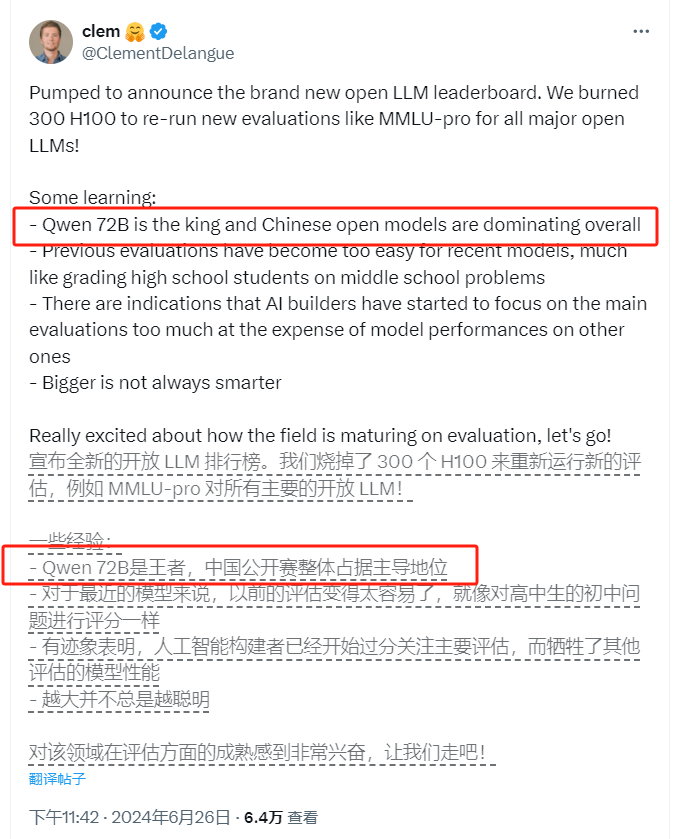

According to re-evaluation data from Hugging Face, the well-known global open-source platform, Qwen-2 outperformed Meta's Llama-3 and Mixtral from France's Mistral AI, showcasing China's leadership in the open-source large model field.

The re-evaluation was intended to provide a more objective assessment, with a harder evaluation process designed to reveal each model's true capabilities. The data shows Qwen-2 in first place, followed by the instruction-tuned Llama-3-70B in second and the instruction-tuned Mixtral-8x22B in fourth. Microsoft's Phi-3-Medium-4K (14B) ranks fifth, demonstrating the potential of models with fewer parameters.

Notably, Chinese company 01.AI's Yi-1.5-34B-Chat ranks sixth, and Cohere's open-source Command R+ (104B) ranks seventh. Overall, Chinese open-source models occupy four of the top ten spots, demonstrating strong competitiveness.

The results have drawn attention and discussion among industry insiders. Experts say China's competitiveness in the open-source large model field has been evident for some time, and Qwen-2's performance is particularly impressive. Compared with well-known international closed-source large models, Qwen-2 also showed remarkable strength, making it the only Chinese model to enter the top ten of the U.S. evaluation.

Key Points:

⭐ Alibaba's Qwen-2-72B instruction-tuned version tops the global open-source large model rankings

⭐ Chinese open-source models occupy four spots in the top ten, maintaining a leading position

⭐ Qwen-2 demonstrates strong competitiveness against international closed-source large models