In the field of artificial intelligence, code generation and review have long been crucial areas of technological progress. OpenAI has recently introduced CriticGPT, a model based on GPT-4 that is designed specifically to critique code generated by ChatGPT and identify errors in it. The tool marks a significant step forward in using AI to help oversee and check the output of AI systems.

Despite the notable achievements of large language models (LLMs) like ChatGPT in code generation, the quality and correctness of their outputs remain uncertain. CriticGPT aims to address this gap: it writes natural-language critiques that point out problems in the code, helping human experts assess it more accurately and catch errors more efficiently.

Exceptional Performance in Error Detection

CriticGPT is adept at identifying errors in code, whether they are syntactic, logical, or security-related. In the researchers' evaluations, CriticGPT caught more bugs than human contractors paid to review code, a striking result for the field of code review.

Reducing Hallucinations Through Human-AI Collaboration

CriticGPT also helps reduce hallucinated errors, that is, reported problems that do not actually exist in the code. When human experts work together with CriticGPT, the team catches about as many bugs as the model does on its own while hallucinating fewer problems than the model alone. This "human-machine collaborative team" approach offers a new perspective on error detection.

Key Features of CriticGPT

Error Detection: CriticGPT analyzes code thoroughly, identifying and reporting a variety of errors while aiming to keep hallucinated errors to a minimum.

Critique Generation: Provides detailed error analysis and suggestions for improvement, helping teams understand issues in depth and resolve them.

Enhanced Training Effectiveness: Works alongside human trainers to improve the quality and coverage of their critiques.

Reduction of False Errors: Uses a strategy called Force Sampling Beam Search (FSBS) to cut down on spurious or nitpicky error reports.

Model Training and Optimization: CriticGPT's performance is continuously improved through reinforcement learning from human feedback (RLHF).

Precise Search and Evaluation: Balances thoroughness in finding issues against the risk of false positives, producing accurate error reports.

Enhanced Human-AI Collaboration: Acts as an auxiliary tool to improve evaluation efficiency and accuracy.
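As we understand the paper's description, FSBS works roughly like this: the model samples several candidate critiques, each forced to quote ("highlight") specific parts of the code, a reward model scores them, and the final pick maximizes the reward-model score plus a length modifier multiplied by the number of highlights. The Python sketch below covers only that last selection step, with no repeated beam rounds. The `Critique` class, the `fsbs_select` function, and all scores are hypothetical, and the candidates are assumed to have already been sampled and scored.

```python
from dataclasses import dataclass


@dataclass
class Critique:
    """A candidate critique (hypothetical structure for illustration)."""
    text: str
    highlights: list[str]  # code spans the critique quotes and comments on
    rm_score: float        # reward-model score, assumed to be precomputed


def fsbs_select(candidates: list[Critique], length_modifier: float) -> Critique:
    """Return the candidate maximizing rm_score + length_modifier * num_highlights."""
    if not candidates:
        raise ValueError("at least one candidate critique is required")
    return max(
        candidates,
        key=lambda c: c.rm_score + length_modifier * len(c.highlights),
    )


# Made-up candidates: a higher reward score versus more highlighted code spans.
candidates = [
    Critique("flags one clear bug", ["line 3"], rm_score=0.9),
    Critique("flags three issues", ["line 3", "line 7", "line 9"], rm_score=0.7),
    Critique("flags five issues, some nitpicks", ["l1", "l2", "l3", "l4", "l5"], rm_score=0.2),
]

if __name__ == "__main__":
    for modifier in (0.0, 0.15, 0.5):
        print(modifier, "->", fsbs_select(candidates, modifier).text)
    # 0.0 -> flags one clear bug
    # 0.15 -> flags three issues
    # 0.5 -> flags five issues, some nitpicks
```

The length modifier is the knob behind the balance described above: raising it favors critiques that flag more of the code (more comprehensive, but more prone to nitpicks and hallucinated issues), while lowering it leans on the reward model's judgment and yields shorter, more precise critiques.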

Technical Approach and Experimental Results

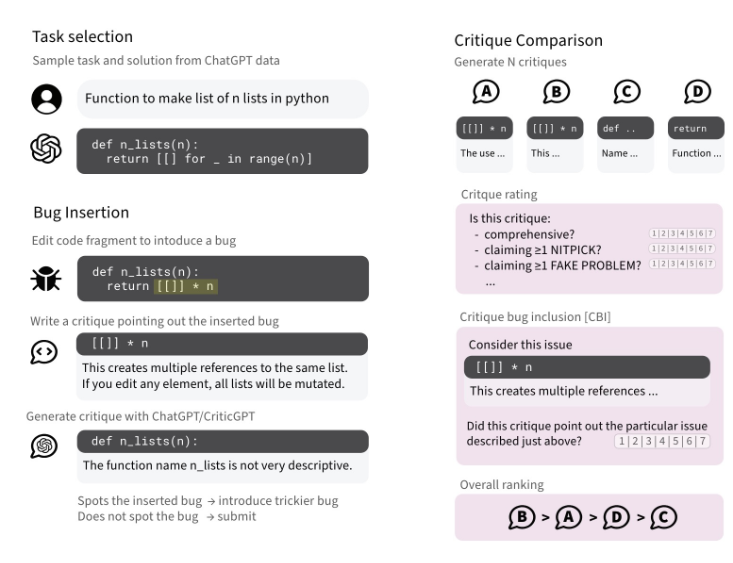

CriticGPT is trained with reinforcement learning from human feedback (RLHF) on a large set of inputs that contain errors. To build this data, AI trainers deliberately inserted errors into code written by ChatGPT and then wrote example feedback as if they had discovered those errors themselves. In experiments, trainers preferred CriticGPT's critiques over ChatGPT's, rating them higher in quality and more effective at identifying and resolving issues.
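The paper refers to this bug-insertion process as "tampering": a trainer modifies a model-written answer to introduce a subtle bug and writes a reference critique describing it, which makes it possible to measure how often a critique catches the inserted bug. The Python sketch below models that bookkeeping under heavy simplification. The `TamperedExample`, `tamper`, `caught`, and `catch_rate` names are hypothetical, a bug is inserted by replacing a single line, and a critique counts as catching the bug if it flags a tampered line number. In the paper, humans judge whether a critique caught the bug, so the line-overlap check here is only a stand-in.

```python
from dataclasses import dataclass


@dataclass
class TamperedExample:
    """Hypothetical record for one deliberately bugged code sample."""
    original_code: str
    tampered_code: str
    bug_lines: set[int]       # 1-based line numbers the trainer changed
    reference_critique: str   # trainer-written description of the inserted bug


def tamper(code: str, line_no: int, new_line: str, note: str) -> TamperedExample:
    """Replace one line of `code` to insert a bug and record where it was put."""
    lines = code.splitlines()
    if not 1 <= line_no <= len(lines):
        raise ValueError(f"line_no must be between 1 and {len(lines)}")
    lines[line_no - 1] = new_line
    return TamperedExample(code, "\n".join(lines), {line_no}, note)


def caught(flagged_lines: set[int], example: TamperedExample) -> bool:
    """Crude proxy: the critique 'catches' the bug if it flags a tampered line."""
    return bool(flagged_lines & example.bug_lines)


def catch_rate(results: list[tuple[set[int], TamperedExample]]) -> float:
    """Fraction of examples whose inserted bug was flagged by the critique."""
    if not results:
        return 0.0
    return sum(caught(flagged, ex) for flagged, ex in results) / len(results)


ex1 = tamper(
    "def mean(xs):\n    return sum(xs) / len(xs)",
    2,
    "    return sum(xs) / (len(xs) - 1)",
    "Divides by n - 1 instead of n, so it no longer computes the mean.",
)
ex2 = tamper(
    "def is_even(n):\n    return n % 2 == 0",
    2,
    "    return n % 2 == 1",
    "Comparison flipped: returns True for odd numbers.",
)

# Lines a (hypothetical) critic flagged for each example.
results = [({2}, ex1), ({1}, ex2)]

if __name__ == "__main__":
    print(catch_rate(results))  # 0.5
```

Storing the bug's location and a reference description alongside the tampered code is what makes a catch rate measurable here: for naturally occurring bugs, the ground truth is not known in advance.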

This technology not only improves the accuracy of code review but also opens up new possibilities for AI-assisted oversight and continuous learning. As CriticGPT continues to be refined and applied, it is likely to play a significant role in improving code quality and driving technological progress.

Paper: https://cdn.openai.com/llm-critics-help-catch-llm-bugs-paper.pdf