Recently, Sun Yat-sen University and the BytePlus Digital Human Team attracted wide attention with MMTryon, a new virtual try-on framework. Given a few garment images and a short text instruction describing how they should be worn, MMTryon generates an image of a model wearing those garments in a single step, with notably high image quality.

Imagine selecting a coat, a pair of pants, and a bag, and then seeing them appear on the target figure automatically. The figure can be a photo of a real person or a comic character; the feature works on both.

MMTryon goes further than that. For single-garment try-on, it relies on a clothing encoder trained on a large amount of data, which lets it handle complex try-on scenarios and any clothing style. For combined (multi-garment) try-on, it removes the traditional algorithms' reliance on detailed clothing segmentation and produces realistic, natural results from a single text instruction.

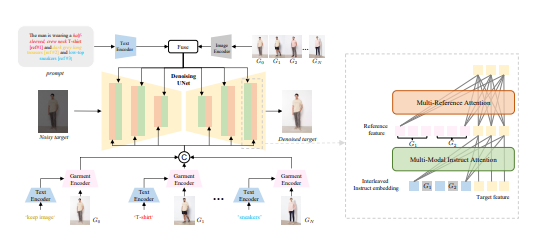

In benchmark tests, MMTryon set a new state of the art (SOTA). The research team also developed a multi-modal, multi-reference attention mechanism that makes the try-on result more precise and more flexible. Previous virtual try-on solutions could either handle only a single garment or offered no control over how the garments are worn; MMTryon addresses both limitations.
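The article does not describe how the multi-reference attention is implemented. As a rough illustration of the general idea only, the sketch below shows plain scaled dot-product attention in which target-image tokens (queries) attend over a shared pool built by concatenating tokens from several references, for example a coat image, a pants image, and a text instruction. It is an assumption-laden toy, not MMTryon's method: there are no learned projections, and the names `multi_reference_attention` and `shares` are hypothetical. It also reports how much attention each query gives to each reference.

```python
import math
from typing import List, Tuple

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def softmax(scores: List[float]) -> List[float]:
    # Subtract the max for numerical stability.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def multi_reference_attention(
    queries: List[Vector], references: List[List[Vector]]
) -> Tuple[List[Vector], List[List[float]]]:
    """Hypothetical sketch: each query attends over tokens from ALL references.

    Keys and values are the reference tokens themselves (no learned
    projections), which is a simplifying assumption.
    Returns (outputs, shares), where shares[q][r] is the total attention
    weight query q places on reference r.
    """
    pool: List[Vector] = []
    owner: List[int] = []  # which reference each pooled token came from
    for r, ref in enumerate(references):
        for tok in ref:
            pool.append(tok)
            owner.append(r)

    d = len(queries[0])
    scale = 1.0 / math.sqrt(d)
    outputs: List[Vector] = []
    shares: List[List[float]] = []
    for q in queries:
        weights = softmax([dot(q, k) * scale for k in pool])
        # Output is a weighted average of the pooled value vectors.
        outputs.append([sum(w * v[i] for w, v in zip(weights, pool)) for i in range(d)])
        share = [0.0] * len(references)
        for w, r in zip(weights, owner):
            share[r] += w
        shares.append(share)
    return outputs, shares


if __name__ == "__main__":
    coat = [[1.0, 0.0], [1.0, 0.0]]
    pants = [[0.0, 1.0]]
    text = [[0.0, 0.0]]
    out, shares = multi_reference_attention([[4.0, 0.0]], [coat, pants, text])
    print(out, shares)
```

Because all references share one key/value pool, adding another garment only adds tokens to the pool; nothing else in the computation has to change.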

MMTryon combines a highly expressive clothing encoder with a novel, scalable data generation pipeline. This lets it produce high-quality virtual try-on results directly from text and multiple try-on objects, without any segmentation.

Extensive experiments on open datasets and complex scenarios show that MMTryon outperforms existing SOTA methods both qualitatively and quantitatively. The research team also pre-trained a clothing encoder that uses text as a query to activate the features of the image region the text refers to, which removes the dependency on clothing segmentation.
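To make "text as a query" concrete, here is a minimal sketch of one common way such a mechanism can work, assuming a single text embedding and a list of image patch features in the same space: the text embedding scores every patch, a softmax turns the scores into a soft attention map, and the garment feature is the attention-weighted average of the patches. The soft map plays the role a hard segmentation mask would otherwise play. This is a generic cross-attention pooling illustration, not the encoder described in the paper; `text_query_pooling` and `temperature` are hypothetical names.

```python
import math
from typing import List, Tuple

Vector = List[float]


def text_query_pooling(
    text_emb: Vector, patch_feats: List[Vector], temperature: float = 1.0
) -> Tuple[List[float], Vector]:
    """Hypothetical sketch: pool patch features using a text embedding as the query.

    Returns (attn, pooled): attn is a soft map over patches (sums to 1),
    pooled is the attention-weighted average of the patch features.
    """
    d = len(text_emb)
    # Scaled dot-product score between the text query and each patch.
    scores = [
        sum(t * p for t, p in zip(text_emb, patch)) / (math.sqrt(d) * temperature)
        for patch in patch_feats
    ]
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    z = sum(exps)
    attn = [e / z for e in exps]
    pooled = [sum(a * patch[i] for a, patch in zip(attn, patch_feats)) for i in range(d)]
    return attn, pooled


if __name__ == "__main__":
    # Toy features: first two patches look like "upper body", last two like "lower body".
    patches = [[1.0, 0.0], [1.0, 0.0], [0.0, 1.0], [0.0, 1.0]]
    coat_query = [5.0, 0.0]  # assumed embedding for a text such as "the coat"
    attn, pooled = text_query_pooling(coat_query, patches)
    print([round(a, 3) for a in attn], [round(x, 3) for x in pooled])
```

With these toy values, most of the attention mass lands on the two "upper body" patches, so the pooled feature is dominated by them even though no mask was ever supplied.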

To train combined (multi-garment) try-on, the research team also proposed a data augmentation pipeline based on large models and used it to build an augmented dataset of about 1 million samples, so that MMTryon can produce realistic virtual try-on results across all types of outfits.

MMTryon is a cutting-edge tool for the fashion industry: beyond one-click try-on, it can also serve as an outfit design assistant that helps users choose what to wear. It outperforms the baseline models on both quantitative metrics and human evaluation.

Paper link: https://arxiv.org/abs/2405.00448