DeepSeek AI, a prominent Chinese artificial intelligence research lab, has followed its open-source language model DeepSeek-R1 with new work on large language models (LLMs). The lab recently introduced a technique called Self-Principled Critique Tuning (SPCT), aimed at building more general-purpose and scalable AI reward models (RMs). The technique is intended to improve how well AI systems understand and respond in open-ended tasks and complex environments, which could support more capable AI applications.

Background: Reward Models—The "Guiding Light" of Reinforcement Learning

Reinforcement learning (RL) has become a crucial technology in developing advanced LLMs. RL guides model fine-tuning through feedback signals, enabling the model to generate higher-quality responses. In this process, reward models play a vital role, acting like a "judge" that evaluates the LLM's output and assigns scores or "rewards." These reward signals steer the RL process, prompting the LLM to learn to produce more useful content.

However, current reward models face numerous limitations. They often excel only in narrow domains with clear rules or easily verifiable answers. For example, the strong performance of models like DeepSeek-R1 on math and programming problems benefits from the clearly defined "correct answers" in these areas. Building an effective reward model for complex, open-ended, or subjective general-domain queries, by contrast, remains a significant challenge. DeepSeek AI researchers noted in their paper: "General-purpose reward models need to generate high-quality rewards beyond specific domains, where reward criteria are more diverse and complex, and often lack clear references or standard answers."

SPCT: Addressing Four Challenges to Build General-Purpose Reward Models

To overcome the limitations of existing reward models, DeepSeek AI researchers proposed the innovative SPCT technique. They highlighted four key challenges in building general-purpose reward models:

- Input flexibility: Reward models must handle various input types and simultaneously evaluate one or more responses.

- Accuracy: In diverse, complex, and often ambiguous domains, reward models must generate accurate reward signals.

- Inference-time scalability: Reward models should produce higher-quality rewards when allocated more computational resources for inference.

- Learning scalable behaviors: To enable efficient scalability at inference time, reward models need to learn behaviors that improve performance with increased computational resources.

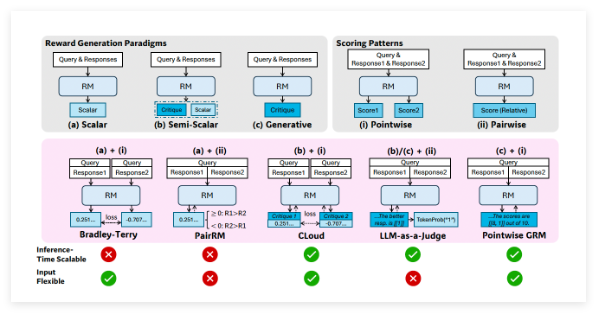

The researchers noted that pointwise generative reward modeling (GRM), where the model generates textual critiques and derives scores from them, can provide the flexibility and scalability needed for general tasks. Preliminary experiments by the DeepSeek team on models like GPT-4o and Gemma-2-27B showed that "certain principles can guide generative reward models to generate rewards within appropriate standards, thus improving reward quality." This finding inspired them to pursue inference-time scalability by scaling the generation of high-quality principles and accurate critiques.

The Core Mechanism of SPCT: Self-Principled Critique and Tuning

Based on these findings, the DeepSeek team developed SPCT, which trains a GRM to dynamically generate principles and critiques based on the query and response. The researchers argue that principles should be "part of reward generation, not a preprocessing step." This allows the GRM to generate principles on the fly based on the task it's evaluating and then generate critiques based on those principles.
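
To make the idea of "deriving scores from a textual critique" concrete, here is a minimal Python sketch. It assumes a hypothetical output convention in which the GRM writes its principles, then a critique, then a final line of the form `Scores: [a, b, ...]` with one integer per response. The format, the function name `extract_pointwise_scores`, and the sample text are illustrative assumptions, not the template used in the paper.

```python
import re
from typing import List

# Hypothetical output convention: the critique ends with "Scores: [a, b, ...]".
SCORE_LINE = re.compile(r"Scores:\s*\[([^\]]*)\]")


def extract_pointwise_scores(critique: str, num_responses: int) -> List[int]:
    """Return one integer score per response, parsed from a generated critique."""
    match = SCORE_LINE.search(critique)
    if match is None:
        raise ValueError("no 'Scores: [...]' line found in critique")
    scores = [int(tok) for tok in match.group(1).split(",") if tok.strip()]
    if len(scores) != num_responses:
        raise ValueError(f"expected {num_responses} scores, got {len(scores)}")
    return scores


# Illustrative GRM output: principles first, then a critique, then scores.
sample_output = """Principles:
1. Factual accuracy (weight 60%)
2. Clarity of explanation (weight 40%)
Critique: Response 1 is accurate and clear. Response 2 contains an error.
Scores: [8, 3]"""

print(extract_pointwise_scores(sample_output, num_responses=2))  # [8, 3]
```

Because the same parser works for one response or several, a pointwise format like this is one way to get the input flexibility described earlier.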

SPCT comprises two main phases:

- Rejective fine-tuning: This phase trains the GRM to generate principles and critiques in the correct format for various input types. The model generates principles, critiques, and rewards for a given query/response. Only trajectories where the predicted reward aligns with the ground truth (e.g., correctly identifying a better response) are accepted; otherwise, they are rejected. This process is repeated, and the model is fine-tuned on the filtered examples to improve its principle/critique generation capabilities.

- Rule-based RL: In this phase, the model undergoes further fine-tuning through outcome-based reinforcement learning. The GRM generates principles and critiques for each query, and the reward signal is calculated based on simple accuracy rules (e.g., whether the known best response was selected). The model is then updated, encouraging the GRM to learn how to dynamically and scalably generate effective principles and accurate critiques.
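
The two phases share one simple check: did the scores the GRM produced single out the known best response? The Python sketch below illustrates that check, the rejection filter built on it, and an outcome-based reward. It covers only the multi-response case, and the helper names, the dictionary layout of a trajectory, and the +1/-1 reward values are assumptions made for illustration rather than the authors' implementation.

```python
from typing import Dict, List, Sequence


def picks_best(scores: Sequence[float], best_index: int) -> bool:
    """True when the known-best response gets the strictly highest score."""
    top = scores[best_index]
    return all(top > s for i, s in enumerate(scores) if i != best_index)


def rejective_filter(trajectories: List[Dict], best_index: int) -> List[Dict]:
    """Phase 1: keep only sampled trajectories that agree with the ground truth."""
    return [t for t in trajectories if picks_best(t["scores"], best_index)]


def rule_based_reward(scores: Sequence[float], best_index: int) -> float:
    """Phase 2: outcome reward for RL (assumed +1 if correct, -1 otherwise)."""
    return 1.0 if picks_best(scores, best_index) else -1.0


# Three sampled trajectories for one query whose best response is index 0.
trajectories = [
    {"critique": "sample A", "scores": [8, 3]},  # correct preference
    {"critique": "sample B", "scores": [4, 6]},  # wrong preference
    {"critique": "sample C", "scores": [5, 5]},  # tie, treated as incorrect
]

accepted = rejective_filter(trajectories, best_index=0)
print([t["critique"] for t in accepted])      # ['sample A']
print(rule_based_reward([4, 6], best_index=0))  # -1.0
```

In this sketch the accepted trajectories would become fine-tuning data, while the scalar reward would feed whichever RL algorithm updates the GRM.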

To address the inference-time scalability challenge, the researchers run the GRM multiple times on the same input, generating diverse sets of principles and critiques. The final reward is determined through voting, that is, by aggregating the scores from all samples. Because each sample may emphasize different principles, the aggregate reflects a broader range of perspectives, so the final judgment becomes more accurate and nuanced as more compute is spent on sampling.
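
As a rough illustration of voting, the Python sketch below assumes that aggregation is a plain per-response sum over the sampled scores. The function name `vote` and the example numbers are hypothetical.

```python
from typing import List, Sequence


def vote(sampled_scores: Sequence[Sequence[int]]) -> List[int]:
    """Sum the per-response scores across all sampled critiques."""
    if not sampled_scores:
        raise ValueError("need at least one sample")
    width = len(sampled_scores[0])
    if any(len(row) != width for row in sampled_scores):
        raise ValueError("every sample must score the same number of responses")
    # zip(*rows) groups the scores column by column, one column per response.
    return [sum(column) for column in zip(*sampled_scores)]


# Three independent GRM samples, each scoring the same two responses.
samples = [
    [7, 4],
    [5, 6],
    [8, 3],
]

totals = vote(samples)
print(totals)                     # [20, 13]
print(totals.index(max(totals)))  # 0, the preferred response
```

Summing also widens the range of possible final scores (here up to three times the single-sample range), which gives a finer-grained reward than any one sample can.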

Furthermore, to address potential issues with low-quality or biased generated principles and critiques, the researchers introduced a "meta RM": a separate, lightweight scalar RM that predicts whether the principles and critiques generated by the main GRM are likely to lead to the correct final reward. During inference, the meta RM scores the generated samples and filters out low-quality judgments before voting, further enhancing scalability.
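
The filtering step can be sketched in Python as follows. The sketch assumes the meta RM has already assigned each sample a scalar quality score, keeps the top `keep` samples, and then sums their per-response scores. The function name `meta_rm_vote`, the `keep` parameter, and all numbers are illustrative assumptions.

```python
from typing import List, Sequence


def meta_rm_vote(
    sampled_scores: Sequence[Sequence[int]],
    meta_scores: Sequence[float],
    keep: int,
) -> List[int]:
    """Vote using only the `keep` samples the meta RM rates highest."""
    if len(sampled_scores) != len(meta_scores):
        raise ValueError("need exactly one meta score per sample")
    if not 1 <= keep <= len(sampled_scores):
        raise ValueError("keep must be between 1 and the number of samples")
    # Rank sample indices by meta RM score, highest first.
    ranked = sorted(range(len(meta_scores)), key=lambda i: meta_scores[i], reverse=True)
    kept = ranked[:keep]
    num_responses = len(sampled_scores[0])
    return [sum(sampled_scores[i][j] for i in kept) for j in range(num_responses)]


samples = [
    [7, 4],
    [2, 9],  # an outlier judgment
    [8, 3],
]
meta_scores = [0.9, 0.1, 0.8]  # hypothetical meta RM outputs

print(meta_rm_vote(samples, meta_scores, keep=2))  # [15, 7]
```

With the low-rated sample dropped, the outlier no longer pulls the totals toward the second response.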

DeepSeek-GRM's Superior Performance

Researchers applied SPCT to Google's open-source model Gemma-2-27B, creating DeepSeek-GRM-27B. In multiple benchmark tests, they evaluated it against several strong baseline RMs (including LLM-as-a-Judge, scalar RMs, and semi-scalar RMs) and publicly available models (like GPT-4o and Nemotron-4-340B-Reward). Results showed that DeepSeek-GRM-27B outperformed baseline methods trained on the same data.

Compared to standard fine-tuning, SPCT significantly improved reward quality and, more importantly, inference-time scalability. When inference was scaled by generating more samples, DeepSeek-GRM-27B's performance improved substantially, even surpassing larger models like Nemotron-4-340B-Reward and GPT-4o. Adding the meta RM improved scaling further, and filtering judgments with it produced the best results. Researchers noted: "With larger-scale sampling, DeepSeek-GRM can make more accurate judgments based on more diverse principles and output more refined rewards." Interestingly, compared to scalar RMs that perform well on verifiable tasks but poorly elsewhere, SPCT exhibited less bias across different domains.

More general-purpose and scalable reward models hold considerable potential for enterprise-level AI applications. Areas that could benefit include creative tasks and applications where models must adapt to dynamic environments, such as evolving customer preferences.

Despite these results, DeepSeek-GRM still faces challenges on purely verifiable tasks and is less efficient than non-generative RMs. The DeepSeek team stated that future work will focus on improving efficiency and deeper integration. They concluded: "Future directions may include integrating GRM into online RL processes as a general-purpose interface for reward systems, exploring inference-time co-scaling with policy models, or as a robust offline evaluator for foundation models."

Paper: https://arxiv.org/abs/2504.02495