Researchers from Apple and the Swiss Federal Institute of Technology in Lausanne (EPFL) have jointly open-sourced 4M-21, a large-scale multimodal vision model. Unlike models optimized for a single task or data type, 4M-21 is designed to be general-purpose. With only 3 billion parameters, it handles image classification, object detection, semantic segmentation, instance segmentation, depth estimation, surface normal estimation, and more.

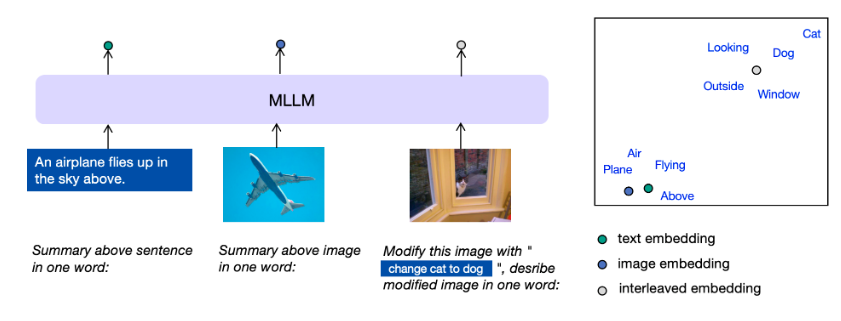

The core of the model is its discrete tokenization technique, which converts data from different modalities into a unified format: sequences of discrete tokens. Image data, neural network feature maps, vectors, structured data, and data represented as text can all be transformed into token sequences that the model can process. This conversion simplifies training and lays the foundation for multimodal learning and processing.
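As a rough illustration of the idea above, the toy sketch below maps two modalities into one sequence of integer token IDs. It is not the 4M-21 tokenizer: the codebook, the vocabulary, and the function names `quantize`, `tokenize_text`, and `to_unified_sequence` are all hypothetical, and a nearest-neighbour lookup in a fixed codebook stands in for whatever learned tokenizers the model actually uses.

```python
from typing import Dict, List, Sequence

# Hypothetical 4-entry codebook for 2-dimensional "patch" vectors.
CODEBOOK = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (1.0, 1.0)]

# Hypothetical text vocabulary; index 0 is reserved for unknown words.
VOCAB = {"<unk>": 0, "a": 1, "red": 2, "car": 3}


def quantize(vector: Sequence[float], codebook: Sequence[Sequence[float]]) -> int:
    """Return the index of the codebook entry closest to `vector` (squared L2)."""
    def sq_dist(a: Sequence[float], b: Sequence[float]) -> float:
        return sum((x - y) ** 2 for x, y in zip(a, b))

    return min(range(len(codebook)), key=lambda i: sq_dist(vector, codebook[i]))


def tokenize_text(text: str, vocab: Dict[str, int]) -> List[int]:
    """Map whitespace-separated words to IDs, falling back to <unk>."""
    return [vocab.get(word, vocab["<unk>"]) for word in text.lower().split()]


def to_unified_sequence(
    image_tokens: Sequence[int], text_tokens: Sequence[int], image_vocab_size: int
) -> List[int]:
    """Concatenate both modalities, shifting text IDs so the ID ranges do not collide."""
    return list(image_tokens) + [image_vocab_size + t for t in text_tokens]


if __name__ == "__main__":
    patches = [(0.1, 0.2), (0.9, 0.8), (0.2, 0.9)]  # made-up continuous features
    image_tokens = [quantize(p, CODEBOOK) for p in patches]
    text_tokens = tokenize_text("a red car", VOCAB)
    print(to_unified_sequence(image_tokens, text_tokens, len(CODEBOOK)))
```

Once every modality is expressed as integers from a shared ID space, a single sequence model can consume them without modality-specific input layers, which is the property the paragraph above describes.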

Product Access: https://github.com/apple/ml-4m/

During training, 4M-21 learns across modalities through masked modeling. It randomly masks some of the tokens in the input sequence and then predicts the masked tokens from the remaining unmasked ones. This forces the model to learn the statistical structure and latent relationships in the input data, capturing what the modalities have in common and how they interact. Masked modeling improves the model's generalization ability as well as its accuracy on generative tasks.
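The masking step described above can be sketched in a few lines. This is a simplified illustration under stated assumptions, not the 4M-21 training code: `MASK_ID`, `mask_tokens`, `uniform_predictions`, and `masked_loss` are hypothetical names, the mask ratio is arbitrary, and a uniform guess stands in for a real model's predictions.

```python
import math
import random
from typing import Dict, Iterable, List, Sequence, Tuple

MASK_ID = -1  # hypothetical placeholder ID for a hidden token


def mask_tokens(
    tokens: Sequence[int], mask_ratio: float, rng: random.Random
) -> Tuple[List[int], Dict[int, int]]:
    """Hide a random subset of tokens.

    Returns the corrupted input and a {position: original_token} map of targets.
    """
    if not tokens:
        raise ValueError("tokens must not be empty")
    n_mask = min(len(tokens), max(1, round(len(tokens) * mask_ratio)))
    positions = rng.sample(range(len(tokens)), n_mask)
    inputs = list(tokens)
    targets: Dict[int, int] = {}
    for pos in positions:
        targets[pos] = tokens[pos]
        inputs[pos] = MASK_ID
    return inputs, targets


def uniform_predictions(
    positions: Iterable[int], vocab_size: int
) -> Dict[int, Dict[int, float]]:
    """Stand-in for a model: assign equal probability to every token ID."""
    return {p: {t: 1.0 / vocab_size for t in range(vocab_size)} for p in positions}


def masked_loss(
    predictions: Dict[int, Dict[int, float]], targets: Dict[int, int]
) -> float:
    """Mean negative log-likelihood, computed over the masked positions only."""
    total = sum(-math.log(predictions[pos][tok]) for pos, tok in targets.items())
    return total / len(targets)


if __name__ == "__main__":
    rng = random.Random(0)
    sequence = [0, 3, 2, 5, 6, 7]  # made-up unified token sequence
    inputs, targets = mask_tokens(sequence, mask_ratio=0.5, rng=rng)
    preds = uniform_predictions(targets.keys(), vocab_size=8)
    print(inputs, targets, masked_loss(preds, targets))
```

Because the loss is computed only at masked positions, the model gets no credit for copying visible tokens; it has to infer the hidden ones from context, which may come from a different modality in the same sequence.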

The researchers evaluated 4M-21 on image classification, object detection, semantic segmentation, instance segmentation, depth estimation, surface normal estimation, and 3D human pose estimation. The results show that 4M-21's multimodal processing capabilities are competitive with current state-of-the-art models across these tasks.

Key Points:

- Apple and the Swiss Federal Institute of Technology in Lausanne (EPFL) have jointly open-sourced 4M-21, a large-scale, general-purpose multimodal vision model.

- 4M-21 offers a range of functionalities including image classification, object detection, semantic segmentation, instance segmentation, depth estimation, surface normal estimation, and more.

- The key technology of 4M-21 is discrete tokenization, which converts data from different modalities into a unified format of token sequences.