Researchers from Apple and Meta AI have jointly introduced LazyLLM, a technique designed to make large language models (LLMs) more efficient at inference over long contexts.

Current LLMs are often slow when processing long prompts, especially during the pre-filling phase. In this phase the model computes the key-value (KV) cache for every prompt token before it can generate the first output token. The main reason for the slowdown is that in the transformer architecture, the cost of computing attention grows quadratically with the number of tokens in the prompt. For example, with the Llama 2 model, generating the first token can take 21 times as long as each subsequent decoding step and account for 23% of total generation time.
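To make the quadratic growth concrete, the sketch below counts multiply-adds in the two attention matrix products only (the scores QK^T and the weighted sum over V). It is a simplified model, not taken from the paper: it ignores the projection and feed-forward layers, the causal mask, and hardware effects, so it illustrates the scaling trend rather than reproducing the measured 21× figure. The function names and the hidden size of 4096 (chosen to resemble a 7B-parameter model) are assumptions for illustration.

```python
def attention_flops(num_queries: int, num_keys: int, d_model: int) -> int:
    """Multiply-adds for QK^T plus (weights)V, summed over all heads.

    Each of the two products costs num_queries * num_keys * d_model
    multiply-adds when the heads together span d_model dimensions.
    """
    return 2 * num_queries * num_keys * d_model


def prefill_attention_cost(prompt_len: int, d_model: int) -> int:
    # Pre-filling: every prompt token is a query against every prompt token
    # (the causal mask, ignored here, would roughly halve this).
    return attention_flops(prompt_len, prompt_len, d_model)


def decode_step_attention_cost(context_len: int, d_model: int) -> int:
    # Decoding with a KV cache: one new query against the cached keys.
    return attention_flops(1, context_len, d_model)


if __name__ == "__main__":
    d_model = 4096  # hypothetical hidden size
    for n in (1_000, 2_000, 4_000, 8_000):
        prefill = prefill_attention_cost(n, d_model)
        decode = decode_step_attention_cost(n, d_model)
        print(f"n={n:>5}: prefill={prefill:.3e}  "
              f"decode step={decode:.3e}  ratio={prefill // decode}")
```

Doubling the prompt length quadruples the pre-filling attention cost but only doubles the cost of a single decoding step, which is why long prompts hit the first token hardest.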

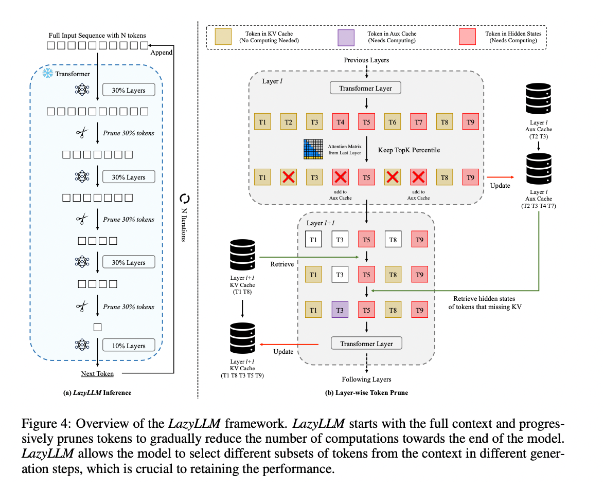

To address this issue, the researchers propose LazyLLM, which accelerates LLM inference by computing the KV cache only for the tokens that are important for predicting the next token. At each layer, LazyLLM scores the importance of every token using the attention scores from the previous layer and prunes the less important ones, so the number of active tokens shrinks progressively and the computational load drops. Unlike static prompt compression, which discards tokens permanently, LazyLLM can bring pruned tokens back in later generation steps when they become relevant, which helps preserve accuracy. To make this recovery cheap, LazyLLM adds an auxiliary cache (Aux Cache) that stores the hidden states of pruned tokens, so they can be recovered efficiently instead of being recomputed from scratch, and pruning does not end up slowing generation down.
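The scoring-and-pruning step and the Aux Cache can be sketched in plain Python. This is an illustrative toy, not the authors' implementation: it assumes a token's importance is the previous layer's attention from the last prompt token to that token, averaged over heads, and that each pruning step keeps a fixed top fraction (`keep_ratio`) of the active tokens. The names `importance_scores`, `select_tokens`, `prune_layer`, and `AuxCache` are hypothetical, and hidden states are plain lists of floats rather than tensors.

```python
from typing import Dict, List, Optional, Sequence, Tuple

Vector = List[float]


def importance_scores(last_query_attn: Sequence[Sequence[float]]) -> List[float]:
    """Score each active token by the attention the last token pays to it.

    last_query_attn[h][k] is the previous layer's attention weight from the
    last token to active token k in head h (hypothetical layout); scores are
    averaged over heads.
    """
    num_heads = len(last_query_attn)
    num_tokens = len(last_query_attn[0])
    return [
        sum(head[k] for head in last_query_attn) / num_heads
        for k in range(num_tokens)
    ]


def select_tokens(scores: Sequence[float], keep_ratio: float) -> List[int]:
    """Indices of the top keep_ratio tokens by score, always including the last."""
    n = len(scores)
    k = max(1, round(n * keep_ratio))
    ranked = sorted(range(n), key=lambda i: scores[i], reverse=True)
    kept = set(ranked[:k])
    kept.add(n - 1)  # the last token is needed to predict the next one
    return sorted(kept)


class AuxCache:
    """Hidden states of pruned tokens, keyed by (layer, token position)."""

    def __init__(self) -> None:
        self._store: Dict[Tuple[int, int], Vector] = {}

    def save(self, layer: int, pos: int, hidden: Vector) -> None:
        self._store[(layer, pos)] = hidden

    def get(self, layer: int, pos: int) -> Optional[Vector]:
        # Assumption: a hit lets a revived token resume at `layer`
        # instead of being recomputed from layer 0.
        return self._store.get((layer, pos))


def prune_layer(layer: int, hidden: Dict[int, Vector],
                last_query_attn: Sequence[Sequence[float]],
                keep_ratio: float, cache: AuxCache) -> Dict[int, Vector]:
    """Keep the important tokens for `layer`; stash the rest in the Aux Cache.

    `hidden` maps token position -> hidden state for the active tokens, and
    the attention columns must follow the same ascending position order.
    """
    positions = sorted(hidden)
    keep_idx = select_tokens(importance_scores(last_query_attn), keep_ratio)
    kept_pos = {positions[i] for i in keep_idx}
    for pos in positions:
        if pos not in kept_pos:
            cache.save(layer, pos, hidden[pos])
    return {pos: hidden[pos] for pos in positions if pos in kept_pos}


if __name__ == "__main__":
    hidden = {0: [1.0, 0.0], 1: [0.0, 1.0], 2: [0.5, 0.5], 3: [1.0, 1.0]}
    # Two heads; each row is the last token's attention over tokens 0..3.
    attn = [[0.5, 0.25, 0.0, 0.25], [0.5, 0.5, 0.0, 0.0]]
    cache = AuxCache()
    kept = prune_layer(1, hidden, attn, keep_ratio=0.5, cache=cache)
    print(sorted(kept))     # [0, 1, 3]
    print(cache.get(1, 2))  # [0.5, 0.5]
```

Token 2 receives no attention from the last token, so it is pruned at layer 1 and its hidden state goes into the cache. In a real model that saved state would be fed back into the same layer if a later decoding step needed the token again; the dictionary lookup here only shows where the state would come from.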

LazyLLM speeds up inference in both the pre-filling and decoding phases. The technique offers three main advantages: it works with any transformer-based LLM, it requires no retraining of the model, and it is effective across a range of language tasks. Because its dynamic pruning strategy skips computation for less important tokens while retaining the most important ones, it substantially reduces the computational load and speeds up generation.
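How much work progressive pruning can save depends on how many tokens remain active at each layer. The toy estimate below uses a hypothetical schedule (the layer indices and keep ratios are illustrative, not the values used in the paper) and a simple cost model that counts only active tokens per layer, ignoring Aux Cache lookups and revived tokens. The function names are likewise assumptions.

```python
from typing import List, Sequence, Tuple


def tokens_per_layer(prompt_len: int, num_layers: int,
                     schedule: Sequence[Tuple[int, float]]) -> List[int]:
    """Active token count at each layer under a progressive pruning schedule.

    `schedule` holds hypothetical (layer, keep_ratio) pairs: from `layer`
    onward, keep_ratio of the original prompt stays active. The ratio never
    grows back, so deeper layers process the same number of tokens or fewer.
    """
    steps = dict(schedule)
    ratio = 1.0
    counts = []
    for layer in range(num_layers):
        if layer in steps:
            ratio = min(ratio, steps[layer])
        counts.append(max(1, round(prompt_len * ratio)))
    return counts


def relative_linear_cost(counts: Sequence[int], prompt_len: int) -> float:
    """Projection/MLP work (linear in token count) relative to no pruning."""
    return sum(counts) / (prompt_len * len(counts))


def relative_attention_cost(counts: Sequence[int], prompt_len: int) -> float:
    """Attention work (quadratic in token count) relative to no pruning."""
    return sum(c * c for c in counts) / (prompt_len ** 2 * len(counts))


if __name__ == "__main__":
    # Hypothetical 32-layer model; ratios are illustrative only.
    counts = tokens_per_layer(1000, 32, [(8, 0.7), (16, 0.4), (24, 0.2)])
    print(f"linear-layer work: {relative_linear_cost(counts, 1000):.3f}")
    print(f"attention work:    {relative_attention_cost(counts, 1000):.4f}")
```

With this schedule the toy model does 57.5% of the linear-layer work and about 42% of the attention work of the unpruned baseline. Pruning pays off more for attention because its cost falls with the square of the number of active tokens, and keeping the early layers intact gives the model a few layers of attention scores to judge importance before any token is dropped.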

In the reported experiments, LazyLLM performs well across multiple language tasks, speeding up time-to-first-token (TTFT) by 2.89 times for Llama 2 and 4.77 times for XGen while keeping accuracy nearly on par with the baseline. On question answering, summarization, and code completion alike, LazyLLM generates faster and strikes a good balance between accuracy and speed. Its progressive pruning strategy, combined with layer-by-layer analysis of token importance, underpins these results.

Paper link: https://arxiv.org/abs/2407.14057

Key points:

🌟 LazyLLM accelerates LLM inference by dynamically selecting important tokens and is especially effective in long-context scenarios.

⚡ The technique significantly improves inference speed, making TTFT up to 4.77 times faster while maintaining accuracy close to the baseline.

🔧 LazyLLM does not require modifications to existing models and is compatible with any transformer-based LLM, making it easy to implement.