As the buzz around Llama 3.1's open-source release continues, OpenAI has stepped in to steal the spotlight. Starting now and running until September 23rd, developers can fine-tune GPT-4o mini with 2 million free training tokens per day. This is not only a generous offer to developers but also a bold push to advance AI technology.

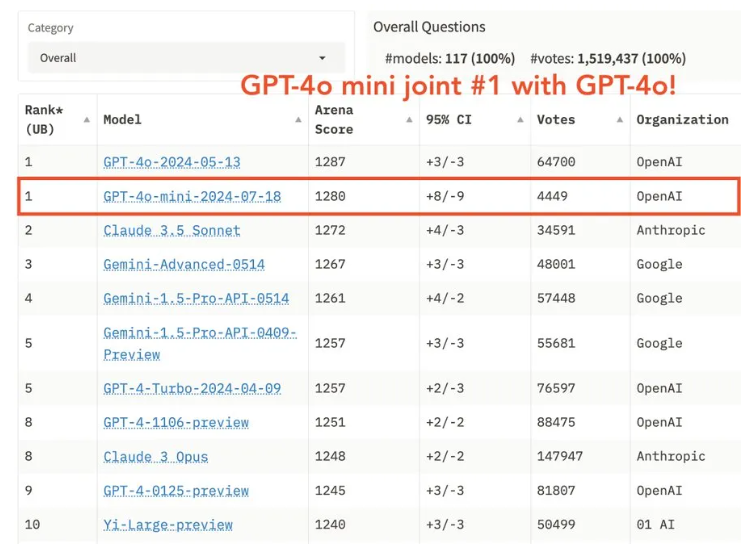

The launch of GPT-4o mini has thrilled countless developers. It shares the top spot with GPT-4o on the LMSYS leaderboard for large models, delivering strong performance at a mere 1/20th of the cost. OpenAI's move is undoubtedly a significant boon for the AI sector.

Developers who received the email are excitedly spreading the word, eager to take advantage of this generous offer. OpenAI has announced that from July 23rd to September 23rd, developers can use 2 million training tokens for free each day. Any excess will be charged at $3 per million tokens.
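To make the pricing concrete, here is a minimal Python sketch of one day's charge under the promotion. The helper names are hypothetical. The code assumes linear per-token billing, and it assumes that billed training tokens equal the tokens in the training file multiplied by the number of epochs, which is how OpenAI's fine-tuning guide describes cost estimation.

```python
# Hypothetical helpers: estimate one day's GPT-4o mini fine-tuning charge under
# the promotion (first 2,000,000 training tokens free each day, the rest billed
# at $3 per 1,000,000 tokens). Linear per-token billing is an assumption.
FREE_TOKENS_PER_DAY = 2_000_000
PRICE_PER_MILLION_USD = 3.00


def training_tokens(dataset_tokens: int, epochs: int) -> int:
    """Billed tokens for a job: dataset tokens times epochs (assumption)."""
    return dataset_tokens * epochs


def daily_charge_usd(tokens_used_today: int) -> float:
    """Charge for one day's training tokens after the free allowance."""
    billable = max(0, tokens_used_today - FREE_TOKENS_PER_DAY)
    return billable / 1_000_000 * PRICE_PER_MILLION_USD


if __name__ == "__main__":
    # 600k-token dataset x 3 epochs = 1.8M tokens, within the free allowance.
    print(daily_charge_usd(training_tokens(600_000, 3)))  # 0.0
    # 5M tokens in one day: 3M billable tokens at $3 per million.
    print(daily_charge_usd(5_000_000))  # 9.0
```

In other words, a job only starts costing money once a single day's training tokens pass the 2 million mark, and each additional million adds $3.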

More affordable: GPT-4o mini's input tokens cost 90% less than GPT-3.5 Turbo's, and its output tokens cost 80% less. Even after the free period ends, training GPT-4o mini will cost half as much as training GPT-3.5 Turbo.

Extended context: GPT-4o mini's training context length is 65k tokens, four times that of GPT-3.5 Turbo, and its inference context length is 128k tokens, eight times that of GPT-3.5 Turbo.
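The training context caps how long a single training example can be. The sketch below, with hypothetical helper names, filters out chat-format examples that are likely too long. It assumes "65k" means 65,536 tokens and estimates token counts with a crude four-characters-per-token rule, so treat it as a rough pre-flight check rather than an exact count from OpenAI's tokenizer.

```python
# Hypothetical pre-flight check: drop training examples that probably exceed
# GPT-4o mini's training context. "65k" is assumed to mean 65,536 tokens, and
# token counts are ROUGHLY estimated at 4 characters per token (a heuristic,
# not OpenAI's tokenizer; per-message formatting overhead is ignored).
TRAINING_CONTEXT_TOKENS = 65_536
CHARS_PER_TOKEN = 4


def estimate_tokens(example: dict) -> int:
    """Rough token estimate for one chat-format example."""
    chars = sum(len(m["content"]) for m in example["messages"])
    return -(-chars // CHARS_PER_TOKEN)  # ceiling division


def fits_context(example: dict, limit: int = TRAINING_CONTEXT_TOKENS) -> bool:
    """True if the estimated length is within the training context."""
    return estimate_tokens(example) <= limit


def filter_examples(examples: list[dict]) -> list[dict]:
    """Keep only examples whose estimated length fits the training context."""
    return [ex for ex in examples if fits_context(ex)]


if __name__ == "__main__":
    short = {"messages": [{"role": "user", "content": "Hi"},
                          {"role": "assistant", "content": "Hello!"}]}
    huge = {"messages": [{"role": "user", "content": "x" * 300_000}]}
    kept = filter_examples([short, huge])
    print(len(kept))  # 1: the ~75,000-token example is dropped
```

For exact counts, a real tokenizer would replace `estimate_tokens`; the filtering logic stays the same.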

Smarter and more capable: GPT-4o mini is smarter than GPT-3.5 Turbo and supports vision (image input), although fine-tuning is currently limited to text.

Fine-tuning for GPT-4o mini is initially available to enterprise customers and to Tier 4 and Tier 5 developers, with access expanding gradually to all usage tiers. OpenAI has also published a fine-tuning guide to help developers get started quickly.
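For readers who want to try it, here is a minimal sketch of preparing text-only training data and launching a job with the official `openai` Python package (v1.x). The helper functions are hypothetical. The chat-format JSONL layout, `client.files.create(..., purpose="fine-tune")`, and `client.fine_tuning.jobs.create(...)` follow OpenAI's documentation, while the snapshot name `gpt-4o-mini-2024-07-18` is an assumption to verify against the guide. Submitting a job requires an `OPENAI_API_KEY` and an account with fine-tuning access.

```python
import json
from pathlib import Path


def to_chat_example(system: str, user: str, assistant: str) -> dict:
    """Build one training example in OpenAI's chat fine-tuning format (text only)."""
    return {
        "messages": [
            {"role": "system", "content": system},
            {"role": "user", "content": user},
            {"role": "assistant", "content": assistant},
        ]
    }


def to_jsonl(examples: list[dict]) -> str:
    """Serialize examples as JSON Lines: one JSON object per line."""
    return "".join(json.dumps(ex, ensure_ascii=False) + "\n" for ex in examples)


def submit_job(path: Path, model: str = "gpt-4o-mini-2024-07-18") -> str:
    """Upload a JSONL file and start a fine-tuning job; returns the job ID.

    Requires `pip install openai` (v1.x) and OPENAI_API_KEY in the environment.
    The default snapshot name is an assumption; check the fine-tuning guide.
    """
    from openai import OpenAI  # imported lazily so the data helpers run without the SDK

    client = OpenAI()
    with path.open("rb") as f:
        uploaded = client.files.create(file=f, purpose="fine-tune")
    job = client.fine_tuning.jobs.create(training_file=uploaded.id, model=model)
    return job.id


if __name__ == "__main__":
    examples = [
        to_chat_example("You are a concise assistant.", "What is 2 + 2?", "4"),
        to_chat_example("You are a concise assistant.", "Capital of France?", "Paris"),
    ]
    print(to_jsonl(examples), end="")
    # To launch a job: save the output as train.jsonl, then call
    # submit_job(Path("train.jsonl")) once you have API access.
```

Keeping the data preparation separate from the API call makes it easy to inspect and validate the training file before spending any tokens.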

Some netizens are skeptical, suspecting that this is OpenAI's way of collecting data to train and improve its models. Others argue that GPT-4o mini's success is strong evidence that AI has become smart enough to fool us.

The launch of GPT-4o mini and the free fine-tuning policy will undoubtedly drive further development and democratization of AI technology. For developers, this is a rare opportunity to build more powerful applications at a lower cost. As for AI technology itself, does this mark a new milestone? We will have to wait and see.

Fine-tuning documentation: https://platform.openai.com/docs/guides/fine-tuning/fine-tuning-integrations