Large language models (LLMs) are advancing rapidly across many areas of natural language processing. As these models grow more complex, accurately evaluating their outputs becomes crucial. Human evaluation has traditionally filled this role, but it is time-consuming, hard to scale, and cannot keep pace with the speed of model development.

To address this issue, the Salesforce AI Research team has introduced SFR-Judge, a family of three LLM-based judge models with 8 billion, 12 billion, and 70 billion parameters, built on Meta Llama 3 and Mistral NeMo. SFR-Judge can perform several kinds of evaluation tasks, including pairwise comparison, single scoring, and binary classification, with the aim of helping research teams evaluate new models quickly and efficiently.

LLM-based judge models often suffer from biases that distort their judgments, such as positional bias (favoring a response because of where it appears in the prompt) and length bias (favoring longer responses). To counter these issues, SFR-Judge is trained with Direct Preference Optimization (DPO), which lets the model learn from both positive and negative examples. This improves its understanding of evaluation tasks, reduces bias, and makes its judgments more consistent.
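To make the idea of learning from positive and negative examples concrete, here is a minimal sketch of the standard DPO loss for a single preference pair. It is not the SFR-Judge training code: the function name, the argument names, and the default `beta` are illustrative assumptions, and the inputs are assumed to be summed log-probabilities of the chosen (positive) and rejected (negative) outputs under the model being trained and under a frozen reference model.

```python
import math


def dpo_loss(policy_chosen_logp: float,
             policy_rejected_logp: float,
             ref_chosen_logp: float,
             ref_rejected_logp: float,
             beta: float = 0.1) -> float:
    """Standard DPO loss for one (chosen, rejected) pair.

    All names and the default beta are illustrative assumptions,
    not values taken from the SFR-Judge paper.
    """
    # How much more the policy likes each output than the reference does.
    chosen_margin = policy_chosen_logp - ref_chosen_logp
    rejected_margin = policy_rejected_logp - ref_rejected_logp
    logits = beta * (chosen_margin - rejected_margin)

    # loss = -log(sigmoid(logits)), written in a numerically stable form.
    if logits >= 0:
        return math.log1p(math.exp(-logits))
    return -logits + math.log1p(math.exp(logits))


# The loss falls below log(2) once the policy prefers the chosen output
# more strongly than the reference model does.
baseline = dpo_loss(0.0, 0.0, 0.0, 0.0)
improved = dpo_loss(-1.0, -5.0, -2.0, -2.0)
print(baseline, improved)
```

Minimizing this loss pushes the model to assign relatively more probability to the positive example and less to the negative one, which is the mechanism the paragraph above describes.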

In testing across 13 benchmarks, SFR-Judge surpassed many existing judge models, including some proprietary ones. Notably, on the RewardBench leaderboard SFR-Judge reached an accuracy of 92.7%, and its models mark the first and second time that generative judge models have crossed the 90% threshold.

SFR-Judge's training data comes in three formats. The first, "Chain of Thought Critique," teaches the model to produce a structured analysis of the responses it evaluates. The second, "Standard Judgment," simplifies the process by having the model state directly whether a response meets the standard, without a critique. The third, "Response Deduction" (rendered in some summaries as "Response Derivation"), helps the model understand what high-quality responses look like, which strengthens its judgment. According to the team, combining the three formats significantly improves SFR-Judge's evaluation capabilities.
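As a rough illustration of how positive and negative examples for preference training might be assembled from judge outputs, the sketch below samples several judgments for one evaluation prompt and pairs one that matches a known gold verdict with one that does not. This is an assumption-laden sketch, not the paper's pipeline: the `JudgeSample` and `PreferencePair` types, the `build_pair` helper, and the "A"/"B" verdict labels are all hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class JudgeSample:
    text: str     # full judge output (critique plus verdict, or verdict only)
    verdict: str  # parsed verdict, e.g. "A" or "B" (hypothetical labels)


@dataclass
class PreferencePair:
    prompt: str
    chosen: str    # positive example: output whose verdict matches the gold label
    rejected: str  # negative example: output whose verdict contradicts it


def build_pair(prompt: str,
               samples: List[JudgeSample],
               gold_verdict: str) -> Optional[PreferencePair]:
    """Pair one correct and one incorrect judge output, if both exist."""
    correct = [s for s in samples if s.verdict == gold_verdict]
    wrong = [s for s in samples if s.verdict != gold_verdict]
    if not correct or not wrong:
        return None  # no contrast available, so no preference pair
    return PreferencePair(prompt, correct[0].text, wrong[0].text)


samples = [
    JudgeSample("Response A cites the source correctly. Verdict: A", "A"),
    JudgeSample("Response B is longer and more detailed. Verdict: B", "B"),
]
pair = build_pair("Which response is better?", samples, gold_verdict="A")
print(pair)
```

Under this sketch, a critique-style format would keep the full reasoning in `text`, while a judgment-only format would keep just the verdict line.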

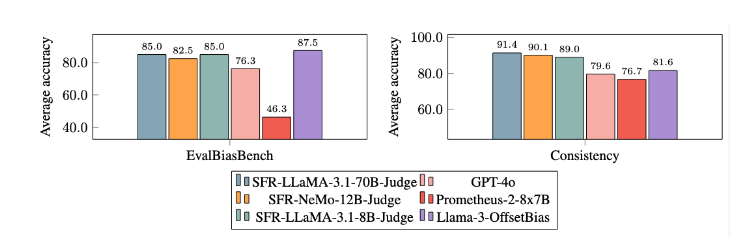

In the team's experiments, the SFR-Judge models also showed less bias than the other judge models tested. On the EvalBiasBench benchmark they achieved high pairwise order consistency, meaning that their verdicts stay the same when the order of the two responses is swapped. This makes SFR-Judge a reliable automated evaluation solution that reduces reliance on manual labeling and offers a more scalable option for model evaluation.
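Pairwise order consistency can be measured by asking a judge about each pair twice, once in each order, and counting how often the same underlying response wins both times. The sketch below is a minimal illustration under stated assumptions: the `Judge` signature, the "A"/"B" verdict labels, and both toy judges are hypothetical stand-ins, not SFR-Judge or the EvalBiasBench harness.

```python
from typing import Callable, Sequence, Tuple

# Hypothetical judge interface: (prompt, response_a, response_b) -> "A" or "B".
Judge = Callable[[str, str, str], str]


def pairwise_order_consistency(judge: Judge,
                               examples: Sequence[Tuple[str, str, str]]) -> float:
    """Fraction of pairs whose winner is unchanged when the order is swapped."""
    if not examples:
        raise ValueError("need at least one example")
    consistent = 0
    for prompt, first, second in examples:
        original = judge(prompt, first, second)  # `first` shown in slot A
        swapped = judge(prompt, second, first)   # `first` shown in slot B
        # Map each verdict back to the underlying response (0 = first, 1 = second).
        winner_original = 0 if original == "A" else 1
        winner_swapped = 1 if swapped == "A" else 0
        if winner_original == winner_swapped:
            consistent += 1
    return consistent / len(examples)


def position_biased_judge(prompt: str, a: str, b: str) -> str:
    return "A"  # always picks whatever is shown first


def length_based_judge(prompt: str, a: str, b: str) -> str:
    return "A" if len(a) > len(b) else "B"  # order-independent, but length-biased


examples = [
    ("Define DPO.", "Direct Preference Optimization.", "No idea."),
    ("2 + 2?", "4", "It is five."),
]
print(pairwise_order_consistency(position_biased_judge, examples))  # 0.0
print(pairwise_order_consistency(length_based_judge, examples))     # 1.0
```

The second toy judge shows why this metric alone is not enough: it is perfectly order-consistent yet still length-biased, which is why consistency is reported alongside accuracy on bias-focused test cases.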

Paper link: https://arxiv.org/abs/2409.14664

Key Points:

📊 High Accuracy: SFR-Judge achieved the top result on 10 of 13 benchmarks, including 92.7% accuracy on RewardBench.

🛡️ Bias Mitigation: The models show less bias than other judge models, particularly length bias and positional bias.

🔧 Versatile Applications: SFR-Judge supports pairwise comparison, single scoring, and binary classification, so it can adapt to a range of evaluation scenarios.