Cohere has added multimodal search to Embed3, which the company bills as its most advanced AI search model. Enterprises can now run retrieval over images as well as text.

Since its launch last year, Embed3 has been continuously updated to help businesses convert documents into numerical representations (embeddings). This latest upgrade extends that capability to images and improves image search performance.

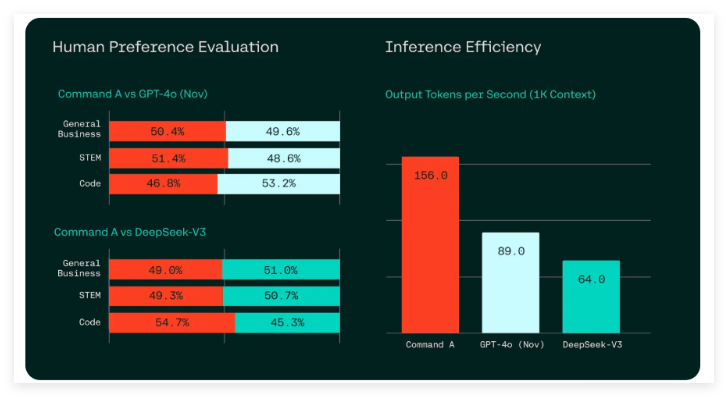

Cohere co-founder and CEO Aidan Gomez shared charts on social media showing Embed3's performance gains in image search.

In a blog post, Cohere said the new feature will help businesses make use of the large amount of data stored in images and work more efficiently. Enterprises can now search complex reports, product catalogs, and design files more quickly and accurately.

Embed3 generates embeddings for both text and images and places them in a single, unified latent space instead of storing them separately. Because the two modalities share one space, a single query can be compared against text and images alike. According to Cohere, this improves search quality: models that cluster text and images in separate regions tend to return results biased toward text, whereas a unified space better captures the underlying meaning of the data.
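To make the unified-space idea concrete, here is a minimal Python sketch of how retrieval over a shared embedding space typically works: every item, whether an image or a text passage, is represented by a vector, and results are ranked by cosine similarity to the query vector. The four-dimensional vectors, file names, and the `cosine` and `search` helpers are hypothetical stand-ins; real vectors would come from Embed3 and be much higher-dimensional, and this is not Cohere's own implementation.

```python
import math
from typing import Dict, List, Sequence, Tuple

# Hypothetical index: item id -> (modality, embedding).
# Toy 4-d vectors stand in for real Embed3 embeddings, which are far larger.
Index = Dict[str, Tuple[str, List[float]]]


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # a zero vector has no direction to compare
    return dot / (norm_a * norm_b)


def search(query: Sequence[float], index: Index, top_k: int = 3) -> List[Tuple[str, str, float]]:
    """Rank text and image items together in one pass, since they share a space."""
    scored = [
        (item_id, modality, cosine(query, vec))
        for item_id, (modality, vec) in index.items()
    ]
    scored.sort(key=lambda row: row[2], reverse=True)
    return scored[:top_k]


index: Index = {
    "q3_revenue_chart.png": ("image", [0.9, 0.1, 0.0, 0.1]),
    "q3_revenue_summary.txt": ("text", [0.8, 0.2, 0.1, 0.0]),
    "office_photo.jpg": ("image", [0.0, 0.1, 0.9, 0.3]),
    "hr_policy.txt": ("text", [0.1, 0.9, 0.2, 0.0]),
}

# Hypothetical embedding of a text query such as "Q3 revenue growth".
query = [1.0, 0.1, 0.0, 0.0]

if __name__ == "__main__":
    for item_id, modality, score in search(query, index, top_k=2):
        print(f"{item_id:<24} {modality:<6} {score:.3f}")
```

With these toy vectors the chart image ranks first and the text summary second, showing that a single ranking can mix modalities. A production system would usually replace the linear scan with a vector database or an approximate nearest-neighbor index.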

Here are some practical use cases of Embed3:

Graphs and Charts: Visual representations are crucial for understanding complex data. Users can now easily find the right charts to inform business decisions: they describe a specific insight, and Embed3 retrieves relevant graphs and charts, helping employees across teams make data-driven decisions more efficiently.

E-commerce Product Catalogs: Traditional search typically limits customers to finding products through text descriptions. Embed3 changes this: retailers can build applications that let shoppers search by image as well as by text, creating a differentiated shopping experience and boosting conversion rates.

Design Files and Templates: Designers often work with extensive asset libraries, relying on memory or strict naming conventions to organize visuals. Embed3 makes it simple to find specific UI mockups, visual templates, and presentation slides from a text description, streamlining the creative process.
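Several of these use cases involve images as inputs, either as the query (searching a catalog by photo) or as the documents being indexed (charts, slides, UI mockups). Embedding APIs that accept images commonly take them as base64-encoded data URIs; the Python sketch below assumes that is the input format Embed3 expects and shows a small helper for producing one. The helper names are hypothetical, and the commented-out Cohere SDK call reflects our understanding of the v2 Python client (`cohere.ClientV2`, `embed` with `input_type="image"`); verify parameter names and model IDs against Cohere's current API reference before relying on them.

```python
import base64
import mimetypes
from pathlib import Path


def to_data_uri(image_bytes: bytes, mime_type: str = "image/png") -> str:
    """Encode raw image bytes as a base64 data URI (RFC 2397 format)."""
    if not mime_type.startswith("image/"):
        raise ValueError(f"expected an image MIME type, got {mime_type!r}")
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return f"data:{mime_type};base64,{encoded}"


def file_to_data_uri(path: str) -> str:
    """Read an image file and infer its MIME type from the file extension."""
    mime_type, _ = mimetypes.guess_type(path)
    if mime_type is None:
        raise ValueError(f"cannot infer MIME type for {path!r}")
    return to_data_uri(Path(path).read_bytes(), mime_type)


# Assumed usage with the Cohere Python SDK (not executed here; check the docs):
#
# import cohere
# co = cohere.ClientV2(api_key="YOUR_API_KEY")  # placeholder key
# response = co.embed(
#     model="embed-english-v3.0",
#     input_type="image",
#     embedding_types=["float"],
#     images=[file_to_data_uri("product_photo.jpg")],  # hypothetical file
# )
```

Note that `to_data_uri` only checks the declared MIME type; it does not validate that the bytes are a well-formed image, and any size or format limits imposed by the API would still apply.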

Embed3 supports more than 100 languages, so it can serve a broad, global user base. The multimodal version is currently available on Cohere's platform and Amazon SageMaker.

As users grow accustomed to image search, businesses are moving to keep up, and Cohere's update gives them a more flexible way to search their data. Cohere also updated its API in September, making it easier for customers to switch from competing models to Cohere's.

Official Blog: https://cohere.com/blog/multimodal-embed-3

Key Points:

🌟 Embed3 supports multimodal search, allowing users to retrieve information through both images and text.

📈 The updated model significantly improves image search performance, helping businesses get more value from their image data.

🔄 Cohere updated its API in September, simplifying the process for customers to switch from other models.