A research team at Meta AI, in collaboration with researchers from the University of California, Berkeley, and New York University, recently introduced a method called Thought Preference Optimization (TPO), which aims to improve the response quality of instruction-tuned large language models (LLMs).

Unlike conventional models, which focus solely on the final answer, a model trained with TPO reflects internally before generating a response, with the goal of producing more accurate and coherent answers.

This new technique builds on an improved version of Chain-of-Thought (CoT) reasoning. During training, the method encourages the model to "think" before responding, helping it construct a more systematic internal thought process. Earlier attempts that simply prompted the model for CoT sometimes reduced accuracy, and training a model to think was challenging because instruction-tuning data lacks explicit thought steps. TPO addresses these limitations by letting the model optimize and streamline its own thought process, without displaying the intermediate thought steps to the user.

In the TPO process, the large language model is first prompted to generate a thought process followed by a final response, and multiple such outputs are sampled for each prompt. An evaluation model then scores the outputs, determining the best and worst responses. These outputs serve as the chosen and rejected examples for direct preference optimization (DPO), and repeating the procedure over several iterations improves the model's ability to generate relevant, high-quality responses.
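The selection step described above can be sketched in a few lines of Python. This is only an illustrative sketch, not the authors' implementation: the `Sample` and `PreferencePair` types, the `build_preference_pair` function, and the toy length-based judge are all hypothetical names introduced here, and a real setup would use an LLM-based judge and outputs sampled from the model being trained.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Sample:
    thought: str   # hidden reasoning, never shown to the judge or the user
    response: str  # final answer, the only part that gets scored


@dataclass
class PreferencePair:
    prompt: str
    chosen: Sample    # sample whose response scored highest
    rejected: Sample  # sample whose response scored lowest


def build_preference_pair(
    prompt: str,
    samples: List[Sample],
    judge: Callable[[str, str], float],
) -> PreferencePair:
    """Score each sampled response and keep the best and worst samples."""
    if len(samples) < 2:
        raise ValueError("need at least two samples to form a pair")
    # The judge sees only the prompt and the response, not the thought.
    scores = [judge(prompt, s.response) for s in samples]
    best = max(range(len(samples)), key=lambda i: scores[i])
    worst = min(range(len(samples)), key=lambda i: scores[i])
    return PreferencePair(prompt, samples[best], samples[worst])


def toy_judge(prompt: str, response: str) -> float:
    """Hypothetical stand-in for an LLM judge: longer responses score higher."""
    return float(len(response))


demo_samples = [
    Sample(thought="Recall the capital.", response="Paris."),
    Sample(thought="List facts first.", response="The capital of France is Paris."),
    Sample(thought="Guess.", response="Lyon"),
]
pair = build_preference_pair("What is the capital of France?", demo_samples, toy_judge)
print(pair.chosen.response, "|", pair.rejected.response)
```

Note that the pair keeps the full sample, thought included, even though only the response was scored. That is how a preference over responses can still shape the hidden thoughts during training.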

In this method, training prompts are adjusted to encourage the model to reflect internally before responding. Only the final responses are scored by an LLM-based evaluation model, so the model is rewarded solely for the quality of its response, and the thought steps themselves are never judged directly. TPO then applies direct preference optimization to pairs of chosen and rejected outputs, each consisting of a thought and its response, further refining the model's internal processes over multiple training cycles.
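For readers unfamiliar with direct preference optimization, the per-pair objective can be written as a short function. The sketch below follows the standard DPO loss, `-log(sigmoid(beta * margin))`. The function name, argument names, and the default `beta=0.1` are assumptions made for illustration, not values taken from the TPO paper. Each log-probability is assumed to be the summed token log-probability of a full output (thought plus response) under either the model being trained or a frozen reference model.

```python
import math


def dpo_loss(
    policy_chosen_logp: float,
    policy_rejected_logp: float,
    ref_chosen_logp: float,
    ref_rejected_logp: float,
    beta: float = 0.1,  # assumed value; controls how far the policy may drift
) -> float:
    """Standard DPO loss for a single (chosen, rejected) pair."""
    # How much more the policy likes each output than the reference does.
    chosen_shift = policy_chosen_logp - ref_chosen_logp
    rejected_shift = policy_rejected_logp - ref_rejected_logp
    logits = beta * (chosen_shift - rejected_shift)
    # -log(sigmoid(x)) computed in a numerically stable way.
    return max(-logits, 0.0) + math.log1p(math.exp(-abs(logits)))


# Policy identical to the reference: no preference has been learned yet.
print(dpo_loss(-10.0, -10.0, -10.0, -10.0))
# Policy has moved toward the chosen output and away from the rejected one.
print(dpo_loss(-8.0, -12.0, -10.0, -10.0))
```

The loss falls as the trained model assigns relatively more probability to the chosen output than the reference does, and relatively less to the rejected one. In practice this would be computed over batches of tensors in a training framework rather than on plain floats.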

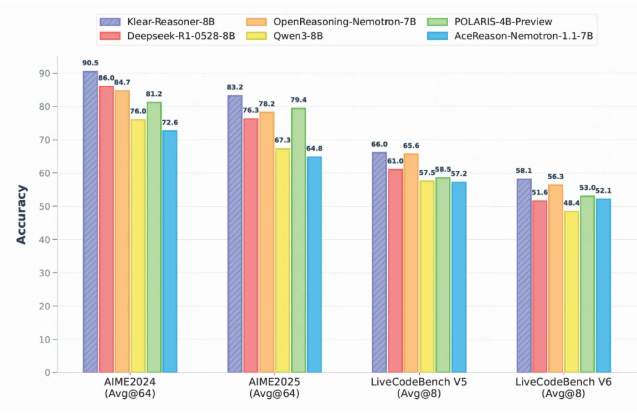

The research results show that TPO performs strongly on multiple benchmarks, surpassing various existing models. The method is not only applicable to logical and mathematical tasks but also shows potential in areas not usually associated with step-by-step reasoning, such as marketing and health.

Paper: https://arxiv.org/pdf/2410.10630

Key Points:

🧠 TPO strengthens a large language model's ability to reflect before generating a response, with the aim of greater accuracy.

📈 Through improved Chain-of-Thought reasoning, the model can optimize and streamline its internal thought process, enhancing response quality.

💡 TPO applies to a variety of fields: beyond logical and mathematical tasks, it also shows potential in domains such as marketing and health.