A research team recently released HelloMeme, a framework that transfers facial expressions from a person in one scene to a person in a different scene with high accuracy.

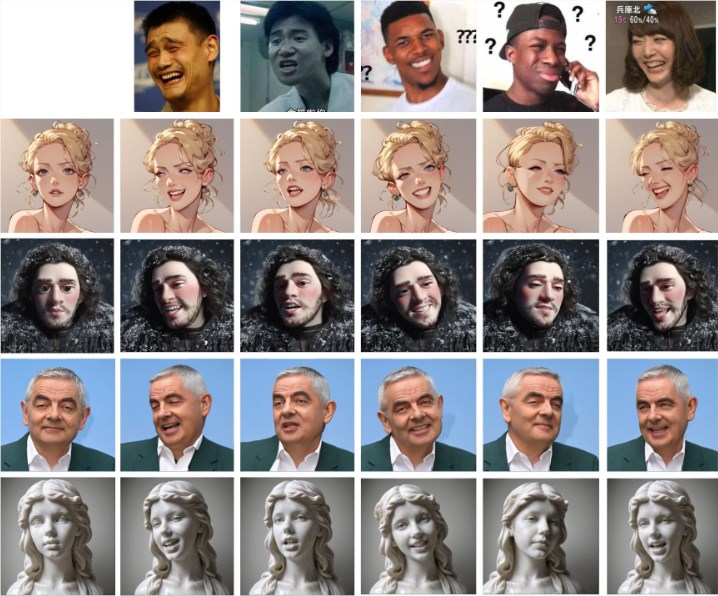

As shown in the image above, given an expression image (first row), HelloMeme transfers its detailed expression to images of other people.

The core of HelloMeme lies in its network structure. The framework extracts features from each frame of a driving video and feeds them into the HMControlModule, which lets researchers generate video footage. The initially generated videos, however, flickered between frames, which hurt the viewing experience. To address this, the team introduced the Animatediff module, which significantly improved video continuity but slightly reduced fidelity.

To resolve this trade-off, the researchers further optimized the Animatediff module, ultimately achieving both improved video continuity and high image quality.
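The flicker described above can be given a rough number. The sketch below is one simple way to do so, not necessarily the measure the researchers used: it averages the absolute pixel change between consecutive frames. The function name `flicker_score` and the flattened-list frame format are assumptions made for illustration.

```python
from typing import List, Sequence

# Assumption: a frame is flattened to a sequence of pixel intensities in [0, 1].
Frame = Sequence[float]


def flicker_score(frames: List[Frame]) -> float:
    """Mean absolute pixel change between consecutive frames (0.0 = no change)."""
    if len(frames) < 2:
        return 0.0
    total = 0.0
    for prev, curr in zip(frames, frames[1:]):
        if len(prev) != len(curr):
            raise ValueError("all frames must have the same number of pixels")
        # Average per-pixel change for this pair of neighbouring frames.
        total += sum(abs(a - b) for a, b in zip(prev, curr)) / len(prev)
    return total / (len(frames) - 1)


# Two tiny two-pixel "videos": one static, one that jumps back and forth.
steady = [[0.5, 0.5], [0.5, 0.5], [0.5, 0.5]]
flickery = [[0.5, 0.5], [0.9, 0.1], [0.5, 0.5]]

print(flicker_score(steady))
print(flicker_score(flickery))
```

A score like this also rises with genuine motion, so it is only meaningful when comparing videos generated from the same driving sequence.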

Additionally, the HelloMeme framework provides robust support for facial expression editing. By binding ARKit Face Blendshapes, users can easily control the facial expressions of characters in the generated videos. This flexibility allows creators to produce videos with specific emotions and expressions, greatly enriching the expressive power of video content.
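To make the blendshape idea concrete, here is a minimal sketch of how an expression could be described as ARKit-style coefficients and animated over a few frames. The blendshape names (`jawOpen`, `mouthSmileLeft`, and so on) and the 0.0 to 1.0 range come from ARKit; the function `interpolate_expression` is hypothetical and is not part of HelloMeme's interface.

```python
from typing import Dict, List

# A handful of ARKit blendshape names; each coefficient runs from
# 0.0 (neutral) to 1.0 (fully applied).
NEUTRAL: Dict[str, float] = {
    "jawOpen": 0.0,
    "mouthSmileLeft": 0.0,
    "mouthSmileRight": 0.0,
    "browInnerUp": 0.0,
}
SMILE: Dict[str, float] = {
    "jawOpen": 0.2,
    "mouthSmileLeft": 0.8,
    "mouthSmileRight": 0.8,
    "browInnerUp": 0.1,
}


def interpolate_expression(
    start: Dict[str, float], end: Dict[str, float], num_frames: int
) -> List[Dict[str, float]]:
    """Linearly blend from one expression to another, one dict per frame."""
    if num_frames < 2:
        raise ValueError("num_frames must be at least 2")
    if start.keys() != end.keys():
        raise ValueError("both expressions must use the same blendshape names")
    frames = []
    for i in range(num_frames):
        t = i / (num_frames - 1)  # 0.0 on the first frame, 1.0 on the last
        frames.append(
            {
                # Clamp so the result always stays in the valid range.
                name: min(1.0, max(0.0, (1 - t) * start[name] + t * end[name]))
                for name in start
            }
        )
    return frames


curve = interpolate_expression(NEUTRAL, SMILE, num_frames=5)
for frame in curve:
    print(frame)
```

Each per-frame dictionary is the kind of control signal a blendshape-driven generator would consume; how HelloMeme maps these values to its own conditions is not covered here.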

In terms of technical compatibility, HelloMeme employs a hot-swappable adapter design based on SD1.5. The greatest advantage of this design is that it does not affect the generalization capability of the T2I (text-to-image) model, allowing any stylized model developed on the basis of SD1.5 to integrate seamlessly with HelloMeme. This provides more possibilities for various creations.
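The hot-swappable idea can be illustrated with a toy example. This is a plain-Python sketch of the general adapter pattern, not HelloMeme's actual code: a frozen base layer is wrapped so that an adapter's output is added as a residual, and removing the adapter restores the base behavior exactly. All class and function names here are hypothetical.

```python
from typing import Callable, List, Optional

Vector = List[float]


class FrozenLayer:
    """Stands in for a pretrained layer whose weights are never modified."""

    def __init__(self, scale: float) -> None:
        self._scale = scale

    def __call__(self, x: Vector) -> Vector:
        return [self._scale * v for v in x]


class AdaptedLayer:
    """Wraps a frozen layer and optionally adds an adapter's output as a residual."""

    def __init__(self, base: FrozenLayer) -> None:
        self.base = base
        self.adapter: Optional[Callable[[Vector], Vector]] = None

    def attach(self, adapter: Callable[[Vector], Vector]) -> None:
        self.adapter = adapter

    def detach(self) -> None:
        self.adapter = None

    def __call__(self, x: Vector) -> Vector:
        out = self.base(x)
        if self.adapter is None:
            return out  # identical to the untouched base model
        return [o + a for o, a in zip(out, self.adapter(x))]


def toy_control_adapter(x: Vector) -> Vector:
    """Hypothetical adapter: contributes a constant offset per element."""
    return [0.5 for _ in x]


layer = AdaptedLayer(FrozenLayer(scale=2.0))
x = [1.0, 2.0]

plain = layer(x)                    # base output only
layer.attach(toy_control_adapter)
controlled = layer(x)               # base output plus adapter residual
layer.detach()
restored = layer(x)                 # back to the base output

# The same adapter can be plugged into a different ("stylized") base.
stylized = AdaptedLayer(FrozenLayer(scale=3.0))
stylized.attach(toy_control_adapter)
stylized_out = stylized(x)

print(plain, controlled, restored, stylized_out)
```

Because the adapter only adds to the base output and never rewrites the base weights, swapping in a different base leaves the adapter usable as-is, which is the property the article attributes to the SD1.5-based design.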

The research team found that introducing the HMReferenceModule significantly strengthened the fidelity conditions during video generation, meaning that high-quality videos can be produced with fewer sampling steps. This finding improves generation efficiency and also opens the door to real-time video generation.

Comparisons with other methods show that HelloMeme's expression transfer looks more natural and stays closer to the original expression.

Key Points:

🌐 HelloMeme achieves both smoothness and high image quality in video generation through its unique network structure and the Animatediff module.

🎭 The framework supports ARKit Face Blendshapes, allowing users to flexibly control character facial expressions and enrich video content.

⚙️ The framework adopts a hot-swappable adapter design that keeps it compatible with other models based on SD1.5, giving creators greater flexibility.