As artificial intelligence develops rapidly, large models keep getting more capable, but the efficiency of the systems that serve them has become a growing challenge. Handling heavy inference loads, reducing inference costs, and shortening response times are now problems shared across the industry.

Kimi, in collaboration with the MADSys laboratory at Tsinghua University, designed Mooncake, a KVCache-centric inference system. The design was officially released in June 2024.

The Mooncake inference system significantly improves inference throughput through a prefill/decode (PD) disaggregated architecture and an approach that trades storage for computation, and it has attracted widespread industry attention. To promote wider adoption of this framework, Kimi, together with the MADSys laboratory at Tsinghua University and several companies including 9#AISoft, Alibaba Cloud, and Huawei Storage, has launched the open-source project Mooncake. On November 28, the Mooncake technical framework was officially published on GitHub.

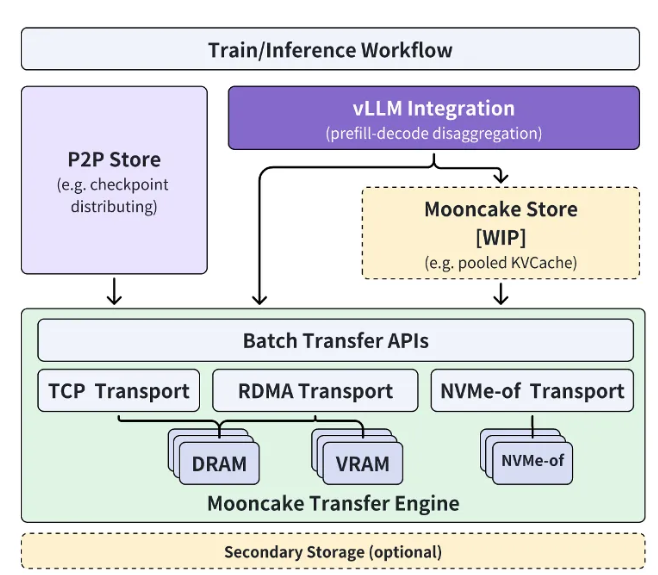

The Mooncake open-source project is built around a large-scale KVCache pool. It plans to open-source Mooncake Store, a high-performance multi-level KVCache cache, in phases. The project will also be compatible with a range of inference engines and with the underlying storage and transfer resources.

So far, the Transfer Engine component has been open-sourced on GitHub. The ultimate goal of the Mooncake project is to establish a new standard interface for high-performance memory-semantic storage in the era of large models and to provide a reference implementation.

Xu Xinran, Vice President of Engineering at Kimi, stated: "Through close cooperation with the MADSys laboratory at Tsinghua University, we have jointly created Mooncake, a disaggregated large model inference architecture that pushes the optimization of inference resources to the limit. Mooncake not only improves user experience but also reduces costs, providing an effective solution for long-text processing and high-concurrency demands." He said he looks forward to more companies and research institutions joining the Mooncake project to explore more efficient model inference architectures together, so that products built on large model technology, such as AI assistants, can benefit a wider audience.

Project link: https://github.com/kvcache-ai/Mooncake

Key points:

🌟 Kimi and the MADSys laboratory at Tsinghua University jointly released the Mooncake inference system to improve AI inference efficiency.

🔧 The Mooncake project has been open-sourced on GitHub, starting with the Transfer Engine, and aims to build a standard interface for high-performance memory-semantic storage.

🤝 The project invites more companies and research institutions to participate and jointly advance AI technology.