Yuezhian Technology Co., Ltd. (Moonshot AI, the developer of Kimi) and Tsinghua University's MADSys Laboratory have jointly released Mooncake as an open-source project. The goal is to co-develop a KVCache-centric architecture for large model inference. In June 2024, the two parties published the design of Mooncake, the inference system behind Kimi. Mooncake uses prefill/decode (PD) disaggregation and an architecture that trades storage for computation. This design significantly improved inference throughput and attracted wide attention in the industry.

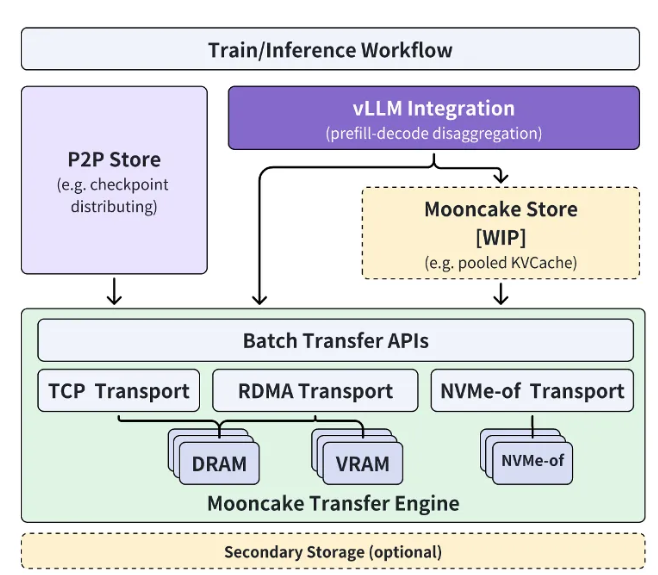

The Mooncake project grew out of the team's research paper and is built around a large-scale KVCache pool. By trading storage for computation, it cuts computational overhead and raises inference throughput. The project is being open-sourced in phases. It will gradually release Mooncake Store, a high-performance multi-level KVCache store, while remaining compatible with a range of inference engines and underlying storage and transfer resources. Currently, the Transfer Engine component is open source on GitHub.
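To make the idea of trading storage for computation more concrete, here is a toy Python sketch. It assumes a prefix-keyed cache in which KV blocks are keyed by chained hashes of token prefixes. When a request shares a prefix with earlier requests, it reuses those cached blocks during prefill instead of recomputing them. The names `KVCachePool`, `block_hashes` and `BLOCK_SIZE` are hypothetical and chosen only for illustration. This is not Mooncake's API or the actual design of Mooncake Store, which holds real attention key/value data rather than placeholder strings.

```python
import hashlib
from typing import Dict, List, Tuple

BLOCK_SIZE = 4  # hypothetical tokens per KVCache block; real systems use larger blocks


def block_hashes(tokens: List[int], block_size: int = BLOCK_SIZE) -> List[str]:
    """Chain-hash each full block so a block's key depends on the entire prefix before it."""
    hashes: List[str] = []
    prev = ""
    full_len = len(tokens) - len(tokens) % block_size  # ignore a trailing partial block
    for start in range(0, full_len, block_size):
        block = tokens[start:start + block_size]
        h = hashlib.sha256((prev + ",".join(map(str, block))).encode()).hexdigest()
        hashes.append(h)
        prev = h
    return hashes


class KVCachePool:
    """Toy stand-in for a shared KVCache pool (hypothetical, not Mooncake's interface)."""

    def __init__(self) -> None:
        self.store: Dict[str, str] = {}

    def prefill(self, tokens: List[int]) -> Tuple[int, int]:
        """Return (reused_tokens, computed_tokens) for one request."""
        hashes = block_hashes(tokens)
        reused_blocks = 0
        # Reuse the longest run of leading blocks that is already cached.
        for h in hashes:
            if h not in self.store:
                break
            reused_blocks += 1
        reused = reused_blocks * BLOCK_SIZE
        computed = len(tokens) - reused
        # "Compute" KV only for the uncached full blocks and store it (placeholder values).
        for h in hashes[reused_blocks:]:
            self.store[h] = f"kv:{h[:8]}"
        return reused, computed


if __name__ == "__main__":
    pool = KVCachePool()
    shared_context = list(range(12))  # e.g. a long document shared by several requests
    print(pool.prefill(shared_context + [100, 101]))       # first request computes everything
    print(pool.prefill(shared_context + [200, 201, 202]))  # second request reuses the 12-token prefix
```

The first call computes all 14 tokens. The second call reuses the three cached prefix blocks (12 tokens) and computes only the last 3. The extra memory spent on the pool is what saves prefill computation.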

XU Xinran, Vice President of the Kimi project, said the team worked closely with Tsinghua University's MADSys Laboratory to co-develop Mooncake, a disaggregated large model inference architecture that pushes the efficiency of inference resources to the limit. Mooncake has improved the Kimi user experience and lowered costs. It also offers an effective way to handle long contexts and highly concurrent workloads. The company believes that open-source collaboration among industry, academia, and research institutions can move the whole industry toward more efficient inference platforms. It invites more companies and research institutions to join the Mooncake project and help explore new architectures for more efficient and advanced model inference systems. The aim is for products such as AI assistants built on large model technology to reach a wider audience.

Project repository: https://github.com/kvcache-ai/Mooncake