As competition to develop large language models (LLMs) intensifies, major AI companies face mounting challenges, and interest is growing in alternatives to the Transformer architecture, which has been the foundation of today's generative AI since Google researchers introduced it in 2017. In response, Liquid AI, a startup incubated at MIT, has launched a new framework called STAR (Synthesis of Tailored Architectures).

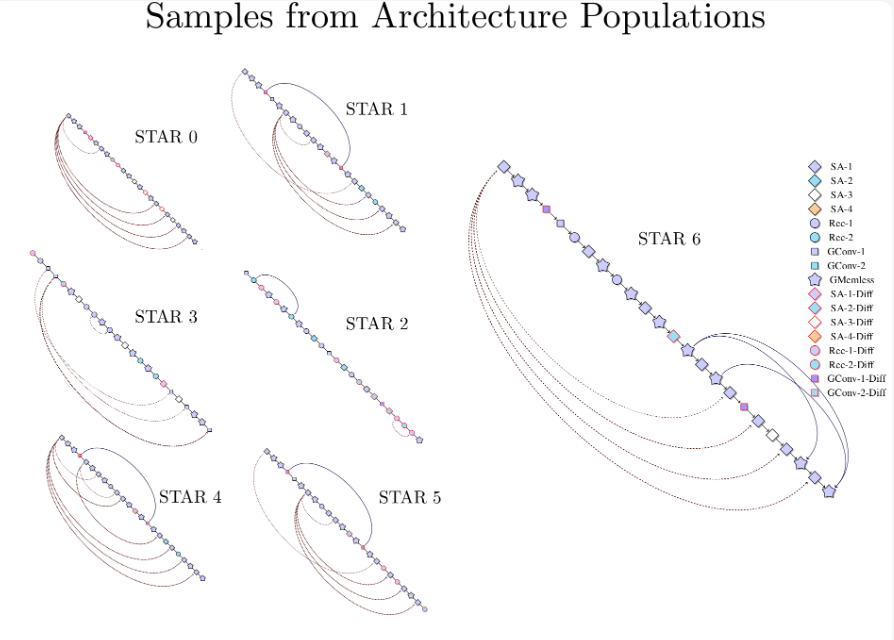

The STAR framework uses evolutionary algorithms and a numerical encoding system to automate the generation and optimization of AI model architectures. According to Liquid AI's research team, STAR departs from traditional architecture design by using a hierarchical encoding technique, the "STAR genome," to explore a broad design space of potential architectures. By recombining and mutating these genomes, STAR can synthesize and optimize architectures that meet specific performance and hardware requirements.
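The article does not describe STAR's genome format, operator set, or fitness evaluation in detail, so the following is only a minimal sketch of the general technique it names: a genetic algorithm that evolves integer-encoded architectures through crossover and mutation. The operator vocabulary (`OPERATORS`), the per-operator scores used by `toy_fitness`, and all function names are hypothetical stand-ins. In a real system, fitness would come from training and evaluating each candidate model and measuring properties such as cache size.

```python
import random
from typing import Callable, List

# Hypothetical operator vocabulary; STAR's real search space is far richer.
OPERATORS = ["attention", "recurrence", "convolution", "gated_mlp"]

Genome = List[int]  # one integer per layer, indexing into OPERATORS

def random_genome(depth: int, rng: random.Random) -> Genome:
    return [rng.randrange(len(OPERATORS)) for _ in range(depth)]

def mutate(genome: Genome, rate: float, rng: random.Random) -> Genome:
    # Replace each gene with a random operator with probability `rate`.
    return [rng.randrange(len(OPERATORS)) if rng.random() < rate else g for g in genome]

def crossover(a: Genome, b: Genome, rng: random.Random) -> Genome:
    # Single-point crossover: prefix from one parent, suffix from the other.
    point = rng.randrange(1, len(a))
    return a[:point] + b[point:]

def evolve(fitness: Callable[[Genome], float], depth: int = 8, pop_size: int = 20,
           generations: int = 30, mutation_rate: float = 0.1, seed: int = 0) -> Genome:
    rng = random.Random(seed)
    population = [random_genome(depth, rng) for _ in range(pop_size)]
    for _ in range(generations):
        # Truncation selection: the fitter half survives unchanged as parents.
        population.sort(key=fitness, reverse=True)
        parents = population[: pop_size // 2]
        children = []
        while len(parents) + len(children) < pop_size:
            a, b = rng.sample(parents, 2)
            children.append(mutate(crossover(a, b, rng), mutation_rate, rng))
        population = parents + children
    return max(population, key=fitness)

# Toy objective (hypothetical numbers): reward a quality proxy, penalize cache cost.
QUALITY = {"attention": 1.0, "recurrence": 0.8, "convolution": 0.6, "gated_mlp": 0.5}
CACHE = {"attention": 1.0, "recurrence": 0.1, "convolution": 0.05, "gated_mlp": 0.0}

def toy_fitness(genome: Genome) -> float:
    ops = [OPERATORS[g] for g in genome]
    return sum(QUALITY[o] for o in ops) - 0.5 * sum(CACHE[o] for o in ops)

best = evolve(toy_fitness)
print([OPERATORS[g] for g in best])
```

Because the fitter half of each generation is carried over unchanged, the best score in the population never decreases from one generation to the next. With this toy objective, which favors operators that score well at low cache cost, the search tends toward recurrence-style layers.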

In tests on autoregressive language modeling, STAR outperformed highly optimized Transformer++ and hybrid models. When optimized for both quality and cache size, STAR-evolved architectures reduced cache size by up to 37% compared with hybrid models and by up to 90% compared with Transformers. These efficiency gains do not come at the cost of predictive performance; in some cases, the STAR-evolved models even outperform their competitors.
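To make the cache-size comparison concrete: during autoregressive generation, each attention layer stores keys and values for every previous token, so its cache grows linearly with sequence length, whereas recurrent-style layers keep a fixed-size state. The sketch below computes cache size for hypothetical layer configurations. The `Layer` fields, layer counts, head sizes, and state size are illustrative assumptions and are not meant to reproduce the 37% or 90% figures reported for STAR.

```python
from dataclasses import dataclass
from typing import List

@dataclass
class Layer:
    kind: str            # "attention" or "recurrent" (illustrative categories)
    kv_heads: int = 8    # hypothetical number of key/value heads
    head_dim: int = 128  # hypothetical dimension per head
    state_size: int = 0  # fixed state size in elements, used by recurrent layers only

def cache_bytes(layers: List[Layer], seq_len: int, bytes_per_elem: int = 2) -> int:
    """Inference cache size for one sequence, assuming 16-bit values by default."""
    elements = 0
    for layer in layers:
        if layer.kind == "attention":
            # Keys and values (factor 2) for every cached token.
            elements += 2 * layer.kv_heads * layer.head_dim * seq_len
        else:
            # A recurrent-style layer keeps a constant-size state regardless of seq_len.
            elements += layer.state_size
    return elements * bytes_per_elem

def reduction(baseline: int, candidate: int) -> float:
    """Fractional cache reduction of `candidate` relative to `baseline`."""
    return 1.0 - candidate / baseline

# Hypothetical 24-layer models: all attention versus 6 attention + 18 recurrent layers.
transformer = [Layer("attention") for _ in range(24)]
hybrid = [Layer("attention") for _ in range(6)] + [
    Layer("recurrent", state_size=8 * 128 * 16) for _ in range(18)
]

seq_len = 4096
base = cache_bytes(transformer, seq_len)
cand = cache_bytes(hybrid, seq_len)
print(f"Transformer: {base / 2**20:.0f} MiB, hybrid: {cand / 2**20:.1f} MiB, "
      f"reduction: {reduction(base, cand):.1%}")
```

Because the attention term scales with `seq_len` and the recurrent term does not, the gap between the two designs widens as context length grows.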

The research also indicates that STAR's architectures scale well: a STAR-evolved model scaled from 125 million to 1 billion parameters performed comparably to or better than existing Transformer++ and hybrid models on standard benchmarks, while substantially reducing inference cache requirements.

According to Liquid AI, STAR's design draws on principles from dynamical systems, signal processing, and numerical linear algebra to create a flexible search space of computational units. A notable feature of STAR is its modular design, which lets it encode and optimize architectures at multiple levels and gives researchers insight into which combinations of architectural components work well together.
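The article says STAR encodes architectures at multiple levels but does not spell out the encoding. As a rough illustration of what a hierarchical genome could look like, the hypothetical sketch below separates an operator level (a list of computational units) from a backbone level (which unit each layer uses, and whether it reuses weights from an earlier layer), then decodes the two into a layer-by-layer description. The gene layout, field names, and weight-sharing rules are assumptions for illustration, not STAR's actual genome.

```python
from typing import Dict, List, Optional, Tuple

# Operator level: (operator family, width multiplier). Hypothetical fields.
OperatorGene = Tuple[str, int]
# Backbone level: one (operator index, share-with layer index or -1) pair per layer.
BackboneGene = List[Tuple[int, int]]

def decode(operators: List[OperatorGene], backbone: BackboneGene) -> List[Dict]:
    """Expand a two-level genome into a per-layer architecture description."""
    layers: List[Dict] = []
    for position, (op_index, share_with) in enumerate(backbone):
        family, width = operators[op_index]
        if share_with >= position:
            raise ValueError("a layer may only share weights with an earlier layer")
        if share_with >= 0 and backbone[share_with][0] != op_index:
            raise ValueError("weight sharing requires the same operator")
        shared: Optional[int] = share_with if share_with >= 0 else None
        layers.append({"layer": position, "family": family,
                       "width": width, "shares_weights_with": shared})
    return layers

operators = [("attention", 1), ("recurrence", 2), ("convolution", 1)]
backbone = [(1, -1), (2, -1), (0, -1), (1, 0)]  # layer 3 reuses layer 0's weights
for layer in decode(operators, backbone):
    print(layer)
```

In a scheme like this, mutations can act on either level independently (changing an operator's width, or changing which unit a layer uses), so a well-performing operator can be reused across many candidate backbones.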

Liquid AI believes STAR's efficient architecture synthesis will be applicable across many fields, especially in scenarios that require balancing quality against computational efficiency. Although Liquid AI has not yet announced specific commercial deployments or pricing plans, its research results mark a significant advance in automated architecture design. As the AI field continues to evolve, frameworks like STAR may play an important role in shaping the next generation of intelligent systems.

Official blog: https://www.liquid.ai/research/automated-architecture-synthesis-via-targeted-evolution

Key Points:

🌟 The STAR framework launched by Liquid AI automatically generates and optimizes AI model architectures through evolutionary algorithms.

📉 STAR-evolved models reduce cache size by up to 90% compared with traditional Transformers while matching or exceeding their performance.

🔍 The modular design of STAR can be applied across various fields, driving further development in AI system optimization.