ARC-AGI, short for "Abstraction and Reasoning Corpus for Artificial General Intelligence," is an important benchmark in the field of artificial intelligence, and AI systems are getting close to solving it. However, the test's creator, Francois Chollet, warns that the rising scores do not mean we are closer to achieving Artificial General Intelligence (AGI). He points out that the test itself has design flaws and that the improved results do not represent a genuine research breakthrough.

Since Chollet launched ARC-AGI in 2019, AI systems have consistently performed poorly on the test. Until this year, the best-performing system could solve fewer than one-third of the tasks. Chollet attributes this mainly to current AI research being overly reliant on large language models (LLMs). He notes that while LLMs can recognize patterns in large-scale data, they depend on memorization rather than reasoning, which makes it difficult for them to handle situations they have not seen before.

"LLM models rely on extracting patterns from training data rather than performing independent reasoning. They merely 'remember' patterns instead of generating new inferences," Chollet explained in a series of posts on the social platform X.

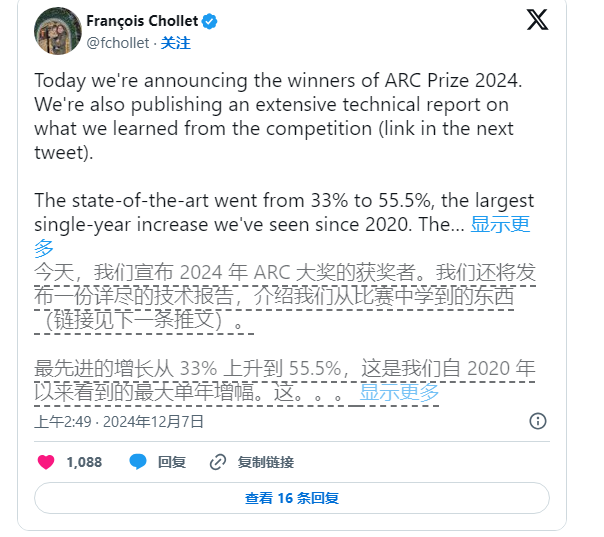

Nonetheless, Chollet has continued to push AI research forward. In June of this year, he and Zapier founder Mike Knoop jointly launched a $1 million competition to encourage the development of open-source AI that can beat the ARC-AGI benchmark. Although the best-performing system among the 17,789 entries scored only 55.5%, below the 85% threshold regarded as "human-level" performance, Chollet and Knoop still view this as an important step forward.

Knoop noted in a blog post that this score does not mean we are closer to achieving AGI. Rather, it highlights that some tasks in ARC-AGI can be solved by "brute force," so they may not provide a useful signal of true general intelligence. ARC-AGI was originally designed to test AI's generalization capabilities by presenting complex, unseen tasks, yet it remains uncertain whether these tasks can effectively assess AGI.

Image source: AI-generated image, licensed from Midjourney.

The tasks in the ARC-AGI benchmark are puzzle-like problems that require an AI system to infer an unknown answer from known information. Although these tasks are meant to push AI to adapt to new situations, the results suggest that existing models find solutions through extensive computation rather than by demonstrating genuinely intelligent adaptability.
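To make the "brute force" concern concrete, here is a minimal Python sketch of how a puzzle of this kind could be solved by exhaustive search instead of reasoning. Everything in it is a hypothetical illustration: the toy grids, the three grid operations, and the `brute_force_search` helper are assumptions made for this example, not actual ARC-AGI tasks or the method of any competition entry.

```python
from itertools import product

# Hypothetical toy puzzle: each pair maps an input grid to an output grid.
# The numbers stand for colors; these are NOT real ARC-AGI tasks.
TRAIN_PAIRS = [
    ([[1, 0], [0, 0]], [[0, 1], [0, 0]]),
    ([[2, 3], [0, 0]], [[3, 2], [0, 0]]),
]
TEST_INPUT = [[0, 5], [4, 0]]


def flip_horizontal(grid):
    """Mirror each row left to right."""
    return [row[::-1] for row in grid]


def flip_vertical(grid):
    """Reverse the order of the rows."""
    return [row[:] for row in grid[::-1]]


def transpose(grid):
    """Swap rows and columns."""
    return [list(column) for column in zip(*grid)]


# A tiny, made-up vocabulary of candidate operations.
PRIMITIVES = {
    "flip_horizontal": flip_horizontal,
    "flip_vertical": flip_vertical,
    "transpose": transpose,
}


def brute_force_search(train_pairs, max_depth=2):
    """Try every sequence of primitives up to max_depth and return the
    first one that reproduces all known input/output pairs."""
    for depth in range(1, max_depth + 1):
        for names in product(PRIMITIVES, repeat=depth):

            def program(grid, names=names):
                for name in names:
                    grid = PRIMITIVES[name](grid)
                return grid

            if all(program(inp) == out for inp, out in train_pairs):
                return names, program
    return None, None


names, program = brute_force_search(TRAIN_PAIRS)
print("Found program:", names)
print("Prediction for test input:", program(TEST_INPUT))
```

The search arrives at a correct answer for the toy puzzle without any understanding of what the grids depict. It simply enumerates combinations until one fits, which is the kind of success that says little about general intelligence.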

Additionally, the creators of ARC-AGI face criticism from peers, particularly over the ambiguity in how AGI is defined. An OpenAI employee recently suggested that if AGI is defined as "AI that performs better than most humans on most tasks," then AGI has already been achieved. Chollet and Knoop, however, believe that the ARC-AGI benchmark as currently designed does not yet fully serve its purpose of measuring progress toward general intelligence.

Looking ahead, Chollet and Knoop plan to release a second generation of the ARC-AGI benchmark and hold a new competition in 2025 to address the shortcomings of the current test. They stated that the new benchmark will aim to steer AI research toward more meaningful directions and accelerate progress toward AGI.

However, fixing the existing benchmark is no easy task. The efforts of Chollet and Knoop show that defining intelligence for AI systems, and general intelligence in particular, remains a daunting and complex challenge.