Recently, researchers from Microsoft Research, in collaboration with the University of Washington, Stanford University, the University of Southern California, the University of California, Davis, and the University of California, San Francisco, released LLaVA-Rad, a small multimodal model (SMM) designed to generate clinical radiology reports more efficiently. The model is a notable advance in medical image processing and broadens the range of possible clinical applications in radiology.

In biomedicine, research on large foundation models has shown considerable promise, particularly multimodal generative AI that processes text and images together and can therefore support tasks such as visual question answering and radiology report generation. Significant challenges remain, however. Large models demand substantial computing resources, which makes widespread deployment in clinical environments difficult. Small multimodal models are more efficient but still lag well behind larger models in performance. In addition, the scarcity of open-source models and of reliable methods for assessing factual accuracy further limits clinical adoption.

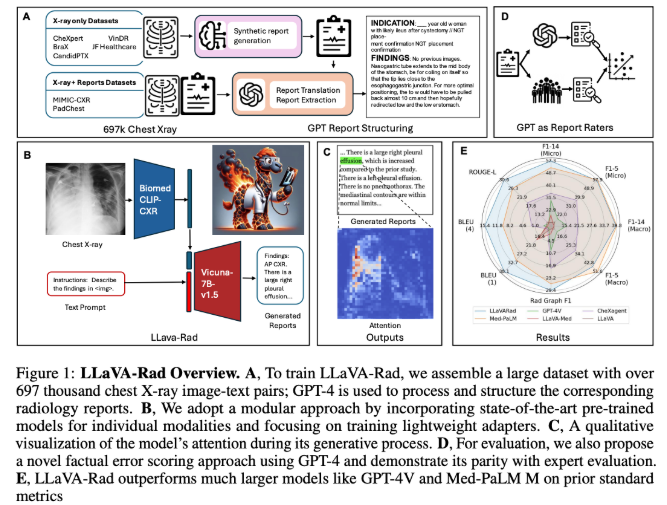

LLaVA-Rad was trained on 697,435 radiology image-report pairs drawn from seven sources, with a focus on chest X-ray (CXR) imaging, the most common type of medical imaging examination. Training is modular and proceeds in three stages (unimodal pre-training, alignment, and fine-tuning), using an efficient adapter mechanism to embed non-text modalities into the text embedding space. Although LLaVA-Rad is smaller than large models such as Med-PaLM M, it performs strongly, improving on other comparable models by 12.1% on ROUGE-L and 10.1% on F1-RadGraph, two key report-generation metrics.
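To make the adapter idea concrete, here is a minimal pure-Python sketch of a LLaVA-style projector: a small two-layer MLP maps each image patch feature vector into the language model's token embedding space, and the resulting "image tokens" are placed in front of the text token embeddings. The layer shapes, the GELU activation, the random initialization, and the per-stage table of trainable modules are all assumptions modeled on the general LLaVA recipe, not details confirmed for LLaVA-Rad; names such as `MLPAdapter` and `TRAINABLE_BY_STAGE` are hypothetical.

```python
import math
import random

# ASSUMED stage schedule, modeled on the general LLaVA recipe (not confirmed for LLaVA-Rad).
# In unimodal pre-training, the image encoder and language model are each trained on their own modality.
TRAINABLE_BY_STAGE = {
    "unimodal_pretraining": {"image_encoder", "language_model"},
    "alignment": {"adapter"},                      # assumed: encoder and LLM frozen
    "fine_tuning": {"adapter", "language_model"},  # assumed: encoder still frozen
}


def gelu(x: float) -> float:
    """GELU activation, tanh approximation."""
    return 0.5 * x * (1.0 + math.tanh(math.sqrt(2.0 / math.pi) * (x + 0.044715 * x ** 3)))


def linear(vec, weight, bias):
    """Dense layer: weight has shape (out_dim, in_dim)."""
    return [sum(w * v for w, v in zip(row, vec)) + b for row, b in zip(weight, bias)]


def init_layer(in_dim, out_dim, rng):
    """Small random weights and zero bias (illustrative initialization)."""
    weight = [[rng.gauss(0.0, 0.02) for _ in range(in_dim)] for _ in range(out_dim)]
    return weight, [0.0] * out_dim


class MLPAdapter:
    """Hypothetical two-layer MLP mapping image patch features into the text embedding space."""

    def __init__(self, vision_dim: int, text_dim: int, seed: int = 0):
        rng = random.Random(seed)
        self.w1, self.b1 = init_layer(vision_dim, text_dim, rng)
        self.w2, self.b2 = init_layer(text_dim, text_dim, rng)

    def __call__(self, patch_features):
        # One projected "image token" per patch feature vector.
        tokens = []
        for feat in patch_features:
            hidden = [gelu(h) for h in linear(feat, self.w1, self.b1)]
            tokens.append(linear(hidden, self.w2, self.b2))
        return tokens


def build_input_sequence(image_tokens, text_embeddings):
    """Prepend image tokens to the prompt's text-token embeddings."""
    return image_tokens + text_embeddings


if __name__ == "__main__":
    adapter = MLPAdapter(vision_dim=8, text_dim=4)
    patches = [[0.1 * i for i in range(8)] for _ in range(3)]  # 3 fake patch features
    image_tokens = adapter(patches)
    sequence = build_input_sequence(image_tokens, [[0.0] * 4, [1.0] * 4])
    print(len(sequence), len(sequence[0]))  # 5 4
```

With `vision_dim=8` and `text_dim=4`, three patch features become three 4-dimensional image tokens, and prepending them to two text-token embeddings yields a five-token input sequence. Under the assumed schedule, only the small adapter has to learn the cross-modal mapping during alignment, so the pretrained image encoder and language model can stay frozen in that stage.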

Notably, LLaVA-Rad retains its performance advantage across multiple datasets and remains stable even when tested on unseen data. This success is attributed to its modular design and data-efficient architecture. The research team has also introduced CheXprompt, an automatic metric for scoring the factual accuracy of generated reports, to address a key evaluation challenge in clinical applications.
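One reason a factual-accuracy metric like CheXprompt is useful: lexical metrics such as ROUGE-L, cited earlier, only measure word overlap. The sketch below computes a simple sentence-level ROUGE-L F1 from the longest common subsequence (LCS) of whitespace tokens. It is a simplified illustration (lowercasing, no stemming, precision and recall weighted equally), not the exact implementation used in the LLaVA-Rad evaluation, and the example sentences are hypothetical.

```python
def lcs_length(a, b):
    """Length of the longest common subsequence of two token lists (dynamic programming)."""
    prev = [0] * (len(b) + 1)
    for x in a:
        curr = [0]
        for j, y in enumerate(b, start=1):
            if x == y:
                curr.append(prev[j - 1] + 1)
            else:
                curr.append(max(prev[j], curr[j - 1]))
        prev = curr
    return prev[-1]


def rouge_l_f1(candidate: str, reference: str) -> float:
    """Simplified ROUGE-L F1: lowercase whitespace tokens, precision and recall weighted equally."""
    cand = candidate.lower().split()
    ref = reference.lower().split()
    lcs = lcs_length(cand, ref)
    if lcs == 0:  # also covers empty inputs, avoiding division by zero
        return 0.0
    precision = lcs / len(cand)
    recall = lcs / len(ref)
    return 2 * precision * recall / (precision + recall)


reference = "No pleural effusion or pneumothorax"
generated = "Small pleural effusion or pneumothorax"
print(round(rouge_l_f1(generated, reference), 2))  # 0.8
```

The two findings say opposite things clinically (no effusion versus a small effusion), yet they share four of five tokens in order, so ROUGE-L scores the pair at 0.8. A metric that judges factual correctness would instead flag this as a clinically significant error.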

The release of LLaVA-Rad is a significant step toward applying foundation models in clinical settings, offering a lightweight, efficient way to generate radiology reports that is better aligned with clinical needs.

Project link: https://github.com/microsoft/LLaVA-Med

Key Highlights:

🌟 LLaVA-Rad is a small multimodal model from Microsoft Research and its academic collaborators, designed to generate radiology reports.

💻 The model was trained on 697,435 chest X-ray image-report pairs and delivers strong performance while remaining efficient.

🔍 CheXprompt, an accompanying automatic metric, scores the factual accuracy of generated reports, helping address a key evaluation challenge in clinical applications.