Alibaba's Tongyi Lab team recently open-sourced its latest multimodal model, R1-Omni. The model is trained with reinforcement learning with verifiable rewards (RLVR) and shows strong capabilities in processing audio and video information. A key highlight of R1-Omni is its transparency: it gives a clearer view of the role each modality plays in the model's decisions, particularly in tasks such as emotion recognition.

Since the launch of DeepSeek R1, the potential of reinforcement learning in large models has been explored more and more widely. RLVR offers a new optimization strategy for multimodal tasks and has been applied effectively to complex tasks such as geometric reasoning and visual counting. While current research largely focuses on combining images and text, Tongyi Lab's latest work extends the approach by combining RLVR with a full-modality video model, showing how broadly the technique can be applied.
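To make the idea of a "verifiable reward" more concrete, here is a minimal sketch of a rule-based reward for emotion recognition. It is an illustration under stated assumptions, not the Tongyi Lab implementation: the `<think>`/`<answer>` tag layout follows the convention popularized by DeepSeek R1-style training, and the function name `verifiable_reward`, the equal weighting of the two terms, and the example strings are all hypothetical.

```python
import re

# Assumed output layout: reasoning inside <think>, final label inside <answer>.
PATTERN = re.compile(
    r"^\s*<think>(.+?)</think>\s*<answer>(.+?)</answer>\s*$", re.DOTALL
)


def verifiable_reward(output: str, gold_label: str) -> float:
    """Score a model output with rules that can be checked automatically.

    Returns 0.0 if the output does not follow the assumed tag layout,
    1.0 if the format is right but the label is wrong,
    2.0 if both the format and the label are right.
    """
    match = PATTERN.match(output)
    if match is None:
        # No parsable answer, so neither term can be awarded.
        return 0.0

    format_reward = 1.0
    predicted = match.group(2).strip().lower()
    accuracy_reward = 1.0 if predicted == gold_label.strip().lower() else 0.0
    return format_reward + accuracy_reward


good = "<think>The voice trembles and the brow is furrowed.</think><answer>Fear</answer>"
wrong = "<think>The speaker smiles broadly.</think><answer>happy</answer>"
unformatted = "I think the person is afraid."

print(verifiable_reward(good, "fear"))         # 2.0
print(verifiable_reward(wrong, "fear"))        # 1.0
print(verifiable_reward(unformatted, "fear"))  # 0.0
```

Because the reward is computed by fixed rules against a ground-truth label rather than by a learned reward model, it can be checked automatically, which is what "verifiable" refers to.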

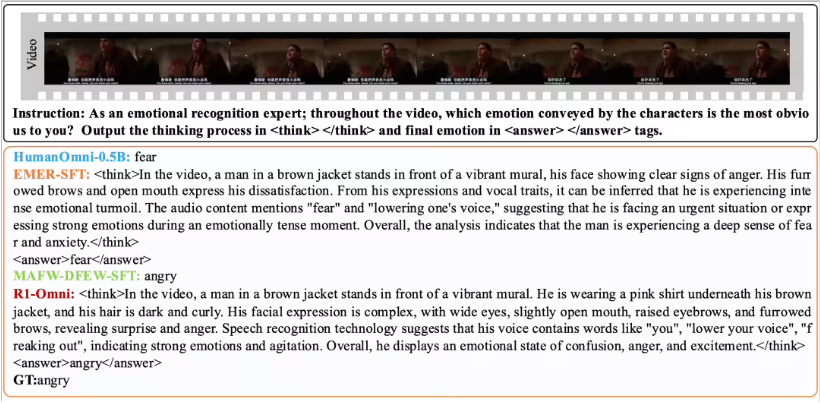

With RLVR, R1-Omni makes the influence of audio and video information easier to see. In emotion recognition tasks, for example, the model's output shows which audio and video cues played a key role in its judgment of the emotion. This transparency improves the model's reliability and gives researchers and developers better insight into its behavior.

To verify performance, the Tongyi Lab team compared R1-Omni with the original HumanOmni-0.5B model. R1-Omni achieved significant improvements on the DFEW and MAFW datasets, with an average increase of over 35%. Compared with a model trained by traditional supervised fine-tuning (SFT), R1-Omni also improved unweighted average recall (UAR) by over 10%. On out-of-distribution test sets such as RAVDESS, R1-Omni generalized well, with improvements in both weighted average recall (WAR) and UAR exceeding 13%. These results demonstrate the advantages of RLVR in improving reasoning capabilities and point to new directions for future multimodal model research.
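For readers unfamiliar with the two metrics, the following sketch shows how WAR and UAR are commonly computed in emotion recognition. WAR weights each class's recall by its share of the samples, which makes it equal to overall accuracy; UAR is the plain mean of per-class recall, so rare classes count as much as common ones. The function name `war_uar` and the toy labels are illustrative assumptions, not data from the R1-Omni experiments.

```python
from collections import defaultdict


def war_uar(y_true, y_pred):
    """Return (WAR, UAR) as fractions in [0, 1]."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("y_true and y_pred must be non-empty and equal in length")

    total = defaultdict(int)    # samples per true class
    correct = defaultdict(int)  # correctly predicted samples per true class
    for truth, pred in zip(y_true, y_pred):
        total[truth] += 1
        if truth == pred:
            correct[truth] += 1

    # WAR: per-class recall weighted by class frequency (overall accuracy).
    war = sum(correct.values()) / len(y_true)
    # UAR: unweighted mean of per-class recall.
    uar = sum(correct[c] / total[c] for c in total) / len(total)
    return war, uar


# Toy imbalanced example: 8 "happy" clips, 2 "sad" clips.
y_true = ["happy"] * 8 + ["sad"] * 2
y_pred = ["happy"] * 8 + ["happy", "sad"]

war, uar = war_uar(y_true, y_pred)
print(f"WAR={war:.2f}, UAR={uar:.2f}")  # WAR=0.90, UAR=0.75
```

In the toy example, the model gets 9 of 10 clips right (WAR 0.90) but recalls only half of the rare "sad" class, which pulls UAR down to 0.75. This is why both metrics are usually reported on imbalanced emotion datasets.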

The open-sourcing of R1-Omni makes it easier for researchers and developers to build on this work. We expect the model to bring further innovation and breakthroughs in future applications.