Google CEO Sundar Pichai announced at a press conference that Google has open-sourced its latest multimodal large language model, Gemma-3. This model is attracting significant attention due to its low cost and high performance.

Gemma-3 comes in four sizes: 1 billion, 4 billion, 12 billion, and 27 billion parameters. Notably, the largest 27-billion-parameter model requires only a single H100 GPU for efficient inference, whereas comparable models often need about ten times the computing power. This makes Gemma-3 one of the most computationally efficient high-performance models currently available.
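To make the single-GPU claim concrete, here is a rough back-of-the-envelope sketch of how much memory the weights alone occupy at each size. The bytes-per-parameter figures and the 80 GB capacity for one H100 are assumptions for illustration, not numbers from the announcement, and the estimate ignores activations and the KV cache.

```python
# Rough weight-memory estimate for the four Gemma-3 sizes.
# Assumptions (not from the article): bytes per parameter for each
# precision, and an 80 GB memory budget for a single H100.

PARAM_COUNTS = {"1B": 1e9, "4B": 4e9, "12B": 12e9, "27B": 27e9}
BYTES_PER_PARAM = {"bf16": 2.0, "int8": 1.0, "int4": 0.5}
H100_MEMORY_GB = 80.0  # assumed capacity of one H100


def weight_memory_gb(num_params: float, bytes_per_param: float) -> float:
    """Memory needed to hold the weights alone, in GB (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


def fits_on_one_gpu(num_params: float, bytes_per_param: float,
                    budget_gb: float = H100_MEMORY_GB) -> bool:
    """True if the weights alone fit in the assumed memory budget."""
    return weight_memory_gb(num_params, bytes_per_param) <= budget_gb


if __name__ == "__main__":
    for name, n in PARAM_COUNTS.items():
        row = ", ".join(
            f"{precision}: {weight_memory_gb(n, b):.1f} GB"
            for precision, b in BYTES_PER_PARAM.items()
        )
        print(f"{name:>3} -> {row}")
```

Under these assumptions, the 27-billion-parameter weights take about 54 GB in 16-bit precision, which leaves some headroom on an 80 GB card. The same weights in 32-bit precision would need 108 GB and would not fit.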

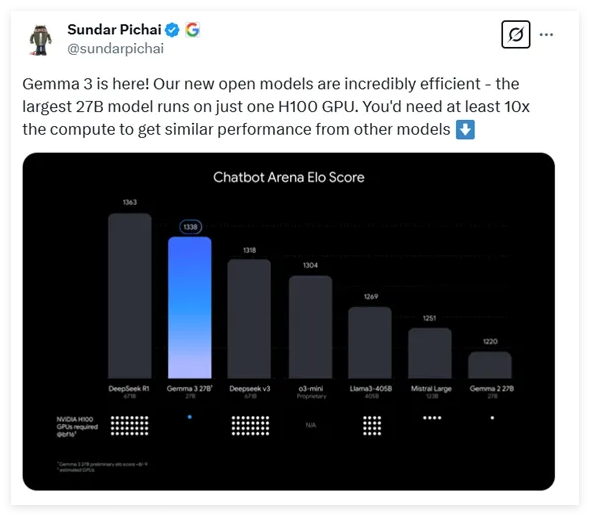

According to the latest benchmark data, Gemma-3 ranks near the top in comparisons of dialogue models, second only to the well-known DeepSeek model and ahead of several popular models such as OpenAI's o3-mini and Llama3.

Gemma-3 keeps the decoder-only Transformer design of its predecessors but adds a number of optimizations. To address the memory cost of long contexts, it interleaves local and global self-attention layers, which significantly reduces memory consumption.
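The memory saving from interleaving can be illustrated with a small sketch that counts how many token positions need cached keys and values across all layers. The layer count, the ratio of local to global layers, and the window size below are hypothetical values chosen for illustration; the article does not state them. The sketch assumes a local layer only keeps the most recent `window` positions, while a global layer keeps the whole context.

```python
def kv_cache_tokens(num_layers: int, context_len: int,
                    local_per_global: int, window: int) -> int:
    """Total token positions cached across all layers.

    Layers repeat a pattern of `local_per_global` local layers followed
    by one global layer. A local layer caches at most `window` positions;
    a global layer caches the full context. With local_per_global=0,
    every layer is global.
    """
    total = 0
    for layer in range(num_layers):
        is_global = (layer + 1) % (local_per_global + 1) == 0
        total += context_len if is_global else min(window, context_len)
    return total


if __name__ == "__main__":
    # Hypothetical configuration, for illustration only.
    layers, context = 48, 128 * 1024
    interleaved = kv_cache_tokens(layers, context, local_per_global=5, window=1024)
    all_global = kv_cache_tokens(layers, context, local_per_global=0, window=1024)
    print(f"interleaved: {interleaved:,} cached positions")
    print(f"all global:  {all_global:,} cached positions")
    print(f"ratio:       {interleaved / all_global:.3f}")
```

With these illustrative settings, the interleaved layout caches roughly a sixth of the positions that an all-global stack would at a 128K context, because only the global layers grow with context length.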

Gemma-3 supports a context length of up to 128K tokens, which makes it better suited to processing long texts. It is also multimodal and can process text and images together. It integrates a Vision Transformer-based visual encoder, which reduces the computational cost of image processing.

Gemma-3 was trained on a larger token budget than its predecessors; the 27-billion-parameter model was trained on 14 trillion tokens. Multilingual data was also added to strengthen the model's language coverage. It supports 140 languages, 35 of which are usable out of the box.

Gemma-3 is trained with knowledge distillation and, in the later stages of training, uses reinforcement learning to improve helpfulness, reasoning ability, and multilingual capabilities.
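For readers unfamiliar with knowledge distillation, the idea can be sketched as training a smaller student model to match a larger teacher's output distribution. The article does not describe the exact objective Gemma-3 uses, so the following is only the textbook soft-label formulation (KL divergence between teacher and student distributions for one token), with hypothetical function names and logits.

```python
import math


def log_softmax(logits, temperature=1.0):
    """Numerically stable log-softmax over a list of logits."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    log_z = m + math.log(sum(math.exp(x - m) for x in scaled))
    return [x - log_z for x in scaled]


def distillation_loss(student_logits, teacher_logits, temperature=1.0):
    """KL(teacher || student) for a single token's vocabulary distribution.

    Textbook formulation, assumed for illustration; not necessarily
    the objective used to train Gemma-3.
    """
    log_p = log_softmax(teacher_logits, temperature)  # teacher
    log_q = log_softmax(student_logits, temperature)  # student
    return sum(math.exp(lp) * (lp - lq) for lp, lq in zip(log_p, log_q))


if __name__ == "__main__":
    teacher = [2.0, 1.0, 0.1]   # hypothetical teacher logits
    student = [1.5, 1.2, 0.3]   # hypothetical student logits
    print(f"loss: {distillation_loss(student, teacher):.4f}")
```

The loss is zero when the student reproduces the teacher's distribution exactly and grows as the two diverge, so minimizing it pulls the student toward the teacher's predictions.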

Evaluations show that Gemma-3 performs strongly on multimodal tasks and reaches a reported 66% accuracy on long-text processing. It also places in the top tier in dialogue capability assessments, indicating broad strength across tasks.

Link: https://huggingface.co/collections/google/gemma-3-release-67c6c6f89c4f76621268bb6d

Key Highlights:

🔍 Gemma-3 is Google's latest open-sourced multimodal large language model, available in sizes from 1 billion to 27 billion parameters; the largest runs on a single H100 GPU, where comparable models often need about ten times the computing power.

💡 The architecture interleaves local and global self-attention layers to handle contexts of up to 128K tokens with less memory, and an integrated visual encoder lets the model process text and images together.

🌐 Gemma-3 supports 140 languages and, after distillation and reinforcement learning in training, performs strongly across dialogue, multimodal, and long-text tasks.