Kunlun Wanwei has officially open-sourced its Skywork R1V multi-modal reasoning model! It is the first industrial-grade multi-modal reasoning model to be open-sourced in China, and a significant milestone for China's AI capabilities in multi-modal understanding and reasoning. Model weights and technical reports are now publicly available!

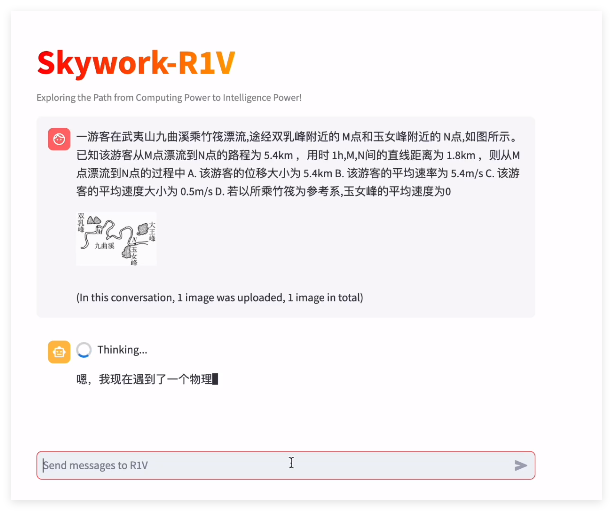

Imagine an AI model that can not only understand images but also reason about them step by step, the way a person would, to solve complex visual problems. This is no longer a scene from a science fiction movie; it is the capability Skywork R1V is built to deliver. The model acts like an "AI Sherlock Holmes": it works through complex situations with multi-step logical analysis, extracts the relevant meaning from large amounts of visual information, and then gives its answer. Whether the task is deciphering visual logic puzzles, solving challenging visual math problems, analyzing scientific phenomena in images, or assisting with diagnostic reasoning over medical images, Skywork R1V demonstrates strong capabilities.

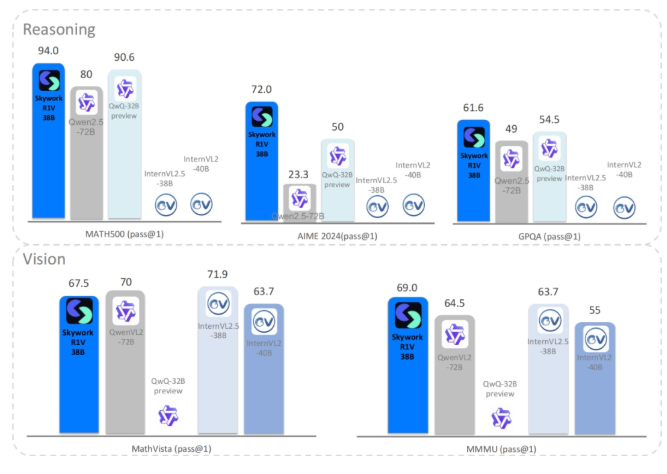

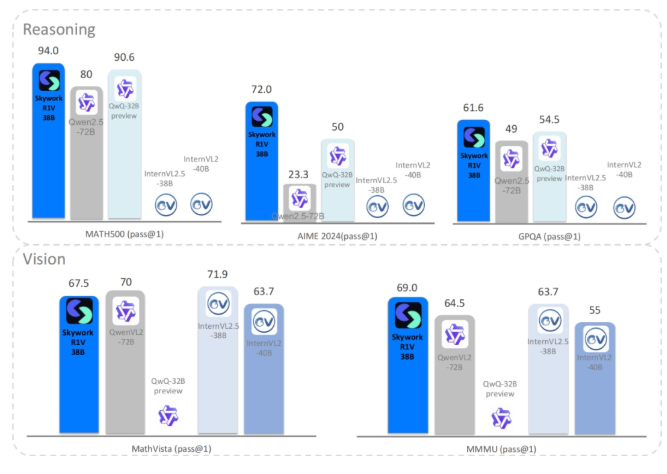

To gauge an AI model's "intelligence," benchmark results are the most convincing evidence! On text reasoning, Skywork R1V scored 94.0 on MATH500 and 72.0 on AIME, two widely used mathematics benchmarks. These scores indicate that the model can handle complex mathematical problems and rigorous logical reasoning. Even more notable is that it carries this reasoning ability into the visual domain, scoring 69 on MMMU and 67.5 on MathVista, two visual reasoning benchmarks. Together, these results show that Skywork R1V combines strong logical reasoning with strong mathematical analysis.

Kunlun Wanwei proudly highlights three key technological innovations behind the Skywork R1V model:

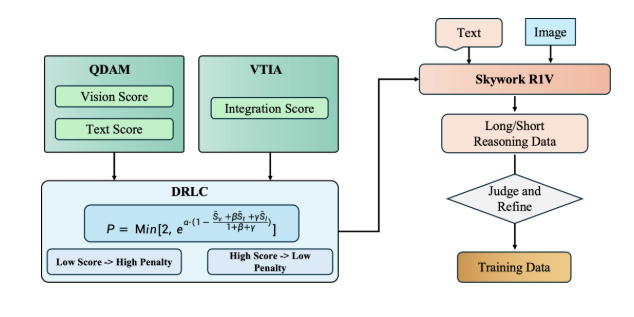

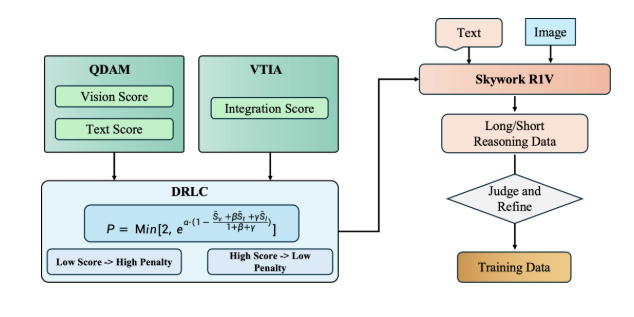

First is the efficient multi-modal transfer of text reasoning capabilities. The Kunlun Wanwei team used Skywork-VL's visual projector to carry the model's strong text reasoning capabilities over to visual tasks, without the heavy cost of retraining the language model or the visual encoder. The result is a "seamless transfer": the capabilities migrate to the visual domain while the original text reasoning ability is preserved!
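To make the role of a visual projector concrete, here is a minimal sketch of the general idea: a small trainable network maps vision-encoder features into the language model's embedding space, so the encoder and language model themselves can stay frozen. The two-layer MLP with ReLU, the toy dimensions, and all function names are assumptions made for illustration; the announcement does not describe the projector's actual architecture.

```python
import random

def make_linear(in_dim, out_dim, rng):
    """Return (weights, bias) for a randomly initialised linear layer."""
    scale = 1.0 / in_dim ** 0.5
    weights = [[rng.uniform(-scale, scale) for _ in range(in_dim)]
               for _ in range(out_dim)]
    bias = [0.0] * out_dim
    return weights, bias

def linear(x, layer):
    """Apply y = Wx + b to a single feature vector."""
    weights, bias = layer
    return [sum(w * v for w, v in zip(row, x)) + b
            for row, b in zip(weights, bias)]

def relu(x):
    return [max(0.0, v) for v in x]

def project_patches(patch_features, projector):
    """Map each image-patch vector into the language model's embedding space."""
    layer1, layer2 = projector
    return [linear(relu(linear(p, layer1)), layer2) for p in patch_features]

# Toy sizes (assumed); real models use far larger dimensions.
VISION_DIM, HIDDEN_DIM, LLM_DIM = 8, 16, 12
rng = random.Random(0)

# The projector is the only trainable part in this sketch.
projector = (make_linear(VISION_DIM, HIDDEN_DIM, rng),
             make_linear(HIDDEN_DIM, LLM_DIM, rng))

# Stand-ins for the outputs of a frozen vision encoder and frozen LLM embeddings.
patch_features = [[rng.gauss(0, 1) for _ in range(VISION_DIM)] for _ in range(4)]
text_embeddings = [[rng.gauss(0, 1) for _ in range(LLM_DIM)] for _ in range(3)]

visual_tokens = project_patches(patch_features, projector)
llm_input = visual_tokens + text_embeddings  # visual tokens precede the text tokens
```

Because only the projector's weights would be updated in such a setup, the language model's text reasoning behaviour is left untouched, which is the property the paragraph above emphasises.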

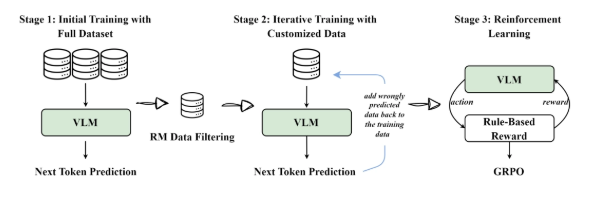

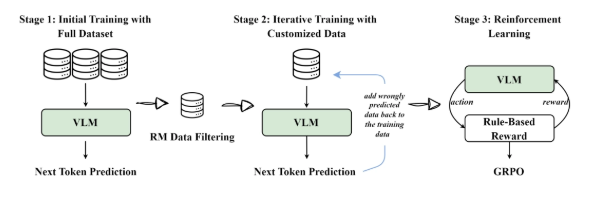

Second is multi-modal hybrid training (Iterative SFT+GRPO). This training method is like feeding the model a "mixed nutrient diet." By combining iterative supervised fine-tuning (SFT) with GRPO reinforcement learning, it aligns visual and text representations in phases and achieves efficient cross-modal fusion, significantly boosting the model's cross-modal capabilities! On the MMMU and MathVista benchmarks, Skywork R1V's performance rivals that of larger, closed-source models!
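For readers unfamiliar with GRPO (Group Relative Policy Optimization), its core step can be sketched briefly: several answers are sampled for the same prompt, each is scored, and every answer's advantage is its reward normalised against the group's mean and standard deviation. The sketch below shows only that normalisation. The 0/1 correctness reward, the use of the population standard deviation, and the function name are assumptions for illustration, not details taken from the Skywork R1V training recipe.

```python
from statistics import mean, pstdev

def group_relative_advantages(rewards, eps=1e-8):
    """Normalise each reward against its own sampling group.

    rewards: scores for several answers sampled from the same prompt.
    eps:     small constant that avoids division by zero when all rewards match.
    """
    mu = mean(rewards)
    sigma = pstdev(rewards)  # population std; an assumption, implementations differ
    return [(r - mu) / (sigma + eps) for r in rewards]

# Four sampled answers to one visual math question, scored 1.0 if correct
# and 0.0 otherwise (a hypothetical reward).
rewards = [1.0, 0.0, 0.0, 1.0]
advantages = group_relative_advantages(rewards)
```

Answers that beat their group's average receive a positive advantage and are reinforced, while below-average answers are discouraged. When every answer in a group gets the same reward, all advantages are zero and that group contributes no learning signal.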

Finally, there is adaptive-length chain-of-thought distillation. The Kunlun Wanwei team introduced an "intelligent braking" mechanism: the model adjusts the length of its reasoning chain to the complexity of the visual-text input, which avoids "overthinking." This preserves reasoning accuracy while significantly improving efficiency! Coupled with a multi-stage self-distillation strategy, it further improves the quality of the model's generated data and of its reasoning, making the model more adept at complex multi-modal tasks!
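The announcement does not say how the "intelligent braking" mechanism is implemented, so the following is only a hypothetical illustration of the general idea: derive a reasoning-length budget from an input complexity score, then keep for distillation only the correct reasoning chains that fit that budget. The complexity score, the linear budget rule, the token limits, and both function names are invented for this sketch.

```python
def reasoning_budget(complexity, min_tokens=128, max_tokens=2048):
    """Interpolate a token budget from a complexity score clamped to [0, 1]."""
    c = min(1.0, max(0.0, complexity))
    return int(min_tokens + c * (max_tokens - min_tokens))

def filter_chains(candidates, complexity):
    """Keep correct chains within the budget; else fall back to the shortest correct one.

    candidates: list of dicts with "tokens" (chain length) and "correct" (bool).
    """
    budget = reasoning_budget(complexity)
    correct = [c for c in candidates if c["correct"]]
    within = [c for c in correct if c["tokens"] <= budget]
    if within:
        return within
    # No correct chain fits the budget: keep the shortest correct chain, if any.
    return sorted(correct, key=lambda c: c["tokens"])[:1]

# Hypothetical sampled reasoning chains for one easy question.
candidates = [
    {"tokens": 200, "correct": True},
    {"tokens": 1500, "correct": True},   # correct, but far longer than needed
    {"tokens": 100, "correct": False},
]
kept = filter_chains(candidates, complexity=0.1)
```

For an easy input, the long but correct chain is dropped from the distillation set, so a model trained on the kept chains would see shorter reasoning for simple problems and longer reasoning only where the budget allows it.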

The open-sourcing of Skywork R1V gives AI researchers and developers in China and around the world a powerful multi-modal reasoning "tool." Kunlun Wanwei expects it to accelerate innovation in and adoption of multi-modal AI technologies, and to promote deeper integration of AI across industries, ushering in a more intelligent and brighter future!