The Jieyue Xingchen (StepFun) team has officially launched Step-R1-V-Mini, a new multi-modal reasoning model. The release marks a significant step forward in multi-modal collaborative reasoning. Step-R1-V-Mini accepts image and text input and produces text output, follows instructions well, and handles a broad range of tasks. It can accurately perceive images and carry out complex reasoning over them.

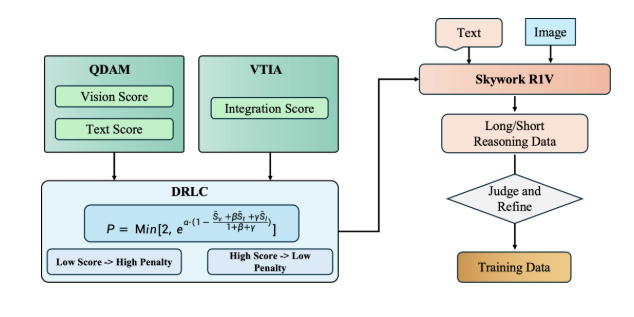

Step-R1-V-Mini is trained with multi-modal joint reinforcement learning built on Proximal Policy Optimization (PPO), with a verifiable reward mechanism introduced in the image space. This reward design targets the complexity of reasoning chains in image space, where models tend to confuse correlation with causation and reason incorrectly as a result. Compared with methods such as Direct Preference Optimization (DPO), the team reports that this approach gives Step-R1-V-Mini better generalization and robustness on complex image-space reasoning chains.
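To make the two ingredients concrete, here is a minimal, generic sketch of a rule-based verifiable reward feeding a PPO clipped surrogate objective. It is not Step-R1-V-Mini's training code. The answer format (`Answer: <value>`), the helper names, and the critic values are hypothetical, and the advantage is simplified to reward minus a value estimate for a single-step episode rather than full GAE.

```python
import math
import re


def verifiable_reward(response: str, ground_truth: str) -> float:
    """Rule-based reward: 1.0 if the final answer matches the reference, else 0.0.

    Hypothetical assumption: responses end with a line of the form 'Answer: <value>'.
    """
    match = re.search(r"Answer:\s*(.+?)\s*$", response.strip())
    if match is None:
        return 0.0  # an unparseable answer earns no reward
    predicted = match.group(1).strip().lower()
    return 1.0 if predicted == ground_truth.strip().lower() else 0.0


def ppo_clipped_objective(logp_new, logp_old, advantages, clip_eps=0.2):
    """Mean PPO clipped surrogate objective (to be maximized)."""
    total = 0.0
    for ln, lo, adv in zip(logp_new, logp_old, advantages):
        ratio = math.exp(ln - lo)  # pi_new(a|s) / pi_old(a|s)
        clipped = min(max(ratio, 1.0 - clip_eps), 1.0 + clip_eps)
        # Taking the minimum gives a pessimistic bound on the policy improvement.
        total += min(ratio * adv, clipped * adv)
    return total / len(advantages)


# Usage: two sampled responses to a counting question whose reference answer is "7".
responses = ["Three red and four blue objects are left.\nAnswer: 7", "Answer: 5"]
rewards = [verifiable_reward(r, "7") for r in responses]  # [1.0, 0.0]
values = [0.5, 0.5]  # hypothetical critic estimates
advantages = [r - v for r, v in zip(rewards, values)]  # [0.5, -0.5]
obj = ppo_clipped_objective([-1.0, -2.0], [-1.2, -1.9], advantages)  # ≈ 0.0738
```

Because the reward is computed by a checker rather than a learned preference model, it cannot be gamed by answers that merely look plausible. The clipping keeps each update close to the previous policy: in the usage above, the first sample's probability ratio (about 1.22) is clipped to 1.2, so the objective no longer rewards pushing it higher.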

To make full use of multi-modal synthetic data, Jieyue Xingchen also designed a large number of multi-modal data synthesis pipelines driven by environmental feedback, producing scalable multi-modal reasoning data for training. PPO-based reinforcement learning then improves the model's text reasoning and visual reasoning at the same time, avoiding the "seesaw" problem common in training, where gains in one capability come at the cost of the other.
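The team does not describe its synthesis pipelines in detail. The sketch below shows one hypothetical way synthetic data can carry verifiable labels: the environment generates a scene description, derives the answer from that description, and checks a model's answer against it. The object attributes, question template, and function names are illustrative assumptions, and rendering the scene into an image is omitted.

```python
import random
from dataclasses import dataclass

SHAPES = ["cube", "sphere", "cylinder"]
COLORS = ["red", "green", "blue"]


@dataclass(frozen=True)
class SceneObject:
    shape: str
    color: str
    x: float  # position in [0, 1); would drive rendering, unused for the answer
    y: float


def make_counting_sample(rng: random.Random, n_objects: int = 8) -> dict:
    """Build one synthetic counting question whose answer is known by construction."""
    objects = [
        SceneObject(rng.choice(SHAPES), rng.choice(COLORS), rng.random(), rng.random())
        for _ in range(n_objects)
    ]
    removed_shape = rng.choice(SHAPES)
    target_color = rng.choice(COLORS)
    question = f"Remove all {removed_shape}s. How many {target_color} objects remain?"
    # The label is computed from the scene itself, so it is verifiable.
    answer = sum(1 for o in objects if o.shape != removed_shape and o.color == target_color)
    return {
        "objects": objects,
        "removed_shape": removed_shape,
        "target_color": target_color,
        "question": question,
        "answer": str(answer),
    }


def environment_feedback(sample: dict, model_answer: str) -> bool:
    """Environment check: does the model's answer match the constructed label?"""
    return model_answer.strip() == sample["answer"]


# Usage: a seeded generator makes the synthetic data reproducible.
sample = make_counting_sample(random.Random(0))
print(sample["question"], "->", sample["answer"])
```

Since labels are derived rather than annotated, a generator like this can scale to as many samples as needed, and its feedback function can serve directly as a verifiable reward.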

Step-R1-V-Mini performs strongly on visual reasoning. It does well on several public leaderboards and ranks first among domestic (Chinese) models on the MathVision visual reasoning leaderboard. According to the team, these results reflect strong performance in visual reasoning, mathematical logic, and code.

Real-world examples illustrate what Step-R1-V-Mini can do.

In the "Identify Location from Image" scenario, a user submits a photo of Wembley Stadium. Step-R1-V-Mini quickly picks out the elements in the image and, by combining cues such as colors and objects (the stadium itself, a Manchester City logo), correctly infers that the location is Wembley Stadium. It even suggests which teams might be playing.

In the "Identify Recipe from Image" scenario, given a photo of a dish, Step-R1-V-Mini identifies the dish along with its ingredients and seasonings, and lists specific quantities such as "300g fresh shrimp, 2 scallions".

In the "Object Count" scenario, given an image of objects that differ in shape, color, and position, Step-R1-V-Mini identifies each object in turn, reasons about its color, shape, and position, and correctly determines how many objects remain.

Step-R1-V-Mini is now available on the Jieyue AI web portal, and developers and researchers can access it through an API on the Jieyue Xingchen open platform. Jieyue Xingchen describes Step-R1-V-Mini as a milestone in its multi-modal reasoning work and says it will keep advancing reasoning models to further the development of AI technology.

Jieyue AI Web Portal:

https://yuewen.cn/chats/new

Jieyue Xingchen Open Platform:

https://platform.stepfun.com/docs/llm/reasoning