In a recent study, researchers from Google, Carnegie Mellon University, and MultiOn investigated how synthetic data affects the training of large language models (LLMs). They found that synthetic data substantially improved the logical reasoning of LLMs, particularly on mathematical problems: training on both correct and incorrect synthetic solutions matched the performance of using roughly eight times as much correct-only synthetic data, an eightfold gain in data efficiency. The finding matters given the looming scarcity of training data.

An estimated 300 trillion tokens of high-quality text are currently available worldwide. However, with the growing popularity of models like ChatGPT, demand for training data is rising quickly and is projected to outstrip supply as early as 2026. Against this backdrop, synthetic data emerges as a crucial alternative.

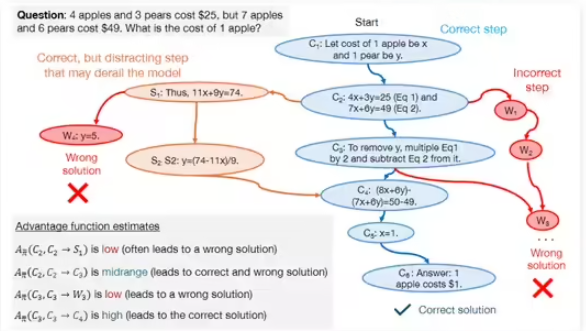

The research team explored two types of synthetic data: positive and negative. Positive data consists of correct problem solutions generated by high-performance models such as GPT-4 and Gemini 1.5 Pro, which serve as examples for other models to learn from. Relying solely on positive data has limitations, however: a model may learn to match surface patterns in the solutions without understanding the problem-solving process, which hurts its ability to generalize.
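One common way to obtain positive data of this kind is to sample several candidate solutions and keep only those whose final answer matches a known reference. The sketch below illustrates that idea under assumptions that do not come from the study: the function names `extract_answer` and `filter_positive`, the `Answer: <number>` convention, and the sample problems are all hypothetical.

```python
import re
from typing import List, Optional

# Hypothetical convention: each generated solution ends with "Answer: <number>".
ANSWER_RE = re.compile(r"Answer:\s*(-?\d+(?:\.\d+)?)\s*$")


def extract_answer(solution: str) -> Optional[float]:
    """Return the final numeric answer of a solution, or None if none is found."""
    match = ANSWER_RE.search(solution.strip())
    return float(match.group(1)) if match else None


def filter_positive(solutions: List[str], reference: float, tol: float = 1e-6) -> List[str]:
    """Keep only the solutions whose final answer matches the reference answer."""
    kept = []
    for solution in solutions:
        answer = extract_answer(solution)
        if answer is not None and abs(answer - reference) <= tol:
            kept.append(solution)
    return kept


# Made-up candidate solutions to the same toy problem (reference answer: 12).
candidates = [
    "3 boxes of 4 pens is 3 * 4 = 12 pens.\nAnswer: 12",
    "3 boxes of 4 pens is 3 + 4 = 7 pens.\nAnswer: 7",
    "There are many pens.",
]
positives = filter_positive(candidates, reference=12)
print(len(positives))  # 1
```

A filter like this checks only the final answer, so a solution with flawed intermediate steps can still pass. That is one way a model trained only on such data could pick up surface patterns rather than sound reasoning.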

To overcome these limitations, the team introduced negative data: solutions that contain incorrect problem-solving steps. This data helps models recognize common errors and thereby strengthens their logical reasoning. Negative data is harder to use because incorrect solutions can be misleading, so the researchers applied Direct Preference Optimization (DPO), which trains a model to prefer correct solutions over incorrect ones. This lets the model learn from mistakes and makes clear how much each step contributes to reaching the correct answer.
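To make the preference idea concrete, here is a minimal sketch of the standard DPO objective for a single pair of solutions, one correct ("chosen") and one incorrect ("rejected"). It is not the study's implementation, and any step-level weighting the researchers used is not reproduced here. The function name `dpo_loss`, the default `beta`, and the log-probability values are illustrative assumptions.

```python
import math


def dpo_loss(
    policy_chosen_logp: float,
    policy_rejected_logp: float,
    ref_chosen_logp: float,
    ref_rejected_logp: float,
    beta: float = 0.1,
) -> float:
    """Standard DPO loss for one (chosen, rejected) pair of sequence log-probabilities.

    loss = -log(sigmoid(beta * ((pi_c - ref_c) - (pi_r - ref_r))))
    """
    # How much more the trained policy likes each solution than the frozen reference does.
    chosen_margin = policy_chosen_logp - ref_chosen_logp
    rejected_margin = policy_rejected_logp - ref_rejected_logp
    z = beta * (chosen_margin - rejected_margin)
    # Numerically stable -log(sigmoid(z)) = log(1 + exp(-z)).
    if z >= 0:
        return math.log1p(math.exp(-z))
    return -z + math.log1p(math.exp(z))


# Made-up log-probabilities: the policy initially agrees with the reference.
baseline = dpo_loss(-5.0, -7.0, -5.0, -7.0)
print(baseline)  # equals log(2), about 0.693, when policy and reference agree

# After the policy shifts probability toward the correct solution, the loss drops.
improved = dpo_loss(-4.0, -8.0, -5.0, -7.0)
print(improved < baseline)  # True
```

The loss falls as the policy raises the likelihood of the correct solution and lowers that of the incorrect one, relative to the reference model. This is how negative examples contribute a training signal instead of being discarded.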

The study used models such as DeepSeek-Math-7B and LLaMa2-7B and tested them extensively on the GSM8K and MATH datasets. Results showed that, on mathematical reasoning tasks, LLMs fine-tuned on both positive and negative synthetic data reached the performance that would otherwise require about eight times as much positive-only data. The research demonstrates the potential of synthetic data for improving the logical reasoning of LLMs and points to new directions for future model training.