AI technology company Sync Labs recently announced its latest product, Lipsync-2, on Twitter. The company bills it as the "world's first zero-shot lip-sync model," one that preserves a speaker's unique style without any additional training or fine-tuning. According to Sync Labs, the model improves realism, expressiveness, control, quality, and speed, and works with real-person videos, animation, and AI-generated content.

Innovative Features of Lipsync-2

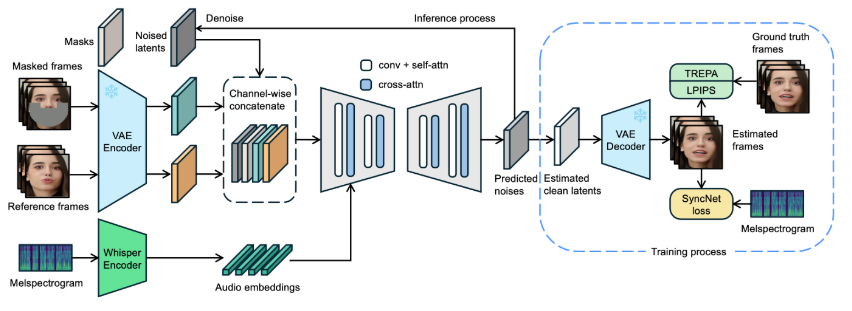

According to Sync Labs' April 1st Twitter post, the core highlight of Lipsync-2 is its "zero-shot" capability: the model can generate lip-sync that matches a speaker's unique style without first being trained or fine-tuned on footage of that particular speaker. Traditional lip-sync approaches typically require large amounts of speaker-specific training data, so removing that step could let content creators use the technology far more efficiently.

Sync Labs also said that Lipsync-2 advances the state of the art on several fronts at once, delivering greater realism and expressiveness whether the subject is a real person, an animated character, or an AI-generated figure.

New Control Feature: Temperature Parameter

In addition to its zero-shot capability, Lipsync-2 introduces a control setting called "temperature." This parameter lets users adjust the intensity of the lip-sync effect, from natural, subtle synchronization to a more exaggerated and expressive result, depending on their needs. The feature is currently in private testing and is being rolled out gradually to paying users.

Application Prospects: Multilingual Education and Content Creation

Sync Labs' April 3rd Twitter post further showcased Lipsync-2's potential applications, highlighting its "outstanding accuracy, style, and expressiveness" and envisioning a future where "every lecture can be presented in every language." Beyond video translation, the company points to uses such as editing spoken dialogue, re-animating characters, and producing realistic AI-generated user-generated content (UGC), with possible impact across education, entertainment, and marketing.

Industry Response and Future Expectations

The release of Lipsync-2 has quickly garnered industry attention. Sync Labs stated that the model is available for testing on the fal platform, accessible through fal's model library. Since its April 1st announcement, discussions about Lipsync-2 on Twitter have steadily increased, with many users expressing anticipation for its cross-domain application potential.

With Lipsync-2, Sync Labs reinforces its position as an innovator in AI video technology. As the model rolls out more widely, it may further lower the barrier to content creation and give audiences a more natural, immersive audio-visual experience.