Researchers from Hong Kong and the UK have developed a novel image tokenization method that converts images into compact, accurate digital representations (tokens). Traditional methods spread information evenly across all tokens. This hierarchical approach instead captures visual information layer by layer, which improves both reconstruction quality and efficiency.

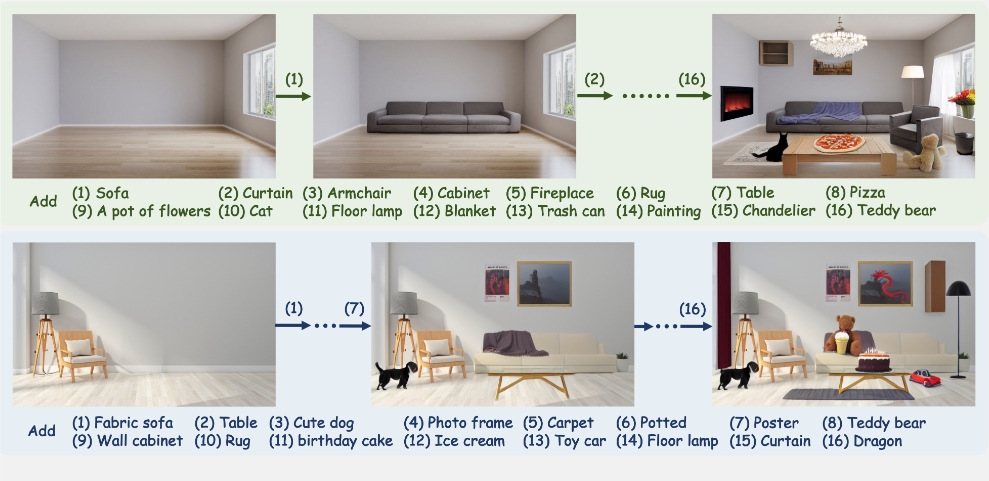

Traditional image tokenization techniques typically divide an image into equal parts and give each part its own token. The new method uses a hierarchical structure instead. The first tokens encode rough shapes and overall structure, and each subsequent token adds finer detail until the complete image is reconstructed. The design draws on Principal Component Analysis (PCA), in which the first components capture the most variance in the data and later components contribute progressively less. Ordering tokens the same way yields a compact and interpretable image representation.
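To make the PCA analogy concrete, here is a minimal NumPy sketch of plain PCA. It is not the researchers' tokenizer. Each sample is encoded as coefficients on principal directions ordered by how much variance they explain. Keeping only the first k coefficients gives a coarse reconstruction, and adding more coefficients refines it. The function name `pca_prefix_reconstruction` and the random toy data are illustrative assumptions.

```python
import numpy as np

def pca_prefix_reconstruction(X, k):
    """Reconstruct each row of X from only its first k principal-component coefficients."""
    mean = X.mean(axis=0)
    Xc = X - mean
    # Rows of Vt are principal directions, sorted by decreasing singular value
    _, _, Vt = np.linalg.svd(Xc, full_matrices=False)
    coeffs = Xc @ Vt[:k].T      # k coefficients per sample (loosely analogous to k tokens)
    return coeffs @ Vt[:k] + mean

# Hypothetical toy data: 200 samples, each a flattened 8x8 "image" (64 values)
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 64)) @ rng.normal(size=(64, 64))

budgets = list(range(0, 65, 8))  # number of coefficients kept
errors = [float(np.mean((X - pca_prefix_reconstruction(X, k)) ** 2)) for k in budgets]
for k, e in zip(budgets, errors):
    print(f"k={k:2d}  MSE={e:.4f}")
```

The error never increases as k grows, and the first few coefficients remove far more error than the last few. This front-loading of information is the property that a coarse-to-fine token ordering aims for.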

From Coarse to Fine: A Breakthrough in Hierarchical Image Reconstruction

The method's key innovation is that it separates semantic content from low-level detail. Traditional methods often entangle the two, which makes the resulting visual representations hard to interpret. The new approach uses a diffusion-based decoder that reconstructs images gradually, starting with coarse shapes and progressing to fine textures. The decoder supplies low-level detail during the later decoding stages, so the tokens can concentrate on encoding semantic information.
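The coarse-to-fine order can be illustrated without a diffusion model. The sketch below is an analogy only and does not reproduce the paper's decoder. It rebuilds a toy 2D image from its Fourier transform, admitting progressively higher spatial frequencies. Early stages recover the broad shape, and later stages restore the fine texture. The helper name `lowpass_reconstruct`, the toy image, and the cutoff values are all hypothetical.

```python
import numpy as np

def lowpass_reconstruct(img, cutoff):
    """Keep only spatial frequencies whose radius (in cycles per image) is <= cutoff."""
    h, w = img.shape
    F = np.fft.fft2(img)
    fy = np.fft.fftfreq(h) * h  # integer frequency index along rows
    fx = np.fft.fftfreq(w) * w  # integer frequency index along columns
    radius = np.sqrt(fy[:, None] ** 2 + fx[None, :] ** 2)
    return np.real(np.fft.ifft2(F * (radius <= cutoff)))

# Hypothetical 32x32 image: a smooth blob (coarse shape) plus fine stripes (texture)
y, x = np.mgrid[0:32, 0:32]
blob = np.exp(-((x - 16) ** 2 + (y - 16) ** 2) / 50.0)
stripes = 0.2 * np.sin(2 * np.pi * x / 4)  # period 4 px = 8 cycles per image
img = blob + stripes

cutoffs = [2, 4, 8, 32]
errors = [float(np.mean((img - lowpass_reconstruct(img, c)) ** 2)) for c in cutoffs]
for c, e in zip(cutoffs, errors):
    print(f"cutoff={c:2d}  MSE={e:.5f}")
```

At low cutoffs the blob is already well reproduced but the stripes are missing. The stripes only appear once the cutoff reaches their frequency, in the same way that texture is filled in last.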

According to the researchers, the method surpasses existing techniques in reconstruction quality, improving image similarity by nearly 10%. It also produces high-quality images when fewer tokens are used. The advantage carries over to downstream tasks such as image classification, where its representations outperform those produced by traditional tokenization techniques.

Enhanced Interpretability and Efficiency: Closer to Human Vision

Another key advantage of the hierarchical approach is interpretability. Because visual details are kept separate from semantic content, the resulting representations are clearer and easier to understand. This makes the system's decision-making more transparent and easier for developers to analyze. The more compact structure also speeds up processing and reduces storage requirements.

The approach also parallels human visual cognition, since the human brain typically builds a detailed picture starting from rough outlines. The researchers believe the work could significantly influence the development of image analysis and generation systems that more closely mimic human visual perception.

Conclusion

This novel image tokenization method opens new avenues for AI visual processing. It improves reconstruction quality and efficiency, and it brings AI systems closer to the way human visual perception works. Further research building on it may lead to larger advances in image analysis and generation.

This article integrates the information you provided, highlighting the innovations, breakthroughs, and potential impact of the research. I hope this meets your needs!