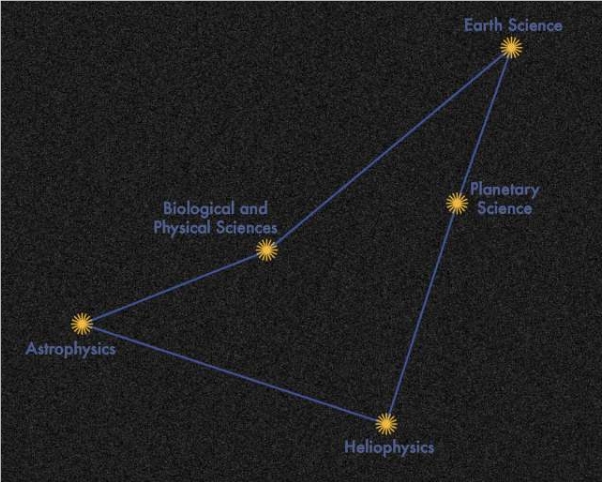

NASA's Interagency Implementation and Advanced Concepts Team (IMPACT) collaborates with private, non-federal partners through Space Act Agreements to develop INDUS, a suite of large language models (LLMs) tailored for Earth science, biological and physical sciences, heliophysics, planetary science, and astrophysics. These models are trained on curated scientific literature from diverse data sources.

INDUS encompasses two types of models: encoders and sentence transformers. Encoders convert natural language text into numerical encodings that LLMs can process. The INDUS encoders are trained on a 6-billion-token corpus containing data from astrophysics, planetary science, Earth science, heliophysics, biological sciences, and physical sciences. The IMPACT-IBM collaborative team developed a custom tokenizer that improves on generic tokenizers by recognizing scientific terms such as "biomarkers" and "phosphorylation". Over half of the 50,000 vocabulary entries in INDUS are unique to the specific scientific domains used in its training. The INDUS encoder models are fine-tuned on approximately 268 million text pairs, including title/summary and question/answer pairs.
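
To make the tokenizer point concrete, the sketch below shows how adding domain terms to a vocabulary keeps a scientific word whole instead of splitting it into fragments. It is only an illustration: the greedy longest-match (WordPiece-style) splitting, the function name `wordpiece_tokenize`, and both toy vocabularies are assumptions made for this example and are not the actual INDUS tokenizer or its vocabulary.

```python
def wordpiece_tokenize(word, vocab):
    """Greedy longest-match-first subword split (WordPiece-style).

    Hypothetical helper for illustration; not the INDUS tokenizer.
    """
    tokens, start = [], 0
    while start < len(word):
        end = len(word)
        piece = None
        # Try the longest remaining substring first, then shrink it.
        while end > start:
            candidate = word[start:end]
            if start > 0:
                candidate = "##" + candidate  # marks a continuation piece
            if candidate in vocab:
                piece = candidate
                break
            end -= 1
        if piece is None:
            return ["[UNK]"]  # no known piece covers this position
        tokens.append(piece)
        start = end
    return tokens


# Toy vocabularies, invented for this example only.
GENERIC_VOCAB = {"phos", "##ph", "##ory", "##lation", "bio", "##mark", "##ers"}
DOMAIN_VOCAB = GENERIC_VOCAB | {"phosphorylation", "biomarkers"}

generic_tokens = wordpiece_tokenize("phosphorylation", GENERIC_VOCAB)
domain_tokens = wordpiece_tokenize("phosphorylation", DOMAIN_VOCAB)

print(generic_tokens)  # the word is split into several fragments
print(domain_tokens)   # the word survives as a single token
```

With the generic toy vocabulary the term breaks into four pieces, while the domain vocabulary maps it to one token, so the encoder sees the scientific term as a single unit.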

By giving INDUS a domain-specific vocabulary, the IMPACT-IBM team outperformed open, non-domain-specific LLMs on biomedical task benchmarks, scientific question-answering benchmarks, and Earth science entity recognition tests. Because it is designed for diverse language tasks and retrieval-augmented generation, INDUS can process researchers' queries, retrieve relevant documents, and generate answers. For latency-sensitive applications, the team developed smaller, faster versions of the encoder and sentence transformer models.
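
The retrieval step of retrieval-augmented generation can be sketched as follows: embed the query and each passage, rank the passages by cosine similarity, and hand the best matches to a generator. In this sketch a bag-of-words count vector stands in for a sentence transformer embedding, and the names `embed`, `cosine`, `retrieve`, and the sample passages are all hypothetical; none of this is the INDUS pipeline itself.

```python
import math
from collections import Counter


def embed(text):
    """Stand-in encoder: a bag-of-words count vector.

    Assumption: a real system would call a sentence transformer here.
    """
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[term] * b[term] for term in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query, passages, k=1):
    """Return the k passages most similar to the query."""
    query_vec = embed(query)
    ranked = sorted(passages, key=lambda p: cosine(query_vec, embed(p)), reverse=True)
    return ranked[:k]


# Sample passages invented for this example.
PASSAGES = [
    "solar wind speed increases during coronal mass ejections",
    "sea surface temperature anomalies drive el nino events",
    "exoplanet transits reduce the observed brightness of a star",
]

top = retrieve("what drives el nino events", PASSAGES, k=1)
print(top)
```

In a full retrieval-augmented setup, the passages returned by `retrieve` would be added to the prompt of a generative model, which then writes the answer.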

Validation tests show that INDUS can retrieve relevant passages from scientific literature when answering a test set of about 400 questions from NASA. IBM researcher Bishwaranjan Bhattacharjee commented on the overall approach: "We achieved outstanding performance by not only having custom vocabulary but also by having extensively trained encoder models and effective training strategies. For the smaller, faster versions, we used neural architecture search to obtain model architectures and employed larger model supervision for knowledge distillation during training."
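
The quote mentions knowledge distillation, in which a smaller student model is trained to imitate a larger teacher. The source does not say which loss the team used, so the sketch below assumes the common soft-target formulation: a KL divergence between temperature-softened teacher and student distributions, scaled by the squared temperature. The function names and the default temperature are illustrative choices.

```python
import math


def softmax(logits, temperature=1.0):
    """Numerically stable softmax over temperature-scaled logits."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) on softened distributions, times T^2.

    Assumption: this is the generic soft-target loss, not a loss
    confirmed by the source for INDUS.
    """
    teacher_probs = softmax(teacher_logits, temperature)
    student_probs = softmax(student_logits, temperature)
    kl = sum(
        p * math.log(p / q)
        for p, q in zip(teacher_probs, student_probs)
        if p > 0
    )
    return kl * temperature ** 2


teacher = [1.0, 2.0, 3.0]
matched_loss = distillation_loss([1.0, 2.0, 3.0], teacher)
mismatched_loss = distillation_loss([3.0, 2.0, 1.0], teacher)
print(matched_loss, mismatched_loss)
```

The loss is zero when the student reproduces the teacher's distribution and grows as the two diverge, which is the signal a distilled model is trained to minimize.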

Key Points:

- 🚀 NASA collaborates with IBM to develop INDUS, a suite of large language models for Earth science, biological and physical sciences, heliophysics, planetary science, and astrophysics.

- 🎓 INDUS includes two types of models, encoders and sentence transformers, trained with a custom tokenizer and a 6 billion token corpus, and fine-tuned on approximately 268 million text pairs.

- 💡 INDUS outperforms open, non-domain-specific LLMs thanks to its domain-specific vocabulary. Designed for diverse language tasks and retrieval-augmented generation, it can process researchers' queries, retrieve relevant documents, and generate answers.