Generative Rendering: 2D Mesh

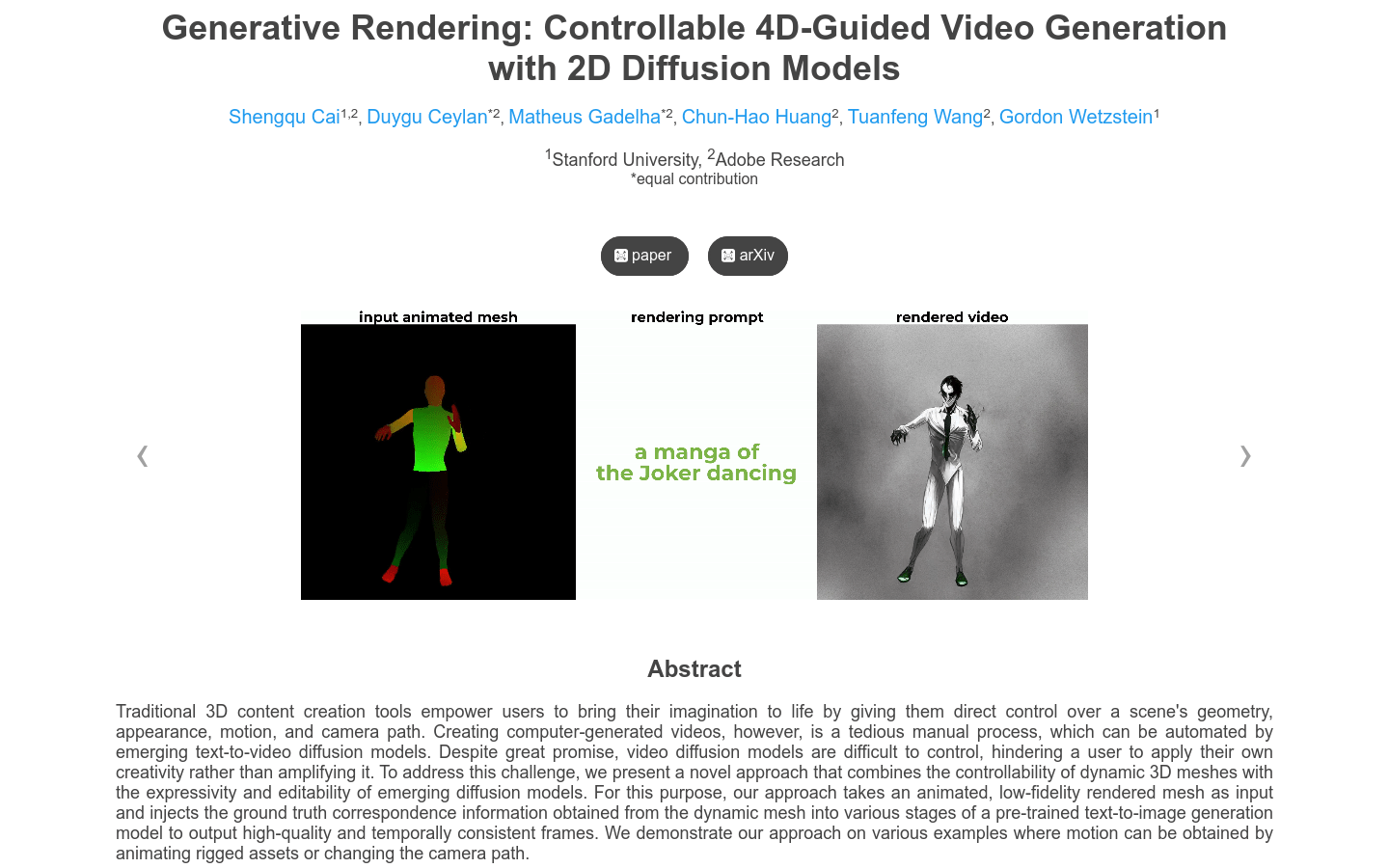

Control video generation model
