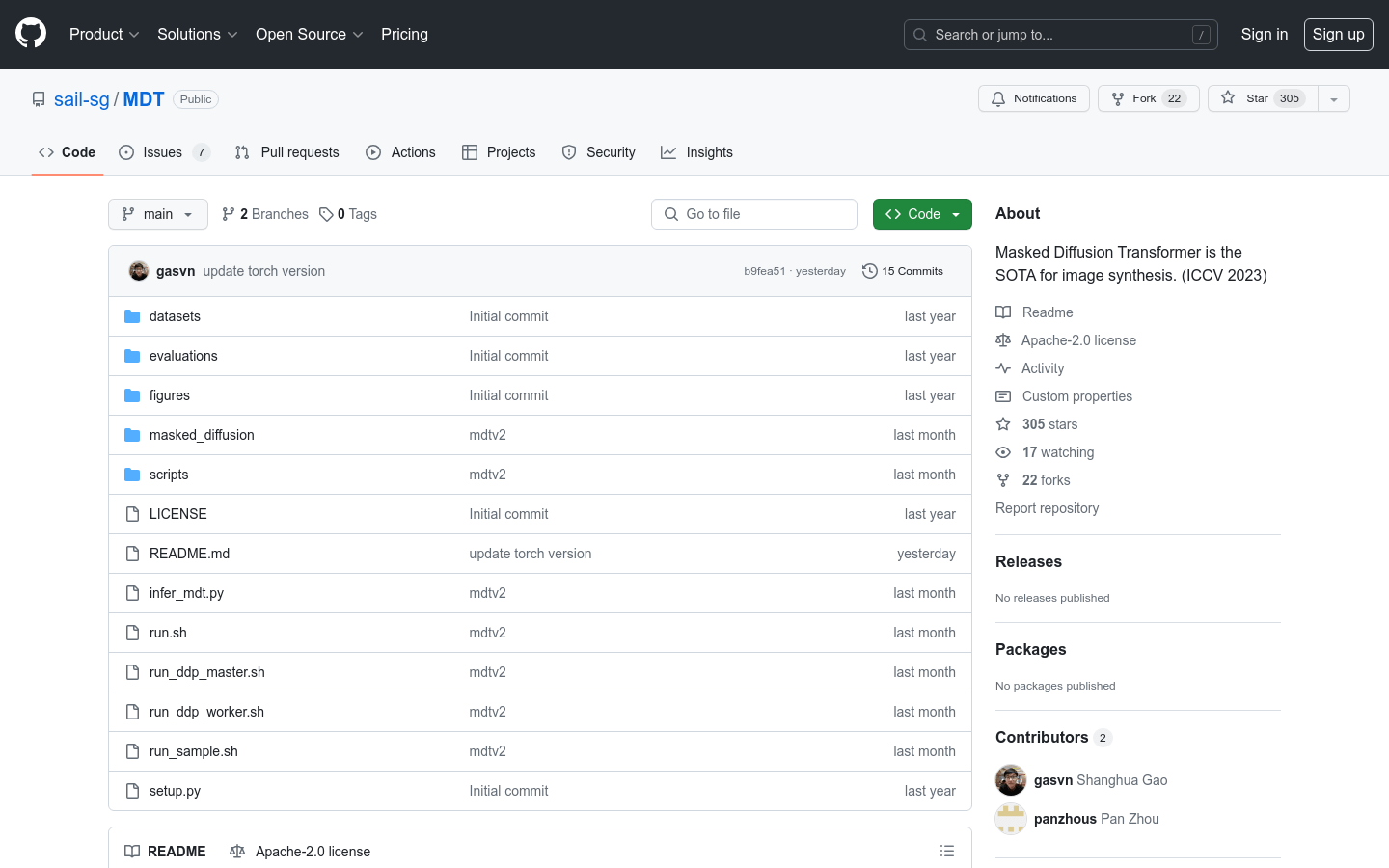

Masked Diffusion Transformer (MDT)

Masked Diffusion Transformer (MDT) is an image synthesis method presented at ICCV 2023, where it reported state-of-the-art (SOTA) results.

Masked Diffusion Transformer (MDT) Visits Over Time

Monthly Visits: 521,149,929

Bounce Rate: 35.96%

Pages per Visit: 6.1

Visit Duration: 00:06:29