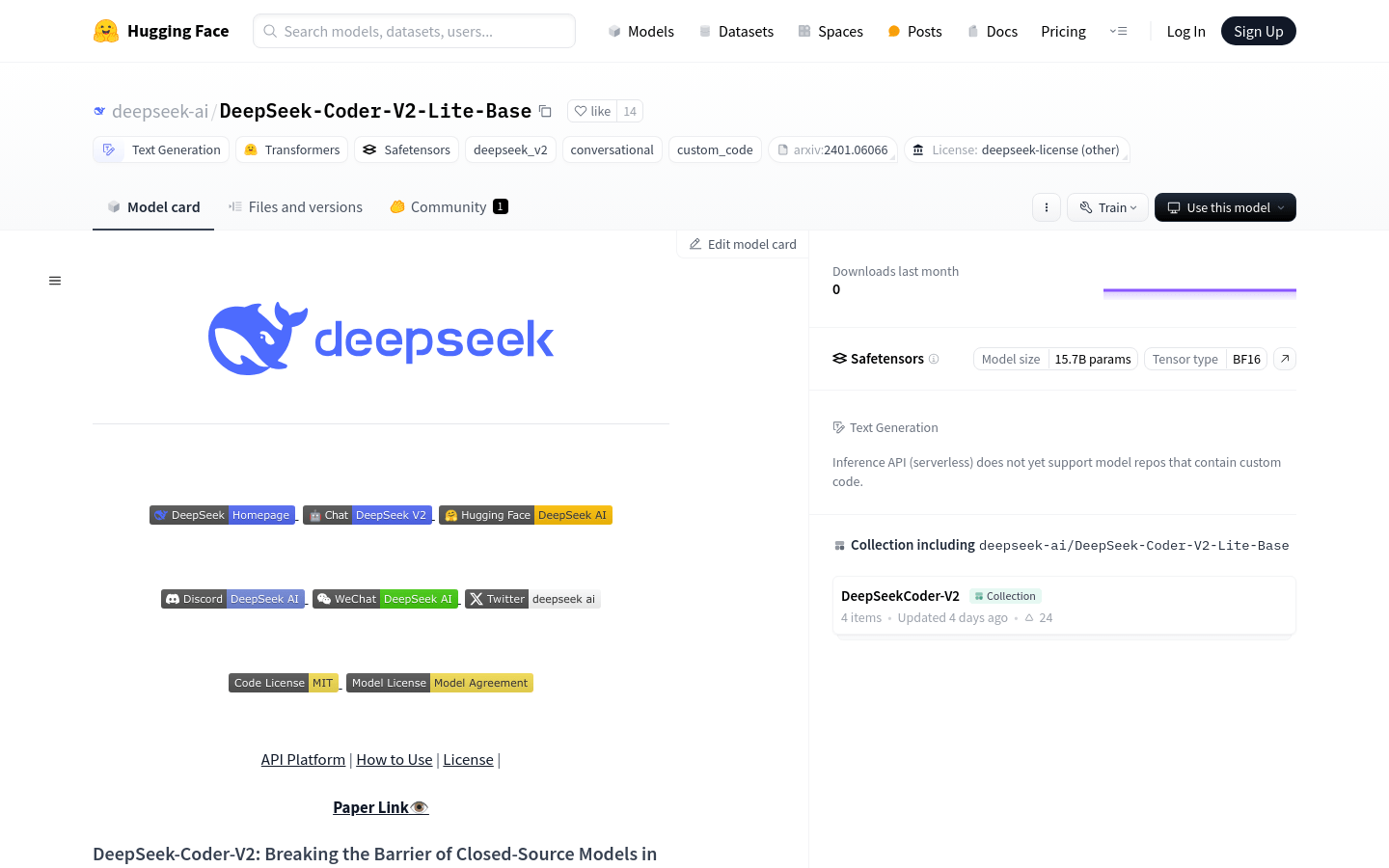

DeepSeek-Coder-V2-Lite-Base

An open-source code language model designed to enhance programming and mathematical reasoning abilities.

DeepSeek-Coder-V2-Lite-Base Visits Over Time

| Metric | Value |
| --- | --- |
| Monthly Visits | 25,633,376 |
| Bounce Rate | 44.05% |
| Pages per Visit | 5.8 |
| Visit Duration | 00:04:53 |