AI technology is advancing at breakneck speed and producing a steady stream of striking innovations. One recent example that went viral on social media is "Diffree." It is not a newly launched mobile game but an AI image-editing technique that has designers and photographers cheering.

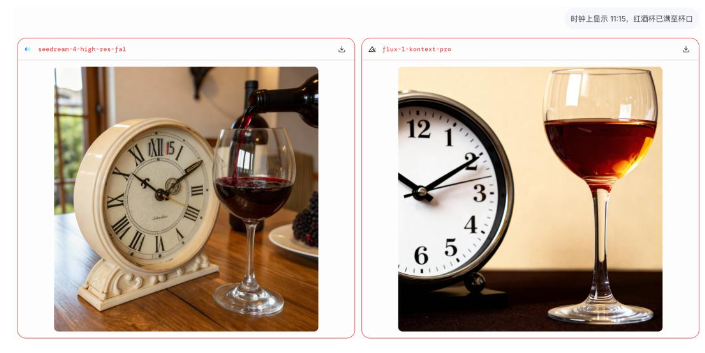

Diffree adds new objects to an image based on a text description, and the additions blend in so well that they are hard to spot. You do not need to draw masks or supply templates by hand: the model predicts the position and shape of the new object on its own and integrates it into the scene.

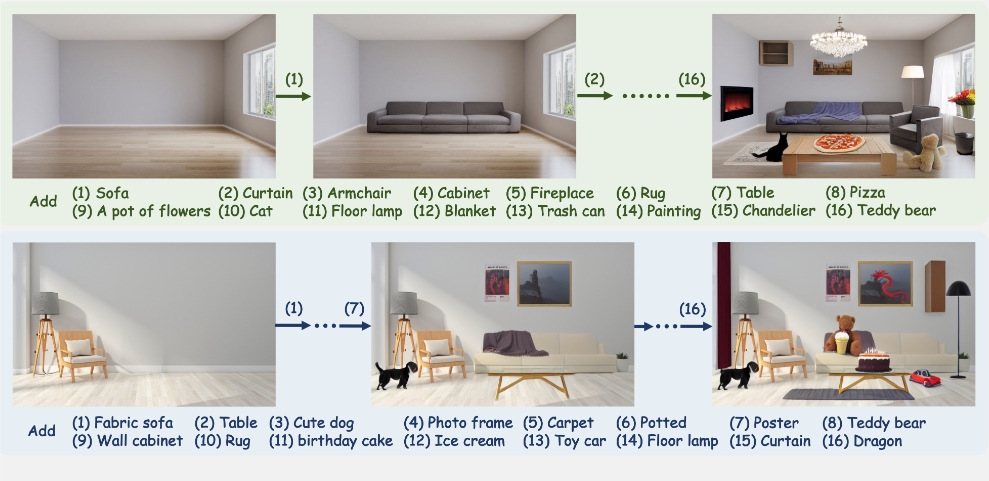

Imagine you are an interior designer who needs to show clients how different decorations would look. Previously, you might have had to edit the photos by hand or reshoot them. Now you only need to tell Diffree what you want, and it adds the new decoration to the photo so that the result looks natural.

The core of this technology is that it is "text-guided." You enter a simple description, such as "place a pillow on the sofa," and Diffree generates a pillow on the sofa. The lighting, tone, and color of the new object are also kept consistent with the original image.

So how does Diffree achieve this? It builds on a "text-to-image" (T2I) model, which has learned through training to generate image content from text descriptions. Specifically, Diffree uses the diffusion model "Stable Diffusion" together with an additional mask prediction module that predicts where the new object should go and what shape it should take.
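To make the role of the mask concrete, here is a minimal sketch of how a predicted mask could be used to keep the background untouched: pixels inside the mask come from the generated image, and pixels outside it come from the original. This is an illustration under assumptions, not Diffree's actual code. The `composite` function, the toy 2x2 grayscale images, and the soft mask values are all hypothetical.

```python
from typing import List

# Toy grayscale image: rows of pixel intensities in [0, 1] (hypothetical format).
Image = List[List[float]]


def composite(original: Image, generated: Image, mask: Image) -> Image:
    """Blend two images pixel by pixel.

    Where mask is 1.0 the generated pixel is used, where it is 0.0 the
    original pixel is kept, and values in between mix the two.
    """
    return [
        [m * g + (1.0 - m) * o for o, g, m in zip(o_row, g_row, m_row)]
        for o_row, g_row, m_row in zip(original, generated, mask)
    ]


# Assumed toy data: a uniform background, a brighter generated image,
# and a mask that selects only the top-right pixel for the new object.
original = [[0.2, 0.2], [0.2, 0.2]]
generated = [[0.9, 0.9], [0.9, 0.9]]
mask = [[0.0, 1.0], [0.0, 0.0]]

result = composite(original, generated, mask)
print(result)
```

Only the masked pixel changes in this toy case, which is the sense in which a mask can protect the rest of the photo.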

To train Diffree, the researchers built a synthetic dataset called "OABench" by using image inpainting to remove objects from real-world images. It contains 74K tuples, each consisting of an original image, an inpainted version with the object removed, the object's mask, and a text description of the object. Training on this data teaches Diffree to add objects accurately while keeping the background consistent.
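One way to picture a single dataset entry is as a small record that can be turned into a training pair: the image with the object removed plus the description form the input, and the original image plus the mask form the target. The sketch below is an assumption about how such data might be organized, not the released OABench format. The class name, field names, and file names are hypothetical.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class OABenchSample:
    """Hypothetical record for one object-addition training example."""
    original_image: str   # real photo that still contains the object
    inpainted_image: str  # same photo with the object removed by inpainting
    object_mask: str      # mask marking where the object was
    description: str      # text naming the object


def to_training_pair(sample: OABenchSample) -> Tuple[Tuple[str, str], Tuple[str, str]]:
    """Arrange a sample as (model input, training target).

    Input: the object-free image and the text prompt.
    Target: the image with the object, and the mask to predict.
    """
    model_input = (sample.inpainted_image, sample.description)
    target = (sample.original_image, sample.object_mask)
    return model_input, target


# Assumed example entry; the file names are placeholders.
sample = OABenchSample(
    original_image="sofa_with_pillow.png",
    inpainted_image="sofa_without_pillow.png",
    object_mask="pillow_mask.png",
    description="pillow",
)
model_input, target = to_training_pair(sample)
print(model_input, target)
```

Seen this way, "adding" an object is learned by reversing its removal: the model sees the emptied scene and is asked to recover the original.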

Diffree's abilities do not stop there. It can add not only a single object but also several different objects to the same image, one after another, with the background kept consistent after each addition. It is like an advanced game of "Spot the Difference" in which the AI adds new elements without disturbing anything else.
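Adding several objects can be thought of as a loop in which the output of one edit becomes the input of the next. The sketch below shows only that control flow. The `toy_add_object` function is a hypothetical stand-in for the real model: it works on a tiny grid of labels instead of pixels and simply fills the first empty cell.

```python
from typing import Callable, List, Tuple

Grid = List[List[str]]    # toy "image": a grid of labels, "." means empty
Mask = List[List[bool]]   # True where the new object was placed
AddFn = Callable[[Grid, str], Tuple[Grid, Mask]]


def add_objects(image: Grid, prompts: List[str], add_object: AddFn) -> Grid:
    """Apply one text-guided addition per prompt, feeding each result forward."""
    for prompt in prompts:
        image, _mask = add_object(image, prompt)
    return image


def toy_add_object(image: Grid, prompt: str) -> Tuple[Grid, Mask]:
    """Hypothetical stand-in for the model: put the object in the first empty cell."""
    new_image = [row[:] for row in image]           # copy, so the input is not modified
    mask = [[False] * len(row) for row in image]
    for r, row in enumerate(new_image):
        for c, cell in enumerate(row):
            if cell == ".":
                new_image[r][c] = prompt
                mask[r][c] = True
                return new_image, mask
    return new_image, mask                          # no free cell: image unchanged


scene = [["sofa", "."], [".", "wall"]]
result = add_objects(scene, ["pillow", "lamp"], toy_add_object)
print(result)
```

In this toy run the existing "sofa" and "wall" cells are never touched, which mirrors the idea that each addition leaves the background as it was.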

Through a series of experiments, the researchers report that Diffree outperforms other text-guided and mask-guided methods in success rate, the reasonableness of the added object, quality, diversity, and relevance.

The advent of Diffree is not only a technical leap but also a boon for designers, photographers, and ordinary users. It lowers the barrier to image editing and lets anyone become a creator. In the future, Diffree may also be combined with other AI technologies to open up further application scenarios.

Project address: https://opengvlab.github.io/Diffree/