Multimodal generative models are at the forefront of current artificial intelligence research. They integrate visual and textual data to build systems that can handle a wide range of tasks, from generating highly detailed images from text descriptions to understanding and reasoning across different data types. The result is a more interactive and capable class of AI systems that combine vision and language.

A key challenge in this field is developing autoregressive (AR) models that can generate realistic images based on textual descriptions. While diffusion models have made significant strides in this area, AR models have lagged behind, particularly in terms of image quality, resolution flexibility, and the ability to handle various visual tasks. This gap has prompted researchers to seek innovative methods to enhance the capabilities of AR models.

Currently, the field of text-to-image generation is largely dominated by diffusion models, which excel in producing high-quality, visually appealing images. However, AR models like LlamaGen and Parti struggle in this regard. They often rely on complex encoder-decoder architectures and typically can only generate images at fixed resolutions. This limitation significantly reduces their flexibility and effectiveness in producing diverse, high-resolution outputs.

To break this bottleneck, researchers from the Shanghai AI Lab and the Chinese University of Hong Kong have introduced Lumina-mGPT, an advanced AR model designed to overcome these limitations. Lumina-mGPT is based on a decoder-only transformer architecture and employs a multimodal generative pre-training (mGPT) approach. The model integrates visual and language tasks into a unified framework, aiming to achieve photorealistic image generation on par with diffusion models while maintaining the simplicity and scalability of AR methods.

Lumina-mGPT takes a comprehensive approach to improving image generation, with flexible progressive supervised fine-tuning (FP-SFT) as its core strategy. FP-SFT trains the model in stages: it first learns general visual concepts at lower resolutions, then moves to higher resolutions where finer details are introduced. The model also introduces an explicit image representation that removes the ambiguity caused by variable image resolutions and aspect ratios. It does this by adding height and width indicators before the image tokens and an end-of-line marker after each row of tokens.
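To make the explicit image representation more concrete, here is a minimal Python sketch of how a 2D grid of image tokens could be flattened into a 1D sequence with height and width indicators and end-of-line markers. The token names (`<image>`, `<h=...>`, `<w=...>`, `<eol>`, `</image>`) and both helper functions are hypothetical and chosen only for illustration; the article does not describe the model's actual vocabulary or layout.

```python
from typing import List

# Hypothetical special tokens; the real vocabulary entries are not given in the article.
BOI, EOI, EOL = "<image>", "</image>", "<eol>"


def serialize_image(grid: List[List[int]]) -> List[str]:
    """Flatten a 2D grid of image token ids into a 1D token sequence."""
    height = len(grid)
    width = len(grid[0]) if grid else 0
    if any(len(row) != width for row in grid):
        raise ValueError("all rows must have the same width")
    # Height and width indicators come first, so the shape is known up front.
    seq = [BOI, f"<h={height}>", f"<w={width}>"]
    for row in grid:
        seq.extend(str(token) for token in row)
        seq.append(EOL)  # marks the end of one row of image tokens
    seq.append(EOI)
    return seq


def parse_image(seq: List[str]) -> List[List[int]]:
    """Rebuild the 2D grid from a sequence produced by serialize_image."""
    if len(seq) < 4 or seq[0] != BOI or seq[-1] != EOI:
        raise ValueError("sequence is not wrapped in image tokens")
    height = int(seq[1][3:-1])  # "<h=12>" -> 12
    width = int(seq[2][3:-1])   # "<w=8>"  -> 8
    grid, row = [], []
    for token in seq[3:-1]:
        if token == EOL:
            grid.append(row)
            row = []
        else:
            row.append(int(token))
    if len(grid) != height or any(len(r) != width for r in grid):
        raise ValueError("token layout does not match the declared size")
    return grid
```

In this sketch, a 2×3 grid and a 3×2 grid containing the same six token ids produce different sequences, which is the kind of ambiguity the height, width, and end-of-line markers are meant to remove.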

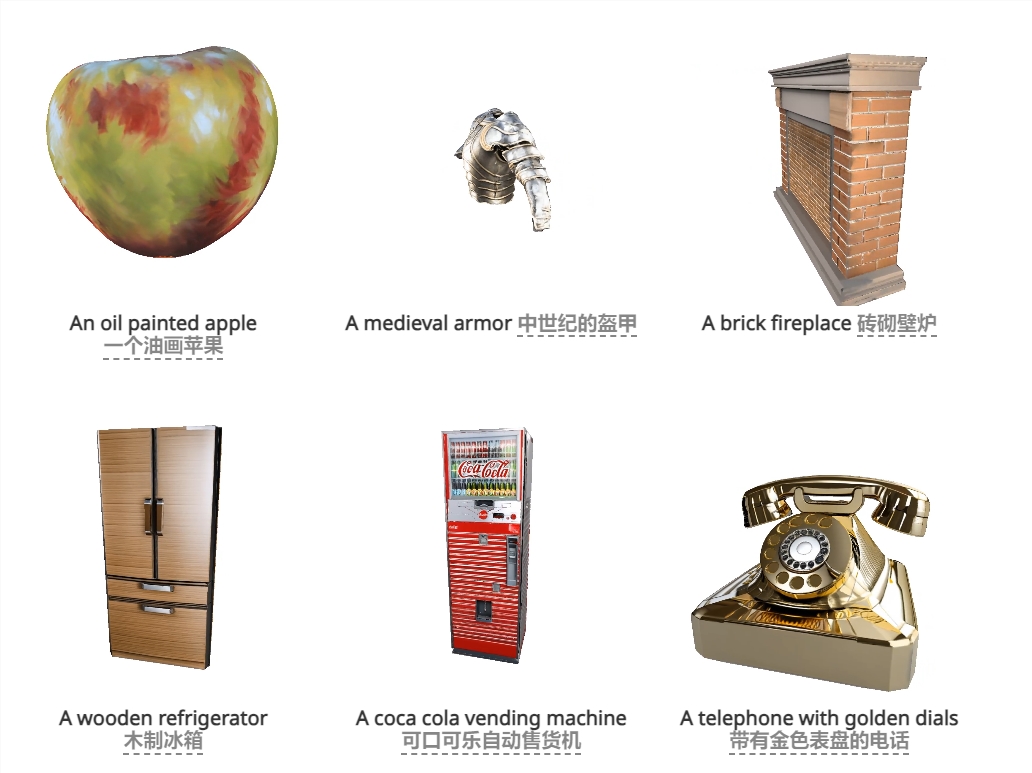

In terms of performance, Lumina-mGPT significantly surpasses previous AR models in generating photorealistic images. It can produce high-resolution images of 1024x1024 pixels with rich details that are highly consistent with the provided text prompts. Researchers report that Lumina-mGPT requires only 10 million image-text pairs for training, far fewer than the 50 million pairs needed for LlamaGen. Despite the smaller dataset, Lumina-mGPT still outperforms competitors in image quality and visual consistency. Furthermore, the model supports various tasks such as visual question answering, dense captioning, and controllable image generation, showcasing its flexibility as a multimodal generalist.

Lumina-mGPT's flexible and scalable architecture further supports diverse, high-quality image generation. At decoding time the model uses classifier-free guidance (CFG), which plays a crucial role in improving the quality of generated images. Sampling parameters also matter: by adjusting the temperature and top-k values, users can trade off detail against diversity in the generated images, which helps reduce visual artifacts and improve overall aesthetics.
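The following Python sketch illustrates how these decoding controls are commonly combined for a single next-token step in an autoregressive model. It assumes the widely used logit-space form of classifier-free guidance, `uncond + scale * (cond - uncond)`, followed by temperature scaling and top-k sampling. The function names and default values are hypothetical, and the article does not confirm that Lumina-mGPT implements decoding in exactly this way.

```python
import math
import random
from typing import List, Optional


def cfg_logits(cond: List[float], uncond: List[float], guidance_scale: float) -> List[float]:
    """Push logits away from the unconditional prediction, toward the prompt-conditioned one."""
    if len(cond) != len(uncond):
        raise ValueError("logit vectors must have the same length")
    return [u + guidance_scale * (c - u) for c, u in zip(cond, uncond)]


def sample_top_k(
    logits: List[float],
    temperature: float = 1.0,
    top_k: Optional[int] = None,
    rng: Optional[random.Random] = None,
) -> int:
    """Sample one token index after temperature scaling and top-k filtering."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    if top_k is not None and top_k < 1:
        raise ValueError("top_k must be at least 1")
    rng = rng or random.Random()
    scaled = [value / temperature for value in logits]
    k = len(scaled) if top_k is None else min(top_k, len(scaled))
    # Keep only the k highest-scoring candidate tokens.
    candidates = sorted(range(len(scaled)), key=lambda i: scaled[i], reverse=True)[:k]
    # Softmax weights over the candidates, shifted by the max for numerical stability.
    peak = max(scaled[i] for i in candidates)
    weights = [math.exp(scaled[i] - peak) for i in candidates]
    return rng.choices(candidates, weights=weights, k=1)[0]


# Example step with made-up logits over a four-token vocabulary.
guided = cfg_logits([2.0, 0.5, 0.1, -1.0], [1.0, 1.0, 0.0, 0.0], guidance_scale=3.0)
next_token = sample_top_k(guided, temperature=0.8, top_k=2, rng=random.Random(0))
```

In this formulation, a guidance scale of 1 leaves the conditional logits unchanged and larger values follow the prompt more strongly. A lower temperature or a smaller top-k concentrates sampling on the most likely tokens, which generally reduces diversity.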

Lumina-mGPT marks a significant advance in autoregressive image generation. By narrowing the gap between AR models and diffusion models, it offers a powerful new tool for generating photorealistic images from text. Its approach to multimodal pre-training and flexible fine-tuning demonstrates the potential of AR models and points toward more capable and versatile AI systems in the future.

Project link: https://top.aibase.com/tool/lumina-mgpt

Online demo: https://106.14.2.150:10020/