According to the official WeChat account of the Doubao large model team, "VideoWorld", an experimental video generation model developed by the Doubao team in joint research with Beijing Jiaotong University and the University of Science and Technology of China, has recently been officially open-sourced.

The standout feature of this model is that it does not rely on a language model: it learns to recognize and understand the world from visual information alone. The research was inspired by an idea Professor Fei-Fei Li raised in her TED talk, that "children can understand the real world without relying on language."

"VideoWorld" acquires complex reasoning, planning, and decision-making abilities by analyzing and processing large amounts of video data. According to the research team's experiments, the model achieves significant results with only 300M parameters. Unlike existing models that rely on language or labeled data, VideoWorld learns knowledge directly from unlabeled video, which offers a more intuitive way to learn complex tasks such as origami and tying a tie.

To validate the model, the research team set up two experimental environments: Go and simulated robot control. Go is a highly strategic game, so it is an effective test of the model's rule learning and reasoning, while the robot tasks evaluate its control and planning. During training, the model gradually builds its ability to predict future frames by watching a large amount of video demonstration data.

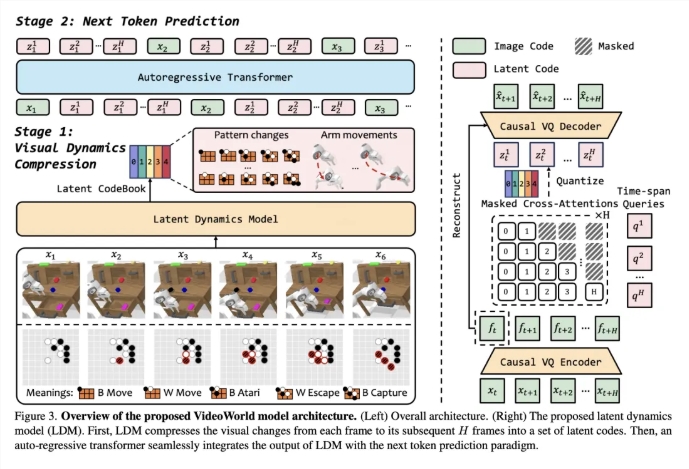

To make video learning more efficient, the team introduced a Latent Dynamics Model (LDM), which compresses the visual changes between video frames in order to extract the key information. This reduces redundant information and makes the model more efficient at learning complex knowledge. With this design, VideoWorld has demonstrated strong capabilities in both Go and robot tasks, reaching the level of a professional 5-dan Go player.
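The idea of keeping only what changes between frames can be illustrated with a toy example. The sketch below is not the LDM from the paper, which learns its compact representation from data; it is a hand-written delta encoding on a made-up Go-board grid, and every name in it (`Frame`, `encode_changes`, `compress_video`, and so on) is hypothetical. It only shows why frame-to-frame changes carry far less data than the full frames.

```python
from typing import List, Tuple

# Toy frame: a grid of ints (0 = empty, 1 = black, 2 = white).
# This encoding is an assumption made for illustration only.
Frame = List[List[int]]
Change = Tuple[int, int, int]  # (row, col, new_value)


def encode_changes(prev: Frame, curr: Frame) -> List[Change]:
    """Return only the cells that differ between two consecutive frames."""
    return [
        (r, c, curr[r][c])
        for r in range(len(curr))
        for c in range(len(curr[r]))
        if prev[r][c] != curr[r][c]
    ]


def apply_changes(prev: Frame, changes: List[Change]) -> Frame:
    """Rebuild the next frame from the previous frame plus a change list."""
    nxt = [row[:] for row in prev]  # copy so the input frame is not mutated
    for r, c, value in changes:
        nxt[r][c] = value
    return nxt


def compress_video(frames: List[Frame]) -> Tuple[Frame, List[List[Change]]]:
    """Keep the first frame in full and every later frame as a change list."""
    codes = [encode_changes(a, b) for a, b in zip(frames, frames[1:])]
    return frames[0], codes


def decompress_video(first: Frame, codes: List[List[Change]]) -> List[Frame]:
    """Replay the change lists to recover the original frame sequence."""
    frames = [first]
    for changes in codes:
        frames.append(apply_changes(frames[-1], changes))
    return frames


# A three-frame "video" of a 19x19 board: empty, then two moves.
SIZE = 19
empty = [[0] * SIZE for _ in range(SIZE)]
after_black = apply_changes(empty, [(3, 3, 1)])
after_white = apply_changes(after_black, [(15, 15, 2)])
video = [empty, after_black, after_white]

first, codes = compress_video(video)
cells_per_frame = SIZE * SIZE
changed_cells = sum(len(c) for c in codes)
print(f"full frames: {cells_per_frame} cells each; changes stored: {changed_cells}")
```

In this toy case each new frame is described by a single changed cell instead of the whole grid. A learned model works with real pixels and a learned latent space rather than exact cell differences, so the sketch should be read as an analogy only.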

VideoWorld: Exploring Knowledge Learning from Unlabeled Videos

Paper Link: https://arxiv.org/abs/2501.09781

Code Link: https://github.com/bytedance/VideoWorld

Project Homepage: https://maverickren.github.io/VideoWorld.github.io

Key Highlights:

🌟 The "VideoWorld" model can achieve knowledge learning solely through visual information, without relying on language models.

🤖 The model exhibits exceptional reasoning and planning abilities in Go and robot simulation tasks.

🔓 The project code and model have been open-sourced, and the team welcomes participation and feedback from the community.