In the field of artificial intelligence, the DeepSeek team recently announced their latest research results, introducing a sparse attention mechanism called NSA (Native Sparse Attention). The technology aims to speed up long-context training and inference, and its design is optimized for modern hardware.

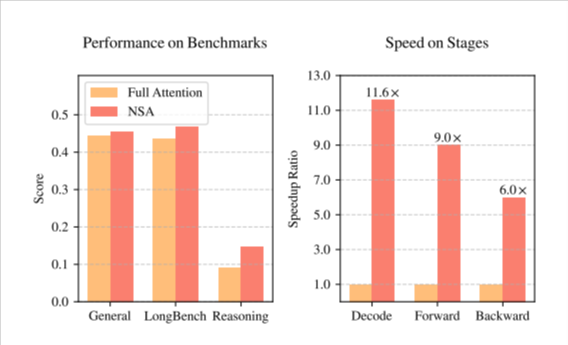

NSA changes the cost profile of training and running long-context models. Through a series of design optimizations tailored to modern computing hardware, it significantly improves inference speed and reduces the cost of pre-training. More importantly, according to the team, these gains do not come at the expense of quality: model performance across various tasks is maintained.

In their research, the DeepSeek team employed a hierarchical sparse strategy that divides the attention mechanism into three branches: compression, selection, and sliding window. This design allows the model to capture both global context and local details at the same time, improving its ability to handle long texts. In addition, NSA optimizes memory access and computation scheduling, which significantly reduces the computational latency and resource consumption of long-context training.
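The three-branch idea can be illustrated with a small sketch for a single query vector. This is not the DeepSeek implementation: the function names are hypothetical, and mean pooling for compression, dot-product ranking for block selection, and fixed equal gates for combining the branches are all simplifying assumptions chosen to keep the example short.

```python
import math

def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def attend(q, keys, values):
    """Plain scaled dot-product attention for one query vector."""
    scale = 1.0 / math.sqrt(len(q))
    weights = softmax([dot(q, k) * scale for k in keys])
    dim = len(values[0])
    return [sum(w * v[d] for w, v in zip(weights, values)) for d in range(dim)]

def mean_pool(vectors):
    dim = len(vectors[0])
    return [sum(v[d] for v in vectors) / len(vectors) for d in range(dim)]

def sparse_attention(q, keys, values, block=4, top_n=2, window=4):
    """Toy three-branch sparse attention (assumes window >= 1)."""
    # Split the context into contiguous blocks of `block` tokens.
    blocks = [(s, min(s + block, len(keys))) for s in range(0, len(keys), block)]

    # Branch 1, compression: one pooled key/value per block (coarse global view).
    # Assumption: mean pooling stands in for whatever compressor the model uses.
    ck = [mean_pool(keys[a:b]) for a, b in blocks]
    cv = [mean_pool(values[a:b]) for a, b in blocks]
    out_cmp = attend(q, ck, cv)

    # Branch 2, selection: rank blocks by their compressed-key score and
    # attend to the original tokens of the top_n blocks only.
    ranked = sorted(range(len(blocks)), key=lambda i: dot(q, ck[i]), reverse=True)
    chosen = sorted(ranked[:top_n])
    idx = [t for i in chosen for t in range(*blocks[i])]
    out_sel = attend(q, [keys[t] for t in idx], [values[t] for t in idx])

    # Branch 3, sliding window: the most recent `window` tokens (local detail).
    out_win = attend(q, keys[-window:], values[-window:])

    # Assumption: equal fixed gates; a real model would likely learn these.
    gates = (1 / 3, 1 / 3, 1 / 3)
    out = [gates[0] * c + gates[1] * s + gates[2] * w
           for c, s, w in zip(out_cmp, out_sel, out_win)]
    return out, idx
```

With 16 context tokens and the default settings, the query scores 4 compressed blocks, 8 selected tokens, and 4 window tokens instead of all 16 keys in one dense pass. The saving grows with context length, because the selected and window sets stay fixed in size while the compressed set grows only with the number of blocks.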

In a series of general benchmark tests, NSA performed well. In long-context tasks and instruction-based reasoning in particular, its performance is comparable to that of full-attention models, and in some cases it even outperforms them. The release marks another step forward in AI training and inference technology and brings new momentum to the future development of artificial intelligence.

NSA paper: https://arxiv.org/pdf/2502.11089v1

Key Points:

🌟 NSA significantly speeds up long-context training and inference while reducing pre-training costs.

🛠️ A hierarchical sparse strategy divides the attention mechanism into three branches (compression, selection, and sliding window), enhancing the model's ability to handle long texts.

📈 NSA performs well in multiple benchmark tests, surpassing traditional full-attention models in some cases.