Recently, a research team from Microsoft, working with researchers from several universities, released “Magma,” a multimodal foundation model for AI agents. The model is designed to process and integrate images, text, and video in order to perform complex tasks in both digital and physical environments. Multimodal AI agents of this kind are increasingly used in fields such as robotics, virtual assistants, and user interface automation.

Previous AI systems typically focused on either vision-language understanding or robotic manipulation, which made it difficult to combine the two capabilities in a single model. Many existing models perform well in one domain but generalize poorly to other application scenarios. For instance, Pix2Act and WebGUM excel at UI navigation, while OpenVLA and RT-2 are better suited to robotic manipulation; these models are usually trained separately and struggle to bridge the gap between digital and physical environments.

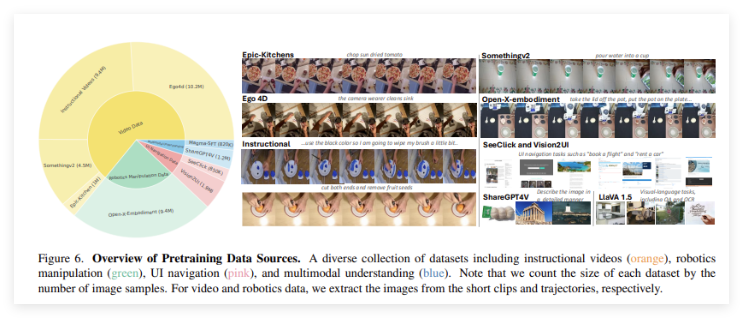

Magma aims to overcome these limitations. Its training approach combines multimodal understanding, action grounding (localizing where to act), and planning, so that a single agent can operate across different environments. The pre-training dataset contains 39 million samples spanning images, videos, and robotic action trajectories. The model also relies on two labeling techniques: “Set-of-Mark” (SoM) and “Trace-of-Mark” (ToM). SoM overlays numbered marks on actionable visual objects, such as clickable UI elements, so the model can identify and refer to them; ToM traces how those marks move over time in videos, which helps the model learn to plan future actions.
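To make the two labeling ideas more concrete, here is a minimal, hypothetical Python sketch. It is not Magma's implementation; the names `Box`, `set_of_mark`, `ground_action`, and `trace_of_mark` are illustrative assumptions. It assumes candidate regions are already available as bounding boxes and that a mark's position has already been tracked across video frames. The point is only to show how SoM reduces grounding to predicting a mark number, and how ToM turns a tracked mark into a short sequence of future movements.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Box:
    """Hypothetical bounding box of a candidate actionable region (pixels)."""
    x0: float
    y0: float
    x1: float
    y1: float

    def center(self) -> tuple[float, float]:
        return ((self.x0 + self.x1) / 2, (self.y0 + self.y1) / 2)


def set_of_mark(boxes: list[Box]) -> dict[int, Box]:
    """Set-of-Mark idea (sketch): give each candidate region a numeric mark 1, 2, 3, ..."""
    return {i + 1: box for i, box in enumerate(boxes)}


def ground_action(marks: dict[int, Box], mark_id: int) -> tuple[float, float]:
    """Resolve a predicted mark number to a concrete click point (the region center)."""
    return marks[mark_id].center()


def trace_of_mark(track: list[tuple[float, float]], horizon: int) -> list[tuple[float, float]]:
    """Trace-of-Mark idea (sketch): offsets of a mark over the next `horizon` frames,
    relative to its position in the current frame (track[0])."""
    x_now, y_now = track[0]
    return [(x - x_now, y - y_now) for x, y in track[1 : horizon + 1]]


marks = set_of_mark([Box(0, 0, 10, 10), Box(20, 20, 40, 30)])
print(ground_action(marks, 2))  # (30.0, 25.0)
print(trace_of_mark([(5, 5), (6, 7), (8, 9), (10, 12)], horizon=2))  # [(1, 2), (3, 4)]
```

In this toy framing, a model only has to output a small integer ("click mark 2") or a short list of offsets instead of raw pixel coordinates, which is one way to see why mark-based labels can make grounding and planning easier to learn.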

Magma builds on large-scale pre-training. It uses a ConvNeXt-XXL vision backbone to encode images and videos and the LLaMA-3-8B language model to process text, which allows it to integrate visual perception, language, and action execution in one architecture. According to the team, the trained model achieves strong results across multiple tasks, demonstrating solid multimodal understanding and spatial reasoning.

Project link: https://microsoft.github.io/Magma/

Key Highlights:

🌟 The Magma model was pre-trained on 39 million samples drawn from images, videos, and robotic action trajectories.

🤖 The model integrates vision, language, and action in a single system, addressing a limitation of earlier models that specialized in either UI navigation or robotic manipulation.

📈 Magma performed well on multiple benchmarks, demonstrating strong generalization and effective action planning and execution.