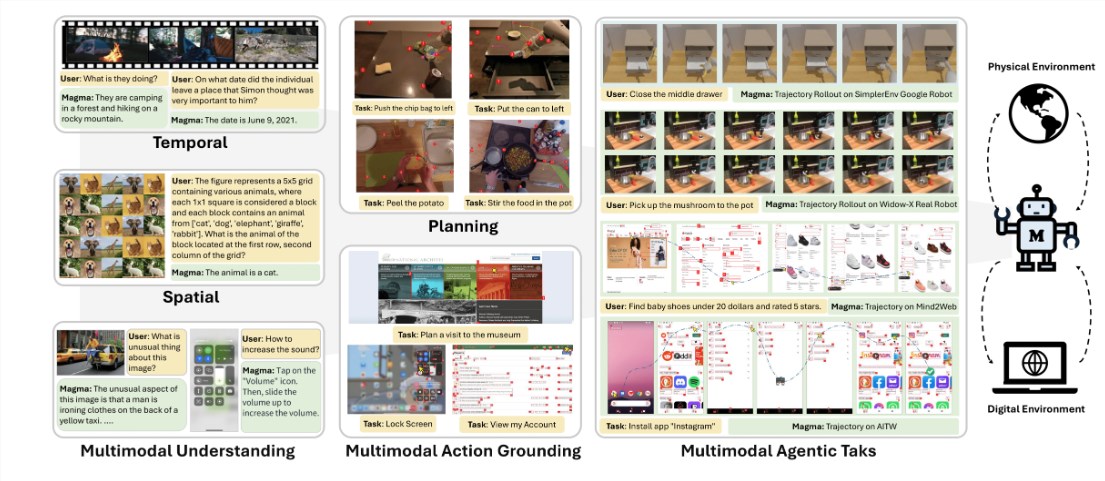

Microsoft has officially released and open-sourced Magma, a multimodal foundation model for AI agents, announcing it on its website. Compared with traditional smart assistants, Magma offers significantly broader multimodal capabilities. It can process images, videos, and text, which helps bridge the gap between the digital and physical worlds.

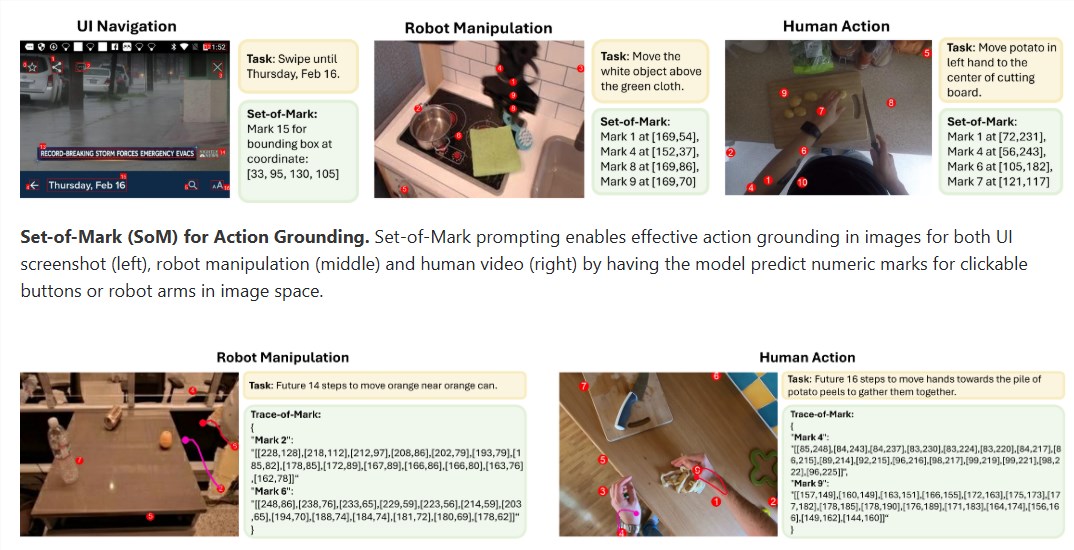

Magma can assist users with everyday tasks such as automated online shopping and checking the weather. It can also work with physical robots to carry out more complex operations. For example, during a real-world chess game, Magma can offer strategic advice in real time, enriching the playing experience. It also has predictive capabilities: it can anticipate the future actions of people or objects in a video. This helps virtual assistants and robots understand their dynamic surroundings and respond accordingly.

According to Microsoft's official introduction, Magma has a wide range of applications. It can help home robots learn to organize items they have not seen before, and it can help virtual assistants generate step-by-step user-interface navigation instructions for unfamiliar tasks. These features give users more precise assistance and guidance when they face new environments or tasks.

Magma is a Vision-Language-Action (VLA) foundation model trained on large amounts of publicly available visual and language data. This training allows it to integrate language, spatial, and temporal intelligence, so it can tackle complex tasks in both the digital and physical worlds.

By open-sourcing Magma, Microsoft gives developers and researchers a powerful tool that could accelerate progress in smart assistants and home robotics. As the technology matures, more applications built on Magma are likely to appear in everyday life.

Project address: https://microsoft.github.io/Magma/