Recently, Microsoft expanded its Phi-4 family with two new models: Phi-4-multimodal and Phi-4-mini. These additions significantly enhance processing capabilities for various AI applications.

Phi-4-multimodal, Microsoft's first unified architecture model integrating voice, vision, and text processing, boasts 56 million parameters. It excels in numerous benchmark tests, outperforming many competitors, including Google's Gemini 2.0 series. Its performance is particularly noteworthy in Automatic Speech Recognition (ASR) and Speech Translation (ST) tasks, surpassing specialized models like WhisperV3 and SeamlessM4T-v2-Large. It achieved a remarkable 6.14% word error rate, securing the top spot on the Hugging Face OpenASR leaderboard.

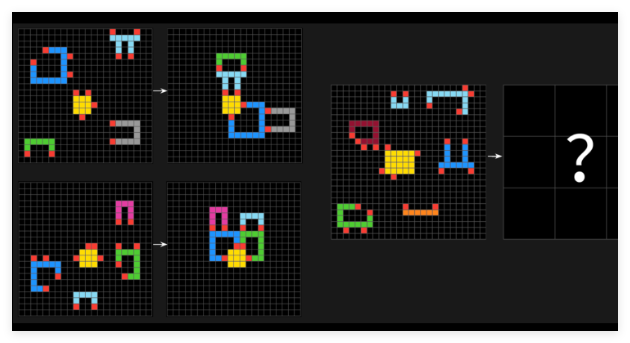

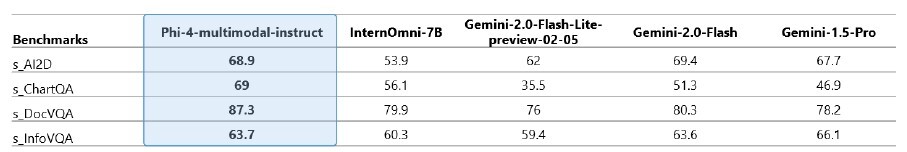

Phi-4-multimodal also demonstrates impressive visual processing capabilities. Its mathematical and scientific reasoning skills are remarkable, enabling effective understanding of documents, charts, and Optical Character Recognition (OCR). Its performance rivals, and even surpasses, popular models like Gemini-2-Flash-lite-preview and Claude-3.5-Sonnet.

The newly released Phi-4-mini model focuses on text processing tasks, featuring 38 million parameters. It demonstrates exceptional performance in text reasoning, mathematical calculations, programming, and instruction following, outperforming several popular large language models. To ensure safety and reliability, Microsoft conducted thorough testing with internal and external security experts, optimizing the models according to the Microsoft AI Red Teaming (AIRT) standards.

Both new models are deployable on various devices via ONNX Runtime, suitable for numerous low-cost and low-latency applications. They are available on Azure AI Foundry, Hugging Face, and the NVIDIA API catalog for developer use. Undoubtedly, the new Phi-4 models represent a significant advancement in efficient AI technology, opening new possibilities for future AI applications.