Have you heard of OpenAI's famously expensive Sora? With training costs that easily run into the millions of dollars, it is the "Rolls-Royce" of video generation. Now Luchen Technology (HPC-AI Tech) has released Open-Sora 2.0, an open-source video generation model!

For about $200,000, using a cluster of 224 GPUs, the team trained a commercial-grade video generation model with 11 billion parameters.

Performance Rivals "OpenAI Sora"

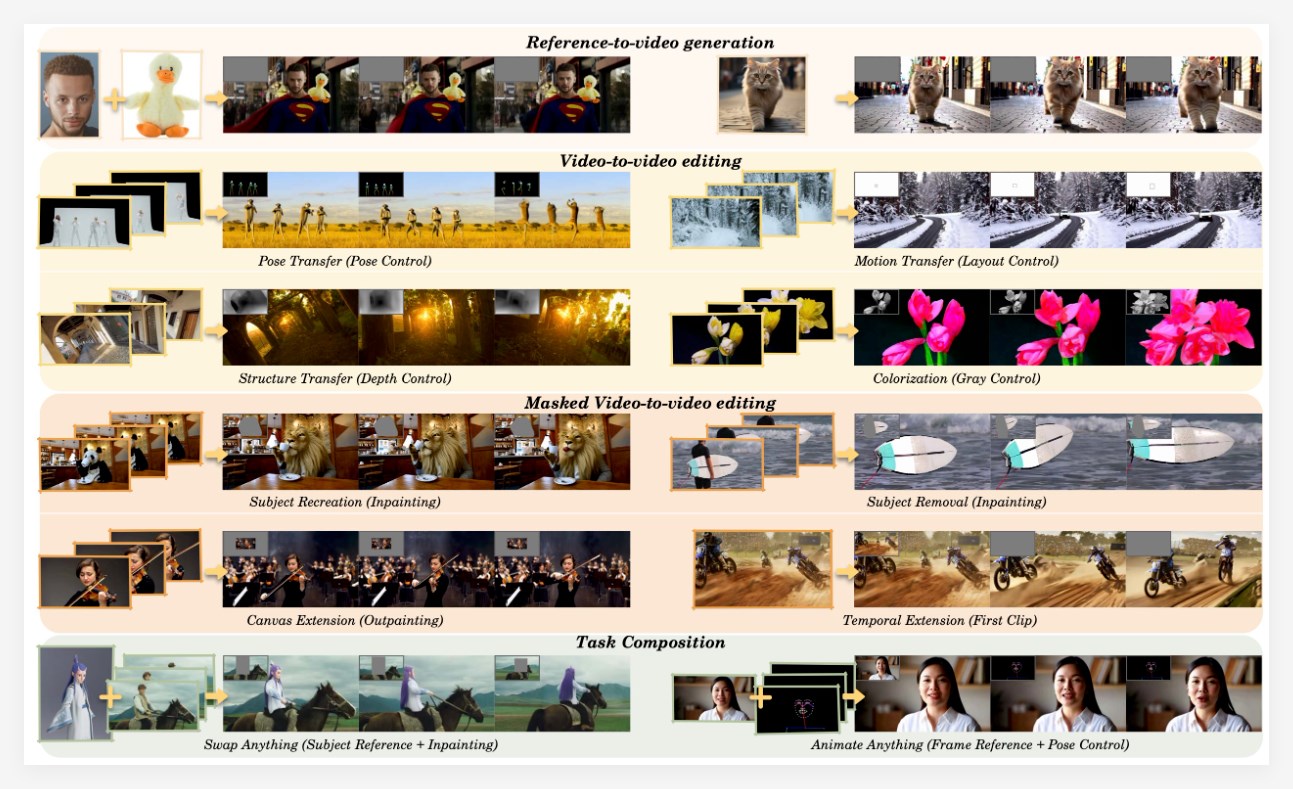

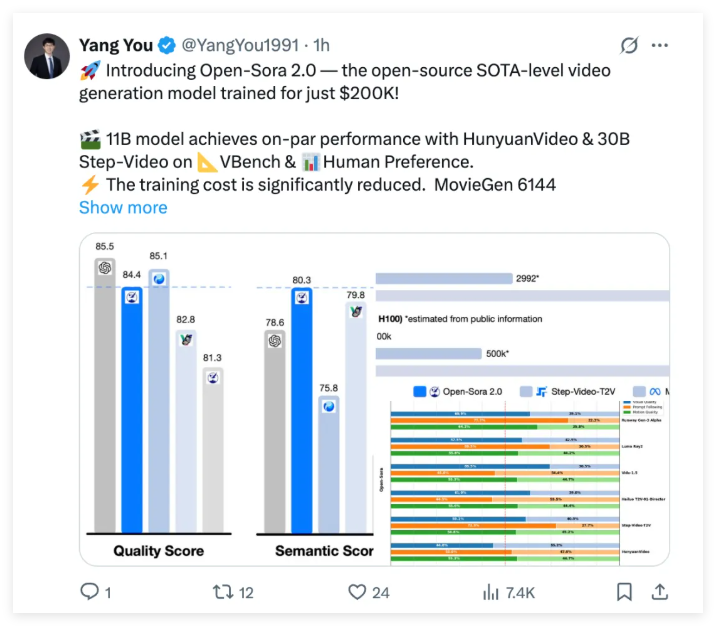

Despite its low cost, Open-Sora 2.0 is no slouch. It takes on leading models such as HunyuanVideo and the 30-billion-parameter Step-Video. On the VBench benchmark and in user preference tests, it matches closed-source models that cost millions of dollars to train on many key metrics.

Even more exciting, the VBench gap between Open-Sora and OpenAI's Sora has shrunk from 4.52% to just 0.69%. That is close to performance parity!

Furthermore, Open-Sora 2.0 outscored Tencent's HunyuanVideo on VBench. It achieves higher performance at a lower cost, setting a new bar for open-source video generation technology!

In user preference tests, which cover visual presentation, text consistency, and motion performance, Open-Sora 2.0 beats both the open-source SOTA model HunyuanVideo and commercial models like Runway Gen-3 Alpha on at least two of the three metrics.

The Secret to Low-Cost High Performance

You're probably wondering how Open-Sora 2.0 achieves such high performance at such a low cost. There are several key factors. First, the Open-Sora team continued the design philosophy of Open-Sora 1.2, using a 3D autoencoder and a Flow Matching training framework. They also introduced a 3D full attention mechanism to further improve video generation quality.
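For readers unfamiliar with Flow Matching, the core training objective can be sketched in a few lines. This is a minimal illustration of the common linear-path variant, not Open-Sora's actual training code: the function names, the NumPy stand-in for the network, and the choice of `t = 0` as noise and `t = 1` as data are all assumptions made for the example.

```python
import numpy as np

def flow_matching_batch(x_data, rng):
    """Build one training batch for linear-path flow matching.

    x_data: clean latents, shape (batch, dim).
    Returns the interpolated points x_t, the times t, and the target velocities.
    """
    noise = rng.standard_normal(x_data.shape)    # sample from the Gaussian prior
    t = rng.uniform(size=(x_data.shape[0], 1))   # one time per sample in [0, 1)
    x_t = (1.0 - t) * noise + t * x_data         # point on the straight path noise -> data
    target_velocity = x_data - noise             # derivative of x_t with respect to t
    return x_t, t, target_velocity

def flow_matching_loss(predict_velocity, x_data, rng):
    """Mean squared error between predicted and target velocities."""
    x_t, t, target = flow_matching_batch(x_data, rng)
    return float(np.mean((predict_velocity(x_t, t) - target) ** 2))

rng = np.random.default_rng(0)
latents = rng.standard_normal((8, 16))           # stand-in for autoencoder latents
zero_model = lambda x_t, t: np.zeros_like(x_t)   # hypothetical placeholder for the real network
loss = flow_matching_loss(zero_model, latents, rng)
```

In a real system, `predict_velocity` would be the video transformer operating on latents from the 3D autoencoder, and sampling would integrate the learned velocity field from noise to data.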

To optimize costs, Open-Sora 2.0 employed several strategies:

- Rigorous data filtering to ensure high-quality training data, improving efficiency from the source.

- Prioritizing low-resolution training to efficiently learn motion information and reduce computational costs. High-resolution training can be tens of times more expensive!

- Prioritizing image-to-video tasks to accelerate model convergence and further reduce training costs. In the inference stage, text-to-image-to-video (T2I2V) can be used for finer visual effects.

- Employing a highly efficient parallel training scheme, combined with Colossal-AI and system-level optimizations, to significantly improve utilization of computational resources. Advanced techniques such as efficient sequence parallelism, ZeroDP, fine-grained gradient checkpointing, and automatic training recovery further boost training efficiency.

It's estimated that training an open-source video model with over 10 billion parameters typically costs more than one million dollars. Open-Sora 2.0 cuts this cost by a factor of 5 to 10. This is a boon for the video generation field, enabling more people to participate in the research and development of high-quality video generation.
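A rough back-of-the-envelope calculation shows why low-resolution training is so much cheaper when full attention is used. The sketch below is illustrative only: the 256px and 768px resolutions, the 128-frame clip, the 4×8×8 autoencoder compression, and the patch size of 2 are assumptions chosen for the example, not figures taken from this article.

```python
def latent_tokens(frames, height, width, t_stride=4, s_stride=8, patch=2):
    """Number of tokens the transformer sees for one video clip.

    t_stride / s_stride: assumed temporal and spatial compression of the autoencoder.
    patch: assumed spatial patch size used when turning latents into tokens.
    """
    t = frames // t_stride
    h = height // (s_stride * patch)
    w = width // (s_stride * patch)
    return t * h * w

def relative_attention_cost(tokens_a, tokens_b):
    """Full attention compares every token pair, so cost grows with tokens squared."""
    return (tokens_b / tokens_a) ** 2

low = latent_tokens(frames=128, height=256, width=256)
high = latent_tokens(frames=128, height=768, width=768)
token_ratio = high / low
attention_ratio = relative_attention_cost(low, high)
print(f"tokens: {low} -> {high} ({token_ratio:.0f}x), attention cost ~{attention_ratio:.0f}x")
```

Under these assumptions, tripling the resolution gives 9 times as many tokens and about 81 times the pairwise attention work, which is consistent with the claim that high-resolution training can be tens of times more expensive.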

Open Source Sharing, Building a Thriving Ecosystem

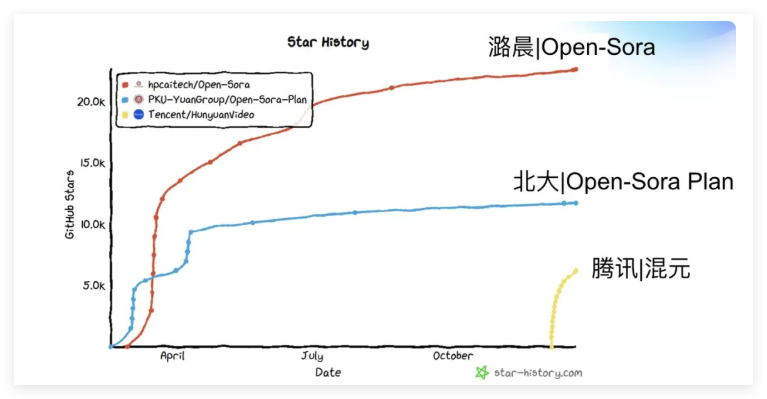

Even more commendable, Open-Sora has open-sourced not only the model code and weights but also the entire training pipeline, giving the open-source ecosystem a significant boost. According to third-party technology platforms, Open-Sora's academic paper has gathered nearly 100 citations in half a year, securing a top position in global open-source influence rankings and making it one of the world's most influential open-source video generation projects.

The Open-Sora team is also actively exploring high-compression video autoencoders to significantly reduce inference costs. They trained a high-compression (4×32×32) video autoencoder that cuts the time to generate a 768px, 5-second video on a single GPU from nearly 30 minutes to under 3 minutes, a 10x speedup! This means high-quality video content can be generated much faster in the future.

With its low cost, high performance, and fully open-source release, Luchen Technology's Open-Sora 2.0 brings a welcome "budget-friendly" trend to the video generation field. It not only narrows the gap with top closed-source models but also lowers the barrier to entry for high-quality video generation, allowing more developers to participate and drive the technology forward.
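To get a feel for what a 4×32×32 compression ratio means, the sketch below compares the latent grid it produces with that of a less aggressive autoencoder. The 4×8×8 baseline, the 24 fps frame rate, and the square 768×768 frame are assumptions for illustration; only the 4×32×32 ratio and the 768px, 5-second clip come from the article.

```python
from math import ceil

def latent_shape(frames, height, width, compression):
    """Latent grid (T, H, W) after compressing by (time, height, width) factors."""
    ct, ch, cw = compression
    return (ceil(frames / ct), ceil(height / ch), ceil(width / cw))

def positions(shape):
    """Total number of latent positions in a (T, H, W) grid."""
    t, h, w = shape
    return t * h * w

clip = dict(frames=120, height=768, width=768)             # assumed: 5 s at 24 fps, square frame
baseline = latent_shape(**clip, compression=(4, 8, 8))      # assumed baseline, not from the article
high = latent_shape(**clip, compression=(4, 32, 32))        # ratio quoted in the article
reduction = positions(baseline) / positions(high)
```

With these numbers the latent grid shrinks from 30×96×96 to 30×24×24, a 16x reduction in positions. The end-to-end speedup depends on other factors as well (for example, how latents are patched into tokens), so this figure should not be read as a prediction of the reported 10x.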

🔗 GitHub Open Source Repository: https://github.com/hpcaitech/Open-Sora

📄 Technical Report: https://github.com/hpcaitech/Open-Sora-Demo/blob/main/paper/Open_Sora_2_tech_report.pdf