HPC-AI Tech recently announced Open-Sora 2.0, an open-source video AI system that reaches commercial-grade quality at approximately one-tenth the typical training cost. The result suggests that the resource-intensive video AI field may see efficiency gains similar to those already seen in language models.

While existing high-quality video generation systems like Movie Gen and Step-Video-T2V can cost millions of dollars to train, Open-Sora 2.0's training expenses totaled around $200,000. Despite this cost reduction, tests show its output quality rivals established commercial systems such as Runway Gen-3 Alpha and HunyuanVideo. The system was trained on 224 Nvidia H200 GPUs.

Prompt: "Two women sit on a beige sofa in a cozy room with a brick wall backdrop. They're engaged in pleasant conversation, smiling, and raising glasses to celebrate red wine in a close-up medium shot." | Video: HPC-AI Tech

Open-Sora 2.0 achieves its efficiency through a three-stage training process that starts with low-resolution videos and progressively moves to higher resolutions. Integrating pre-trained image models like Flux further reduces resource use. At its core is a video DC-AE autoencoder, which compresses video more aggressively than traditional methods. This translates to 5.2x faster training and more than 10x faster video generation. The higher compression slightly reduces output detail, but it substantially accelerates video creation.

Prompt: "A tomato surfs on a lettuce leaf down a ranch dressing waterfall, with exaggerated surfing moves and smooth wave effects highlighting the fun of 3D animation." | Video: HPC-AI Tech

This open-source system can generate videos from text descriptions and single images, and allows users to control the intensity of motion in the generated clips through a motion scoring function. Examples provided by HPC-AI Tech showcase a variety of scenarios, including realistic conversations and whimsical animations.

However, Open-Sora 2.0 is currently limited in resolution (768x768 pixels) and maximum video length (5 seconds or 128 frames), falling short of leading models like OpenAI's Sora. Nevertheless, its performance in key areas like visual quality, prompt accuracy, and motion handling is approaching commercial standards. Notably, Open-Sora 2.0's VBench score now lags behind OpenAI's Sora by only 0.69%, a significant improvement from the 4.52% gap in previous versions.
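To put the two VBench figures in proportion, the short Python sketch below works out how much of the earlier gap to Sora has been closed. It uses only the two percentages quoted above and assumes they are measured the same way and are directly comparable; the variable names are illustrative.

```python
# VBench gap to OpenAI's Sora, as quoted in the article (percent).
# Assumption: both figures are measured the same way and can be compared directly.
previous_gap_pct = 4.52
current_gap_pct = 0.69

# Absolute reduction of the gap, and the share of the earlier gap that was closed.
closed_points = previous_gap_pct - current_gap_pct
closed_fraction = closed_points / previous_gap_pct

print(f"Gap reduced by {closed_points:.2f} points")
print(f"Share of the earlier gap closed: {closed_fraction:.1%}")
```

On these figures, the gap shrank by 3.83 points, which is roughly 85% of the earlier difference.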

Prompt: "A group of anthropomorphic mushrooms are having a disco party in a dark magical forest, with flashing neon lights and exaggerated dance moves, their smooth textures and reflective surfaces emphasizing the comical 3D look." | Video: HPC-AI Tech

Open-Sora 2.0's cost-effective strategy echoes the "DeepSeek moment" in language models, where improved training methods enabled open-source systems to achieve commercial-grade performance at a fraction of the usual cost. This development may put downward pressure on pricing in the video AI field, currently characterized by high computational demands and per-second pricing for services.

Training Cost Comparison: Open-Sora 2.0 requires approximately $200,000, while Movie Gen requires $2.5 million, and Step-Video-T2V requires $1 million. | Image: HPC-AI Tech

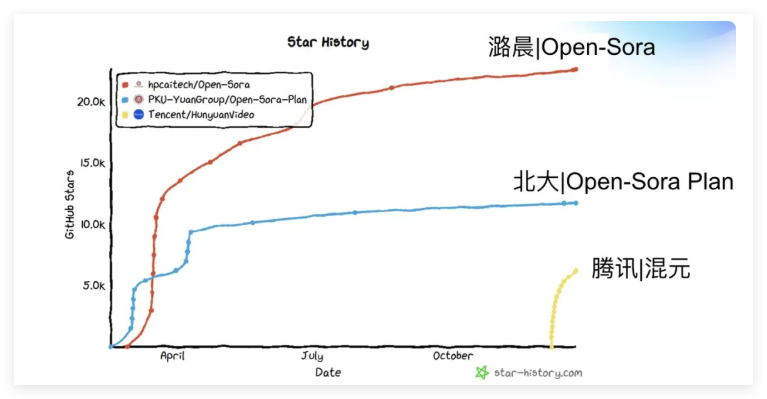
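The cost comparison can be checked with a few lines of arithmetic. The Python sketch below uses only the three dollar figures from the caption and expresses each competing system's training cost as a multiple of Open-Sora 2.0's. The helper name `cost_ratios` is illustrative and not part of any released tool.

```python
# Training cost figures as quoted in the comparison (USD, approximate).
training_cost_usd = {
    "Open-Sora 2.0": 200_000,
    "Movie Gen": 2_500_000,
    "Step-Video-T2V": 1_000_000,
}


def cost_ratios(costs, baseline):
    """Return each system's cost as a multiple of the baseline system's cost."""
    base = costs[baseline]
    return {name: cost / base for name, cost in costs.items() if name != baseline}


ratios = cost_ratios(training_cost_usd, "Open-Sora 2.0")
for name, ratio in ratios.items():
    print(f"{name}: {ratio:.1f}x the training cost of Open-Sora 2.0")
```

On these figures, Movie Gen costs 12.5 times as much to train and Step-Video-T2V 5 times as much, so the "one-tenth" headline number sits between the two comparisons.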

Despite this progress, the performance gap between open-source and commercial video AI remains larger than in language models, highlighting ongoing technical challenges in the field. Open-Sora 2.0 is now available as an open-source project on GitHub.