Following its earlier high-profile advances in voice AI, OpenAI is expanding its work in the field. The creator of ChatGPT has launched three new in-house voice models: the speech-to-text models gpt-4o-transcribe and gpt-4o-mini-transcribe, and the text-to-speech model gpt-4o-mini-tts. The most notable is gpt-4o-transcribe.

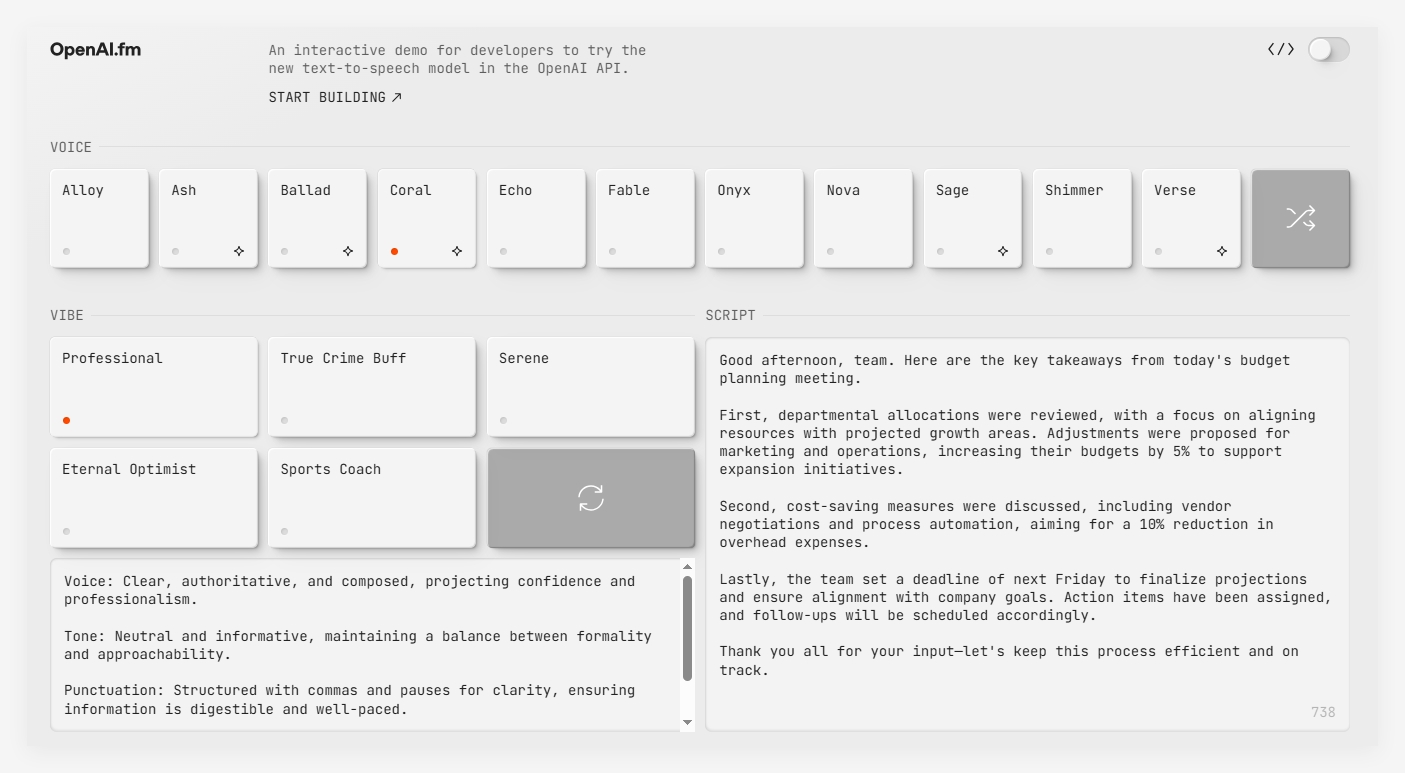

These new models are now available to third-party developers via API, enabling them to build more intelligent applications. OpenAI also offers a demo website, OpenAI.fm, for individual users to try them out.

Key Features Unveiled

So, what makes the highly anticipated gpt-4o-transcribe so special? Simply put, it is the successor to Whisper, the open-source speech-to-text model OpenAI released two years ago, and it aims for a lower word error rate and better overall performance.

According to OpenAI, gpt-4o-transcribe achieves a significantly lower word error rate than Whisper across 33 industry-standard languages. In English, the word error rate is as low as 2.46%. This is a major advance for applications that depend on high-accuracy speech transcription.
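
For readers unfamiliar with the metric, word error rate is conventionally the number of word substitutions, deletions, and insertions needed to turn the model's output into the reference transcript, divided by the number of words in the reference. The sketch below shows that calculation. The function name and the simple lowercase-and-split normalization are assumptions made for illustration; the article does not say how OpenAI normalizes text before scoring.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Return WER = (substitutions + deletions + insertions) / reference words.

    Normalization here (lowercasing, whitespace split) is an illustrative
    assumption, not OpenAI's evaluation procedure.
    """
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")

    # Word-level edit distance, keeping only the previous row of the table.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (0 if ref_word == hyp_word else 1)
            deletion = prev[j] + 1      # reference word missing from output
            insertion = curr[j - 1] + 1  # extra word in output
            curr.append(min(substitution, deletion, insertion))
        prev = curr
    return prev[-1] / len(ref)


if __name__ == "__main__":
    # One wrong word out of five reference words.
    print(word_error_rate("the quick brown fox jumps",
                          "the quick brown box jumps"))
```

By this measure, a 2.46% error rate corresponds to roughly one wrong word in every 40.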

Remarkably, this new model maintains excellent performance in various challenging environments. Whether dealing with noisy surroundings, different accents, or varying speech speeds, gpt-4o-transcribe delivers more accurate transcriptions. It also supports over 100 languages.

To further enhance accuracy, gpt-4o-transcribe incorporates noise reduction and semantic speech activity detection technologies.

OpenAI engineer Jeff Harris explains that the latter helps the model identify when a speaker has finished a complete thought, preventing punctuation errors and improving overall transcription quality. Furthermore, gpt-4o-transcribe supports streaming speech-to-text, allowing developers to continuously input audio and receive real-time text results for a more natural conversational feel.

It's important to note that the gpt-4o-transcribe model family currently lacks "speaker diarization" functionality. This means it focuses on transcribing all audio (potentially containing multiple speakers) into a single text output without distinguishing or labeling individual speakers.

While this might be a limitation in scenarios requiring speaker differentiation, its advantage in improving overall transcription accuracy remains significant.

Developer First: API Access Now Open

gpt-4o-transcribe is now available to developers via the OpenAI API. This allows developers to quickly integrate this powerful speech-to-text capability into their applications, providing users with a more convenient voice interaction experience.
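
As a rough illustration of what that integration can look like, the sketch below builds a request to OpenAI's audio transcription endpoint using only the Python standard library. The helper names, the hand-built multipart body, and the generic `application/octet-stream` content type are assumptions made for this example; the official `openai` Python package offers a higher-level client, and the API reference should be checked for current parameters.

```python
import json
import os
import urllib.request
import uuid

API_URL = "https://api.openai.com/v1/audio/transcriptions"


def build_transcription_request(audio: bytes, filename: str, api_key: str,
                                model: str = "gpt-4o-transcribe") -> urllib.request.Request:
    """Build (but do not send) a multipart POST carrying the audio and model name."""
    boundary = uuid.uuid4().hex
    head = (
        f"--{boundary}\r\n"
        'Content-Disposition: form-data; name="model"\r\n\r\n'
        f"{model}\r\n"
        f"--{boundary}\r\n"
        f'Content-Disposition: form-data; name="file"; filename="{filename}"\r\n'
        "Content-Type: application/octet-stream\r\n\r\n"
    ).encode("utf-8")
    tail = f"\r\n--{boundary}--\r\n".encode("utf-8")
    return urllib.request.Request(
        API_URL,
        data=head + audio + tail,
        method="POST",
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": f"multipart/form-data; boundary={boundary}",
        },
    )


def transcribe(path: str, api_key: str) -> str:
    """Send an audio file for transcription and return the text field of the reply."""
    with open(path, "rb") as f:
        audio = f.read()
    request = build_transcription_request(audio, os.path.basename(path), api_key)
    with urllib.request.urlopen(request) as response:
        return json.load(response)["text"]


# Hypothetical usage, assuming an API key in the environment and a local file:
#   print(transcribe("meeting.wav", os.environ["OPENAI_API_KEY"]))
```

Keeping request construction separate from sending makes it possible to inspect or unit-test the payload without calling the network.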

OpenAI's live demo showed that for applications already built on text models like GPT-4o, adding voice interaction requires only about nine lines of code. For example, e-commerce applications could quickly implement voice responses to user inquiries about order information.

However, OpenAI stated that due to the specific cost and performance requirements of ChatGPT, these new models won't be directly integrated into ChatGPT for now, but gradual integration is anticipated. For developers seeking lower latency and real-time voice interaction, OpenAI recommends using its Realtime API's speech-to-speech models.

With its powerful speech transcription capabilities, gpt-4o-transcribe is poised to excel in various fields. OpenAI suggests applications in customer call centers, automatic meeting minute generation, and AI-powered smart assistants. Companies that have already tested the new model report significant improvements in voice AI performance.

Of course, OpenAI faces competition from other voice AI companies. For example, ElevenLabs' Scribe model also boasts a low error rate and speaker diarization. Hume AI's Octave TTS model offers more granular customization options for pronunciation and emotional control. The open-source community also continues to produce advanced voice models.

With gpt-4o-transcribe and its sibling models, OpenAI has strengthened its position in speech transcription. The release is aimed at developers for now, but the lower error rates and streaming support should benefit the voice experiences built on top of it. As the technology advances, we can expect even more voice AI applications to emerge.

Website: https://www.openai.fm/