A 12th-grade student has built a platform where people can judge how well different AI models perform at building things in Minecraft, offering a fresh perspective on AI benchmarking.

A Novel Benchmarking Approach Addresses Limitations of Traditional Methods

As the limitations of traditional AI benchmarking methods become increasingly apparent, developers are looking for more creative ways to evaluate models. For one group of developers, Minecraft, the Microsoft-owned sandbox building game, has proven to be an ideal choice.

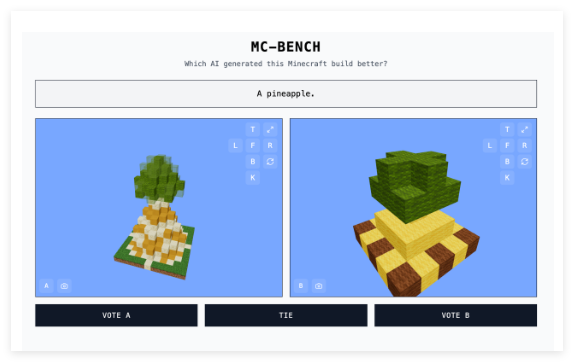

High school student Adi Singh, along with his team, developed the Minecraft Benchmark (MC-Bench) website, which allows AI models to compete head-to-head in challenges, responding to various prompts through Minecraft creations. Users can vote on which model performed better; the AI creator behind each creation is only revealed after voting.
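To make the voting mechanism concrete, here is a minimal sketch of how blind head-to-head votes could be turned into a ranking. It assumes an Elo-style rating update, which is a common choice for pairwise comparisons; the article does not say which scoring method MC-Bench actually uses, and the model names, starting rating, and `k` factor below are purely illustrative.

```python
# Illustrative sketch only: an Elo-style leaderboard built from pairwise votes.
# The article does not describe MC-Bench's real scoring method; Elo is an assumption.

def expected_score(rating_a, rating_b):
    """Probability that A beats B under the Elo model."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


def update_ratings(ratings, winner, loser, k=32.0, start=1000.0):
    """Apply one vote: the winner gains exactly what the loser gives up."""
    r_win = ratings.get(winner, start)
    r_lose = ratings.get(loser, start)
    delta = k * (1.0 - expected_score(r_win, r_lose))
    ratings[winner] = r_win + delta
    ratings[loser] = r_lose - delta
    return ratings


def leaderboard(votes):
    """votes is a list of (winner, loser) pairs, one per user vote."""
    ratings = {}
    for winner, loser in votes:
        update_ratings(ratings, winner, loser)
    # Highest-rated model first
    return sorted(ratings.items(), key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    # Hypothetical votes; the model names are placeholders
    votes = [("model_a", "model_b"), ("model_a", "model_c"), ("model_b", "model_c")]
    for name, rating in leaderboard(votes):
        print(f"{name}: {rating:.1f}")
```

Because voters only learn which model made each build after they vote, a scheme like this would reflect the builds themselves rather than brand preference.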

Singh chose Minecraft as the testing platform because it is so widely recognized. It is one of the best-selling video games of all time, and its blocky visual style is so familiar that even people who have never played it can intuitively judge which blocky pineapple looks better.

"Minecraft makes it easier for people to see the progress in AI development," Singh told TechCrunch. "People are already familiar with Minecraft, its look, and feel."

Major AI Companies Support the Project

MC-Bench currently lists eight volunteer contributors. According to the website, Anthropic, Google, OpenAI, and Alibaba have subsidized the project's use of their products to run benchmarks, but these companies have no other affiliation with the project.

Singh shared his vision for the project's future: "Currently, we're just doing simple builds, reflecting on how far we've come compared to the GPT-3 era, but we plan to expand to long-term planning and goal-oriented tasks. The game might just be a medium to test agent reasoning; it's safer than real life and more controllable in testing, which is ideal in my opinion."

Besides Minecraft, games like Pokémon Red, Street Fighter, and Pictionary have also been used as AI experimentation benchmarks, partly because AI benchmarking itself is incredibly challenging.

Intuitive Evaluation Replaces Complex Metrics

Researchers typically test AI models on standardized evaluations, but these tests often give AI models an unfair advantage. Due to their training, models are inherently good at certain types of problems, especially those involving memorization or basic reasoning.

The gap between test scores and everyday ability shows up in several cases: OpenAI's GPT-4 can score in the 88th percentile on the LSAT but can't accurately count the number of "R"s in the word "strawberry"; Anthropic's Claude 3.7 Sonnet achieves 62.3% accuracy on a standardized software engineering benchmark but performs worse than most five-year-olds at playing Pokémon.

Technically, MC-Bench is a programming benchmark requiring models to write code to create specified builds, such as "Frosty the Snowman" or "a charming tropical beach hut on a pristine beach." But for most users, evaluating the appearance of the snowman is more intuitive than deep code analysis, giving the project broader appeal and the potential to gather more data on model performance.
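As a rough illustration of what "writing code to create a build" can mean, the sketch below generates block placements for a simple snowman. It is not MC-Bench's actual interface, which the article does not describe: the coordinate-to-block dictionary, the helper names `sphere` and `build_snowman`, and the chosen sizes are all assumptions made for this example.

```python
# Illustrative sketch only: a "build" expressed as code that emits block placements.
# The output format (a dict of (x, y, z) -> block name) is hypothetical.

def sphere(center, radius, block):
    """Return {(x, y, z): block} for every grid cell within `radius` of `center`."""
    cx, cy, cz = center
    cells = {}
    for x in range(cx - radius, cx + radius + 1):
        for y in range(cy - radius, cy + radius + 1):
            for z in range(cz - radius, cz + radius + 1):
                if (x - cx) ** 2 + (y - cy) ** 2 + (z - cz) ** 2 <= radius ** 2:
                    cells[(x, y, z)] = block
    return cells


def build_snowman():
    """Stack three snow spheres of shrinking size and add a nose."""
    blocks = {}
    blocks.update(sphere((0, 3, 0), 3, "snow_block"))   # base
    blocks.update(sphere((0, 8, 0), 2, "snow_block"))   # torso
    blocks.update(sphere((0, 11, 0), 1, "snow_block"))  # head
    blocks[(0, 11, 2)] = "orange_wool"                  # nose, one block in front of the head
    return blocks


if __name__ == "__main__":
    snowman = build_snowman()
    print(f"{len(snowman)} blocks placed")
```

A voter never needs to read code like this; they only compare the rendered results from two models side by side.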

Whether these scores say much about how useful the models are in practice remains to be seen, but Singh believes they are a strong signal: "The current leaderboard is very close to my own experience using these models, unlike many purely text-based benchmarks. Maybe MC-Bench can help companies understand if they are heading in the right direction."