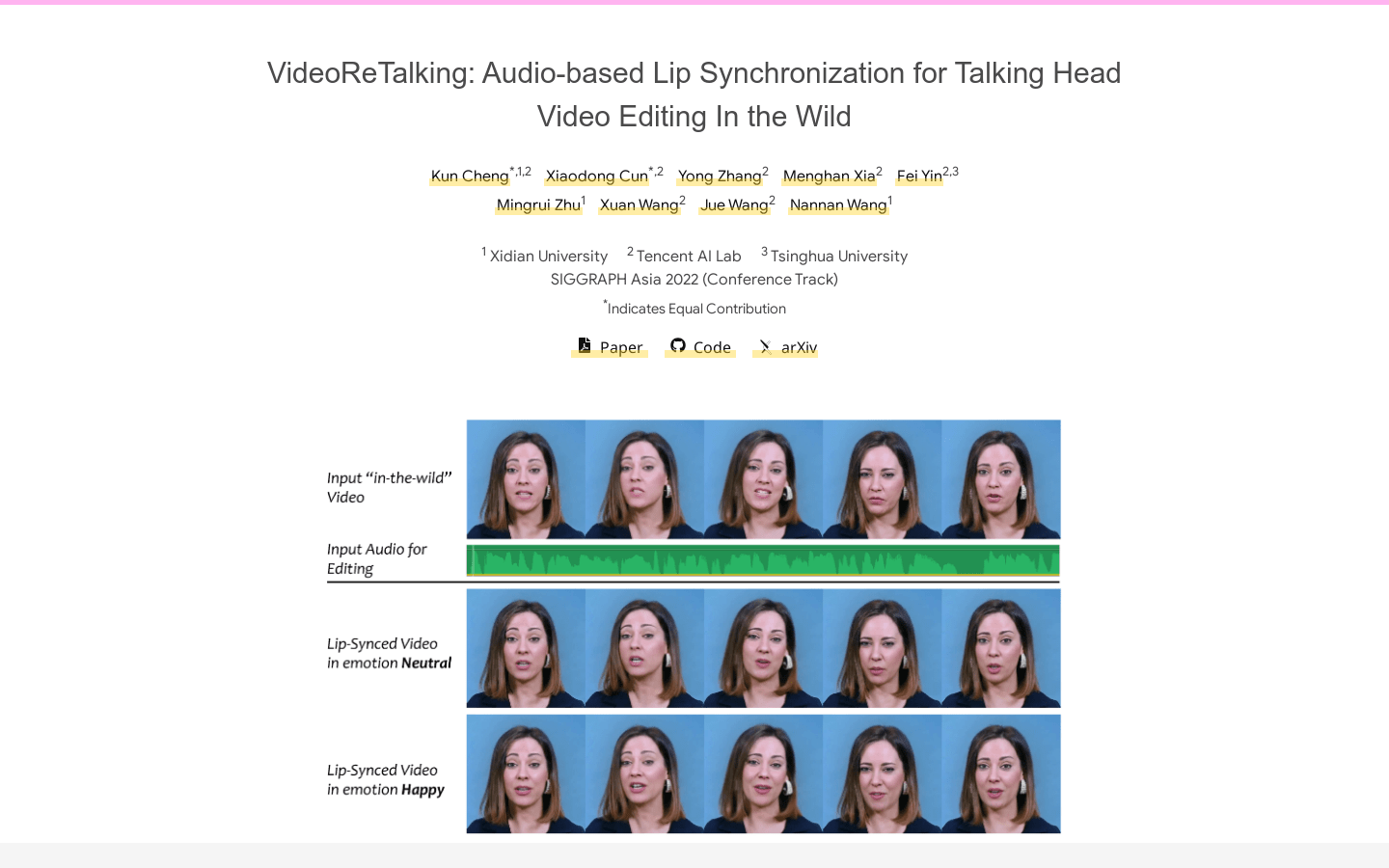

VideoReTalking

Audio-driven video editing for high-quality lip synchronization.
