Google recently announced "gemma-2-2b-jpn-it," the latest addition to its Gemma series of language models. The model is optimized specifically for Japanese and reflects Google's continued investment in large language models (LLMs).

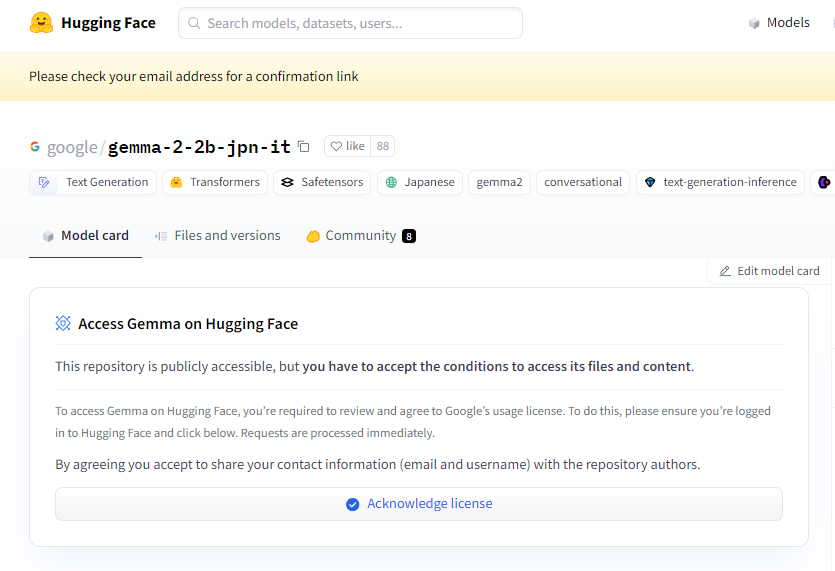

Project page: https://huggingface.co/google/gemma-2-2b-jpn-it

Gemma-2-2b-jpn-it is a text-to-text, decoder-only large language model with open weights: the weights are publicly available, and the model can be fine-tuned for text generation tasks such as question answering, summarization, and reasoning.

The new model has 2.61 billion parameters and uses the BF16 tensor type. It is built on the same research and technology as Google's Gemini models and ships with technical documentation and resources that help developers integrate it into their applications for inference. Notably, the model was trained on Google's latest TPU hardware, TPUv5p, which offers high compute capacity and significantly faster training than CPU-based infrastructure.
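To put the parameter count and tensor type in practical terms, the memory needed for the weights alone can be estimated by multiplying the two. The sketch below is a back-of-the-envelope calculation only; the helper name `weight_memory_gb` is made up for illustration, and the estimate ignores activations, the KV cache, and framework overhead.

```python
def weight_memory_gb(num_params: float, bytes_per_param: int = 2) -> float:
    """Approximate memory for the weights alone, in decimal gigabytes.

    BF16 stores each value in 16 bits, i.e. 2 bytes per parameter.
    """
    return num_params * bytes_per_param / 1e9


PARAMS = 2.61e9  # parameter count quoted in the article

print(f"BF16 weights: {weight_memory_gb(PARAMS):.2f} GB")
print(f"FP32 weights: {weight_memory_gb(PARAMS, 4):.2f} GB")  # for comparison
```

By this estimate, the BF16 weights take roughly 5.2 GB, about half of what the same model would need in FP32.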

On the software side, gemma-2-2b-jpn-it was trained using JAX and ML Pathways. JAX is designed for high-performance machine learning workloads, while ML Pathways provides a flexible platform for orchestrating the entire training process. Together they give Google an efficient training workflow.

The release of gemma-2-2b-jpn-it has drawn attention to its potential applications across several fields. The model is suited to content creation and communication: it can generate poetry, scripts, code, marketing copy, and chatbot responses. It can also condense large amounts of text into concise summaries, which makes it useful for research, education, and knowledge exploration.
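As an illustration of the summarization use case, here is a minimal sketch using the Hugging Face `transformers` library. It rests on several assumptions: `torch`, `transformers`, and `accelerate` are installed, the model license has been accepted on Hugging Face, and the hardware supports BF16. The helper names `build_summary_messages` and `generate` and the Japanese instruction text are hypothetical and not taken from Google's documentation.

```python
MODEL_ID = "google/gemma-2-2b-jpn-it"  # model ID from the project link


def build_summary_messages(text: str, max_sentences: int = 3) -> list[dict]:
    """Wrap a document in a single user turn asking for a short Japanese summary."""
    # Instruction reads: "Please summarize the following text in at most N sentences."
    instruction = f"次の文章を{max_sentences}文以内で要約してください。"
    return [{"role": "user", "content": f"{instruction}\n\n{text.strip()}"}]


def generate(messages: list[dict], max_new_tokens: int = 256) -> str:
    """Run the chat messages through the model and return only the new text."""
    # Heavy imports stay local so the helper above works without them.
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(MODEL_ID)
    model = AutoModelForCausalLM.from_pretrained(
        MODEL_ID,
        torch_dtype=torch.bfloat16,  # matches the BF16 weights
        device_map="auto",           # assumption: requires the accelerate package
    )
    # The tokenizer's chat template adds the model's turn markers.
    inputs = tokenizer.apply_chat_template(
        messages,
        add_generation_prompt=True,
        return_tensors="pt",
        return_dict=True,
    ).to(model.device)
    outputs = model.generate(**inputs, max_new_tokens=max_new_tokens)
    # Drop the prompt tokens and decode only what the model generated.
    new_tokens = outputs[0][inputs["input_ids"].shape[-1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True)
```

Calling `generate(build_summary_messages(article_text))` would download the weights on first use and return the model's summary as a string, where `article_text` is any Japanese document supplied by the caller.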

However, gemma-2-2b-jpn-it has limitations that users should be aware of. Its performance depends on the diversity and quality of its training data, so biases or gaps in that data can affect its responses. In addition, large language models are not knowledge bases: they generate text from patterns learned during training, so they may produce inaccurate or outdated factual statements, particularly when handling complex queries.

During development, Google also placed strong emphasis on ethical considerations, conducting rigorous evaluations of gemma-2-2b-jpn-it for content safety, representational harms, and training data memorization. Google has applied filtering techniques to exclude harmful content and set out a framework for transparency and accountability. It also encourages developers to monitor their deployments continuously and to adopt privacy-preserving techniques to comply with data privacy regulations.

Key Points:

🌟 Google's gemma-2-2b-jpn-it model is optimized for the Japanese language and has 2.61 billion parameters stored as BF16 tensors.

💡 The model has broad application potential in content creation and natural language processing, and it supports a range of text generation tasks.

🔒 Google emphasizes ethical considerations in model development, implementing content safety filters and privacy protection measures to mitigate risks.