In today's artificial intelligence field, training models is not only about designing better architectures but also about managing data effectively. Modern AI models require vast amounts of data, and that data must be delivered quickly to GPUs and other accelerators.

However, traditional data loading systems often cannot keep up with this demand. GPUs sit idle, training takes longer, and costs rise. The problem is especially pronounced when training has to scale up or handle multiple data types.

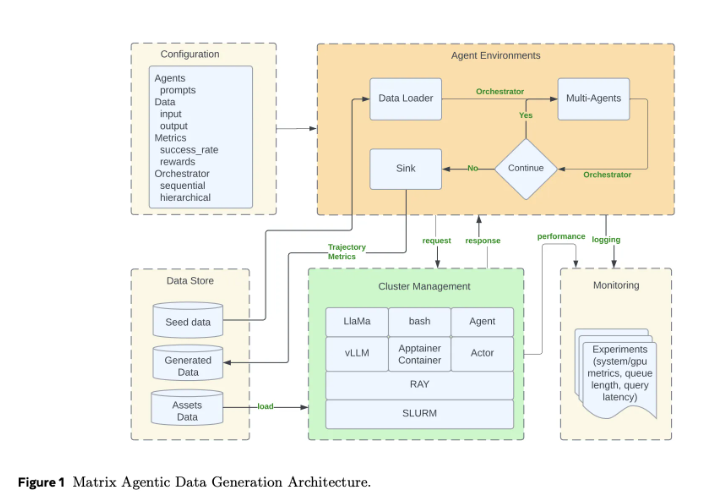

To address these challenges, Meta AI has developed SPDL (Scalable and Performant Data Loading), a tool designed to speed up the delivery of training data to AI models. Unlike traditional process-based data loaders, SPDL uses a thread-based loading approach, which significantly increases data transfer speeds. Whether data comes from cloud or local storage, SPDL is designed to integrate seamlessly into existing training workflows.

SPDL is designed with scalability in mind. It can run on distributed systems and supports everything from single-GPU training to large-scale cluster training. It is compatible with widely used AI frameworks such as PyTorch, which lowers the barrier to adoption. And because it is open source, anyone can use it or contribute improvements.

The core innovation of SPDL lies in its thread-based architecture. Because worker threads share a single process's memory, SPDL avoids the inter-process communication overhead (passing data between worker processes and the training process) that traditional process-based loaders incur. It also uses techniques such as prefetching and caching to keep prepared data ready for the GPUs, reducing idle time and improving overall system efficiency.
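To make the idea concrete, here is a minimal sketch in plain Python, using only the standard library. A pool of worker threads loads samples concurrently, a bounded queue holds a few ready batches ahead of the consumer (prefetching), and `functools.lru_cache` stands in for caching. This is not SPDL's API. `load_sample` and `prefetching_loader` are hypothetical names, and the trivial "decode" step is a placeholder. The sketch also assumes that real loading work is I/O-bound or runs in native code that releases Python's GIL, since that is what lets threads run it in parallel.

```python
import queue
import threading
from collections import deque
from concurrent.futures import ThreadPoolExecutor
from functools import lru_cache

_SENTINEL = object()  # marks the end of the stream


# Hypothetical stand-in for expensive I/O + decoding of one sample.
# lru_cache acts as a simple cache: repeated keys skip the "decode" work.
@lru_cache(maxsize=1024)
def load_sample(key: int) -> tuple[int, int]:
    return (key, key * key)


def prefetching_loader(keys, batch_size=4, num_threads=4, prefetch=2):
    """Yield batches while background threads prepare upcoming ones."""
    ready: queue.Queue = queue.Queue(maxsize=prefetch)  # at most `prefetch` waiting batches
    max_in_flight = num_threads * 2  # cap on samples being loaded at once

    def producer():
        try:
            with ThreadPoolExecutor(max_workers=num_threads) as pool:
                in_flight = deque()
                batch = []

                def drain_one():
                    # Wait for the oldest pending sample so batches keep input order.
                    batch.append(in_flight.popleft().result())
                    if len(batch) == batch_size:
                        ready.put(list(batch))  # blocks while the prefetch buffer is full
                        batch.clear()

                for key in keys:
                    in_flight.append(pool.submit(load_sample, key))
                    if len(in_flight) >= max_in_flight:
                        drain_one()
                while in_flight:
                    drain_one()
                if batch:
                    ready.put(list(batch))  # final partial batch
        except Exception as exc:
            ready.put(exc)  # hand the error to the consumer instead of hanging
        finally:
            ready.put(_SENTINEL)

    threading.Thread(target=producer, daemon=True).start()
    while (item := ready.get()) is not _SENTINEL:
        if isinstance(item, Exception):
            raise item
        yield item
```

For example, `list(prefetching_loader(range(10), batch_size=4))` returns three batches of 4, 4, and 2 samples in input order. Because the threads share the process's memory, finished samples reach the consumer as ordinary Python objects, without the serialization step a multi-process loader needs.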

The benefits of SPDL include:

1. Faster data transfer speeds: It can quickly deliver data to GPUs, avoiding delays caused by slow transfers.

2. Shorter training times: Keeping GPUs busy helps to reduce the overall training cycle.

3. Lower costs: By improving efficiency, it reduces the computational costs associated with training.

Meta AI's extensive benchmarks show that SPDL delivers 3 to 5 times the data throughput of traditional data loaders. For large AI models, this translates into training-time reductions of up to 30%. SPDL is particularly well suited to high-throughput data streams, excelling in real-time processing and in scenarios with frequent model updates. Meta is currently using SPDL at Reality Labs for augmented reality and virtual reality projects.
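Why would a 3-5x throughput gain yield "only" up to 30% less training time? A simple model in the spirit of Amdahl's law helps. We assume, and this is our assumption rather than Meta's, that each training step is compute time plus time the GPU spends waiting for data, and that only the waiting portion shrinks in proportion to loader throughput. The function names below are hypothetical.

```python
def new_step_time(stall_fraction: float, throughput_speedup: float) -> float:
    """Relative step time after faster data loading (original step time = 1.0).

    Assumes compute time (1 - stall_fraction) is unchanged and the data-stall
    portion shrinks in proportion to the loader's throughput speedup.
    """
    if not 0.0 <= stall_fraction <= 1.0:
        raise ValueError("stall_fraction must be in [0, 1]")
    if throughput_speedup <= 0:
        raise ValueError("throughput_speedup must be positive")
    return (1.0 - stall_fraction) + stall_fraction / throughput_speedup


def time_reduction(stall_fraction: float, throughput_speedup: float) -> float:
    """Fraction of training time saved under the model above."""
    return 1.0 - new_step_time(stall_fraction, throughput_speedup)


def stall_fraction_for(reduction: float, throughput_speedup: float) -> float:
    """Invert reduction = f * (1 - 1/s) to get the stall fraction f it implies."""
    if throughput_speedup <= 1:
        raise ValueError("throughput_speedup must exceed 1")
    return reduction / (1.0 - 1.0 / throughput_speedup)
```

Under this model, a 30% reduction with a 3x faster loader implies the GPU was waiting on data for roughly 45% of each step (37.5% at 5x). When data loading is a smaller share of step time, the end-to-end gain is correspondingly smaller.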

As demand for AI systems continues to grow, tools like SPDL will be crucial for keeping infrastructure efficient. By easing data bottlenecks, SPDL not only improves training efficiency but also opens the door to new research possibilities.

Details: https://ai.meta.com/blog/spdl-faster-ai-model-training-with-thread-based-data-loading-reality-labs/

Code access: https://github.com/facebookresearch/spdl

Key Points:

✅ **Improved Data Transfer Efficiency**: SPDL uses thread-based loading, significantly accelerating data transfer.

✅ **Reduced Training Time**: Compared to traditional methods, training time can be shortened by up to 30%.

✅ **Open Source Tool**: SPDL is an open-source project, so anyone can use it and contribute improvements.