As AI systems increasingly need to combine visual and textual data, understanding structured visual documents remains a hard problem. Traditional models often struggle to accurately parse tables, charts, infographics, and diagrams. This limits automated content extraction and comprehension and, in turn, applications in data analysis, information retrieval, and decision-making. To address this need, IBM recently released Granite-Vision-3.1-2B, a small visual language model designed specifically for document understanding.

Granite-Vision-3.1-2B can extract content from a range of visual formats, including tables, charts, and diagrams. It was trained on a carefully curated instruction-following dataset that combines public and synthetic data, which lets it handle a variety of document-related tasks. The model was built by fine-tuning a Granite large language model on both image and text data, extending the language model with visual understanding for a range of practical applications.

The model has three main components: a visual encoder, SigLIP, which encodes the input image into visual features; a visual-language connector, a two-layer multilayer perceptron (MLP) with GELU activation that projects those visual features into the language model's embedding space; and a large language model, Granite-3.1-2B-Instruct, whose 128k-token context length lets it handle long, complex inputs.
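To make the connector concrete, here is a minimal pure-Python sketch of a two-layer MLP with GELU that maps each visual-encoder patch feature to a vector in the language model's embedding space. The function names, the toy weights, and the tiny dimensions are illustrative assumptions; the real connector works on much larger tensors with learned weights, and this is not IBM's implementation.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[List[float]]  # row-major: one row per output dimension


def gelu(x: float) -> float:
    """Exact GELU: x * Phi(x), with the Gaussian CDF computed via math.erf."""
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))


def linear(weight: Matrix, bias: Vector, x: Vector) -> Vector:
    """Compute y = W x + b for a single input vector."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weight, bias)]


def connector(w1: Matrix, b1: Vector, w2: Matrix, b2: Vector, patch: Vector) -> Vector:
    """Two-layer MLP (Linear -> GELU -> Linear) mapping one visual-encoder
    patch feature into the language model's embedding space."""
    hidden = [gelu(h) for h in linear(w1, b1, patch)]
    return linear(w2, b2, hidden)


def project_patches(w1: Matrix, b1: Vector, w2: Matrix, b2: Vector,
                    patches: List[Vector]) -> List[Vector]:
    """Apply the same connector to every patch; each output vector becomes
    one 'visual token' in the language model's input sequence."""
    return [connector(w1, b1, w2, b2, p) for p in patches]
```

In this toy setup a 3-dimensional patch feature passes through a 4-unit hidden layer and comes out 2-dimensional; the point is only that one shared MLP converts every image patch into something the language model can read alongside text tokens.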

Granite-Vision-3.1-2B's training builds on LLaVA. The model uses features from multiple layers of the visual encoder rather than a single layer, together with a denser grid of resolutions in AnyRes, the LLaVA-NeXT technique that splits a high-resolution image into encoder-sized tiles. These changes help the model capture fine visual detail, so it can perform visual document tasks more accurately, such as analyzing tables and charts, performing optical character recognition (OCR), and answering questions about documents.
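The AnyRes idea can be sketched as follows: given a list of candidate resolutions built from whole tiles, pick the one that preserves the most image detail after an aspect-preserving resize, then cut it into tiles for the encoder. The selection rule below is modeled on the LLaVA-NeXT procedure (maximize effective resolution, break ties by least wasted area). The candidate list from `make_grid`, the 384-pixel tile size, and all function names are hypothetical; Granite-Vision's actual grid is defined in its own configuration.

```python
from typing import List, Tuple

Resolution = Tuple[int, int]  # (width, height)


def make_grid(tile: int, max_tiles: int) -> List[Resolution]:
    """Hypothetical candidate list: every cols x rows layout with at most max_tiles tiles."""
    return [
        (cols * tile, rows * tile)
        for cols in range(1, max_tiles + 1)
        for rows in range(1, max_tiles + 1)
        if cols * rows <= max_tiles
    ]


def select_best_resolution(image_size: Resolution, candidates: List[Resolution]) -> Resolution:
    """Choose the candidate that keeps the most image pixels after an
    aspect-preserving resize, breaking ties by the least padded area."""
    orig_w, orig_h = image_size
    best, best_effective, best_wasted = candidates[0], -1, float("inf")
    for w, h in candidates:
        scale = min(w / orig_w, h / orig_h)
        down_w, down_h = int(orig_w * scale), int(orig_h * scale)
        effective = min(down_w * down_h, orig_w * orig_h)  # never credit upscaling
        wasted = w * h - effective                         # padding inside the canvas
        if effective > best_effective or (effective == best_effective and wasted < best_wasted):
            best, best_effective, best_wasted = (w, h), effective, wasted
    return best


def tile_grid(resolution: Resolution, tile: int) -> Tuple[int, int]:
    """Number of encoder-sized tiles (columns, rows) covering the chosen resolution."""
    w, h = resolution
    return (w + tile - 1) // tile, (h + tile - 1) // tile
```

For an 800x600 page and `make_grid(384, 4)`, the 768x768 candidate wins and is cut into a 2x2 grid of tiles. Raising `max_tiles` adds more candidate layouts, which is one way to read "denser grid": the image can be matched to a layout closer to its own size and aspect ratio, so less detail is lost to downscaling.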

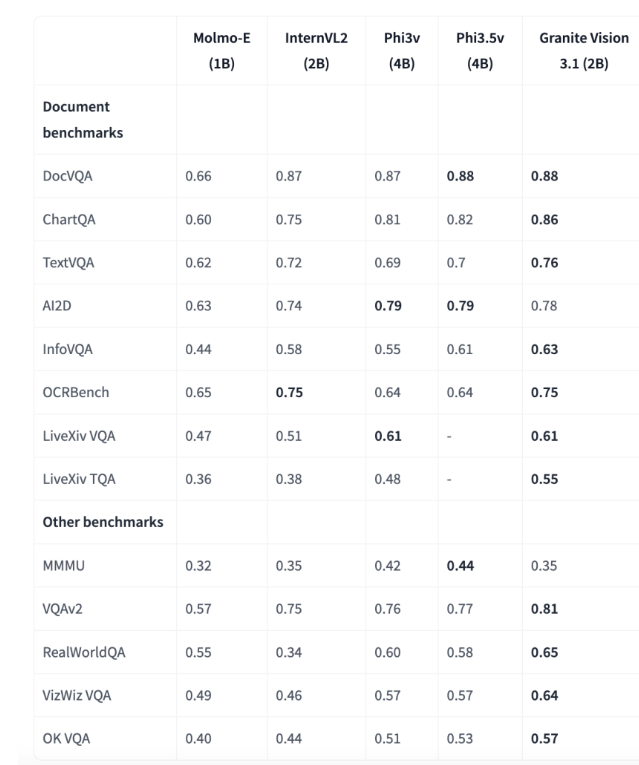

Evaluation results show strong performance across multiple benchmarks, particularly in document understanding. On ChartQA, the model scored 0.86, ahead of the other models in the 1B-4B parameter range that it was compared against. On TextVQA, it scored 0.76, showing that it can read and answer questions about text embedded in images. These results point to the model's potential for precise visual and textual data processing in enterprise applications.
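Scores such as 0.86 are fractions of questions answered correctly. ChartQA is conventionally scored with relaxed accuracy: a numeric answer counts as correct if it is within 5% of the reference, while non-numeric answers must match exactly. The sketch below is a simplified version of that metric; the normalization used in the harness behind the reported numbers may differ, and TextVQA uses a different metric based on agreement with multiple human answers, which is not shown here. All names are illustrative.

```python
from typing import List, Optional


def _to_float(text: str) -> Optional[float]:
    """Parse a numeric answer, tolerating a trailing '%'; None if not numeric."""
    try:
        return float(text.strip().rstrip("%"))
    except ValueError:
        return None


def relaxed_match(prediction: str, target: str, tolerance: float = 0.05) -> bool:
    """ChartQA-style check: numbers may be off by up to `tolerance` (relative
    to the target); other answers need a case-insensitive exact match."""
    pred_num, target_num = _to_float(prediction), _to_float(target)
    if pred_num is not None and target_num is not None:
        if target_num == 0:
            return pred_num == 0
        return abs(pred_num - target_num) / abs(target_num) <= tolerance
    return prediction.strip().lower() == target.strip().lower()


def relaxed_accuracy(predictions: List[str], targets: List[str]) -> float:
    """Fraction of questions answered correctly, e.g. 0.86 on a benchmark."""
    hits = sum(relaxed_match(p, t) for p, t in zip(predictions, targets))
    return hits / len(targets)
```

For example, predicting 104 when the reference is 100 counts as correct (4% off), while 106 does not.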

IBM's Granite-Vision-3.1-2B offers a compact, balanced option for visual document understanding. Its architecture and training methods enable efficient parsing and analysis of complex visual and textual data. Because it is natively supported in Hugging Face Transformers and vLLM, the model can be adapted to many use cases and run on modest cloud hardware such as a Colab T4 GPU, giving researchers and practitioners a practical tool for AI-driven document processing.
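The sketch below shows how one question about a document image might be sent to the model through Hugging Face Transformers. It follows the chat-template pattern from the model card as we understand it; the helper names `build_conversation` and `ask` are hypothetical, and class names and message keys can change between Transformers versions, so treat this as a starting point and check the model card for the current snippet.

```python
from typing import Any, Dict, List

MODEL_ID = "ibm-granite/granite-vision-3.1-2b-preview"


def build_conversation(image_path: str, question: str) -> List[Dict[str, Any]]:
    """One user turn holding an image and a question, in the chat-message
    format accepted by a Transformers processor's apply_chat_template."""
    return [
        {
            "role": "user",
            "content": [
                {"type": "image", "url": image_path},
                {"type": "text", "text": question},
            ],
        }
    ]


def ask(image_path: str, question: str, max_new_tokens: int = 100) -> str:
    """Load the model and answer a question about a document image.
    torch/transformers are imported here so the module imports without them."""
    import torch
    from transformers import AutoModelForVision2Seq, AutoProcessor

    device = "cuda" if torch.cuda.is_available() else "cpu"
    processor = AutoProcessor.from_pretrained(MODEL_ID)
    model = AutoModelForVision2Seq.from_pretrained(MODEL_ID).to(device)

    inputs = processor.apply_chat_template(
        build_conversation(image_path, question),
        add_generation_prompt=True,
        tokenize=True,
        return_dict=True,
        return_tensors="pt",
    ).to(device)
    output = model.generate(**inputs, max_new_tokens=max_new_tokens)
    # Decode only the newly generated tokens, skipping the echoed prompt.
    new_tokens = output[0][inputs["input_ids"].shape[1]:]
    return processor.decode(new_tokens, skip_special_tokens=True)
```

Usage: `print(ask("invoice.png", "What is the total amount due?"))`, where `invoice.png` is a hypothetical local image. Keeping the heavy imports inside `ask` is only a convenience so the message-building helper can be used and tested on machines without a GPU stack.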

Model: https://huggingface.co/ibm-granite/granite-vision-3.1-2b-preview

Key Points:

🌟 Granite-Vision-3.1-2B is a small visual language model launched by IBM, specifically designed for document understanding, capable of extracting content from various visual formats.

📊 The model combines a visual encoder (SigLIP), a visual-language connector (a two-layer MLP), and a large language model (Granite-3.1-2B-Instruct with a 128k context), which together let it handle complex, detailed inputs.

🏆 It performs strongly on multiple benchmarks, especially in document understanding (0.86 on ChartQA, 0.76 on TextVQA), showing strong potential for enterprise applications.